Machine vision-based method for acquiring picking point position information for famous high-quality tea

A position information and machine vision technology, applied in picking machines, instruments, harvesters, etc. It addresses the problems of low positioning accuracy, mutual occlusion of tea leaves, and low efficiency. Its effects are reduced impact, improved accuracy and efficiency, and improved integrity.


Embodiment Construction

[0072] The present invention is further explained below with reference to the embodiment shown in the drawings. However, the present invention is not limited to the following examples.

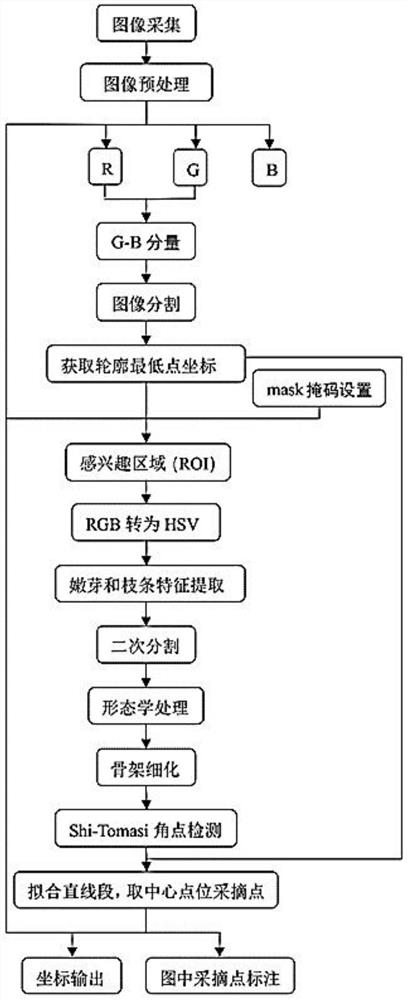

[0073] A machine vision-based method for acquiring picking point position information for famous high-quality tea, including the following steps:

[0074] Step 1: the tea image is obtained from the tea garden (Figure 2), and the collected tea sample image is processed with a 3 × 3 convolution kernel (see the processed image, Figure 3).
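As a rough illustration of processing an image with a 3 × 3 kernel, here is a minimal dependency-free sketch. The source does not say which kernel is used, so the mean (box) kernel below is an assumption, as are the names `convolve3x3` and `BOX_KERNEL` and the choice to replicate edge pixels at the border.

```python
def convolve3x3(image, kernel):
    """Apply a 3x3 kernel to a 2-D list of numbers, replicating edge pixels.

    The kernel is not flipped, which makes no difference for the symmetric
    kernel assumed below.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    # Clamp coordinates so border pixels reuse their nearest neighbour.
                    yy = min(max(y + ky - 1, 0), h - 1)
                    xx = min(max(x + kx - 1, 0), w - 1)
                    acc += image[yy][xx] * kernel[ky][kx]
            out[y][x] = acc
    return out


# Assumed kernel: a 3x3 mean (box) filter; the source does not name the kernel.
BOX_KERNEL = [[1 / 9] * 3 for _ in range(3)]

# Tiny single-channel example with one bright outlier pixel.
noisy = [
    [10, 10, 10, 10],
    [10, 100, 10, 10],
    [10, 10, 10, 10],
]
smoothed = convolve3x3(noisy, BOX_KERNEL)
```

In this toy input the outlier of 100 is averaged with its eight neighbours, which is the kind of noise suppression a smoothing kernel would provide before segmentation. A color image would be handled by applying the same function to each channel.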

[0075] In the RGB color model, the G-B component map of the image is obtained first. The Otsu algorithm is then used to perform an initial segmentation of the tea buds, and a morphological erosion operation is applied to filter out the small contours caused by noise and the like.
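The sequence described here (G-B component map, Otsu thresholding, erosion) can be sketched as follows. This is a minimal pure-Python illustration, not the patent's implementation: the function names, the 3 × 3 square structuring element, the treatment of pixels outside the image as background, and the synthetic test image are all assumptions.

```python
def g_minus_b(rgb):
    """Return the G-B component map, clipped to the range 0..255."""
    return [[max(0, min(255, g - b)) for (_r, g, b) in row] for row in rgb]


def otsu_threshold(gray):
    """Return the threshold t that maximises the between-class variance
    of the two classes {v <= t} and {v > t}."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * n for i, n in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # pixel count of the lower class
    sum0 = 0  # intensity sum of the lower class
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mean0 - mean1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def erode3x3(mask):
    """Binary erosion with a 3x3 square element (assumed size).
    Pixels outside the image count as background, so the border is cleared."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = int(all(mask[y + dy][x + dx]
                                for dy in (-1, 0, 1) for dx in (-1, 0, 1)))
    return out


def segment_buds(rgb):
    """G-B map -> Otsu threshold -> binary mask -> erosion."""
    gb = g_minus_b(rgb)
    t = otsu_threshold(gb)
    mask = [[int(v > t) for v in row] for row in gb]
    return erode3x3(mask)


# Synthetic 7x7 image: dark background, a 3x3 "bud" block, one noise pixel.
image = [[(40, 90, 60) for _ in range(7)] for _ in range(7)]
for y in range(1, 4):
    for x in range(1, 4):
        image[y][x] = (120, 200, 60)
image[5][5] = (120, 200, 60)  # isolated pixel standing in for noise
mask = segment_buds(image)
```

In the synthetic image the isolated bright pixel survives thresholding but is removed by the erosion, while the centre of the 3 × 3 block remains. With OpenCV available, `cv2.threshold` with the `cv2.THRESH_OTSU` flag and `cv2.erode` would be the usual way to perform the same two steps.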

[0076] Step 2: to reduce the influence of interfering points, lower the amount of image data to be processed, and improve the real-time performance of image processing, each of the acquired new buds is handled separately when the pick point identificatio...
