Multi-modal collaborative esophageal cancer lesion image segmentation system based on self-sampling similarity

A multi-modal esophageal cancer lesion segmentation technology, applied in the field of intelligent medical image processing, that addresses limitations of prior approaches and achieves more efficient and more precise segmentation.

Embodiment Construction

[0034] The embodiments of the present invention will be described in detail below, but the protection scope of the present invention is not limited to the examples.

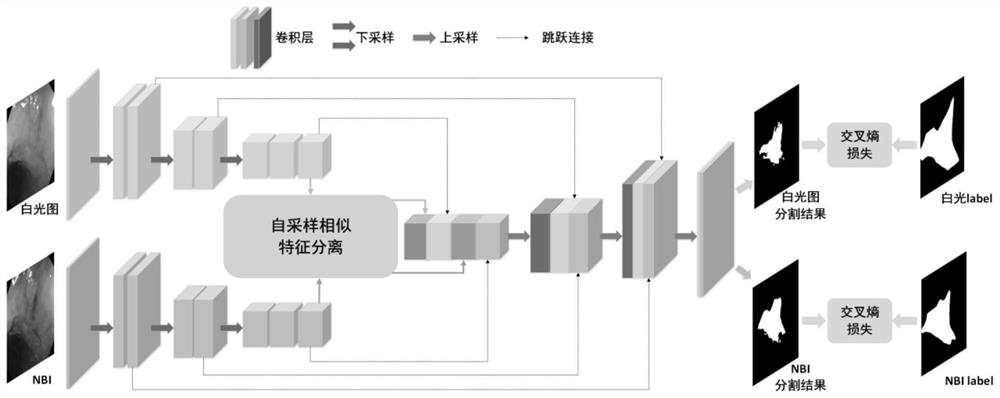

[0035] Using the network structure shown in Figure 1, a multimodal neural network is trained with 268 white-light/NBI image pairs to obtain an automatic lesion region segmentation model.

[0036] The specific steps are:

[0037] (1) During training, scale each image to 500×500. Set the initial learning rate to 0.0001 and the decay rate to 0.9, decaying once every two epochs. Use mini-batch stochastic gradient descent with a batch size of 4 to update all parameters in the network and minimize its loss function, training until convergence;

[0038] (2) During testing, resize the image I to 500×500 and input it into the trained model, which outputs the lesion region segmentation results for the white-light and NBI images;
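The hyperparameters in steps (1) and (2) can be sketched in plain Python as follows. This is a minimal illustration, not the patented implementation. It assumes that "cycle" means epoch, that the decay is a staircase (step) schedule, and that all 268 pairs are used in every epoch. The text does not specify the interpolation method for scaling, so the nearest-neighbour resize is also an assumption. All function names (`learning_rate`, `batches_per_epoch`, `resize_nearest`) are hypothetical.

```python
import math

# Values stated in the text.
INITIAL_LR = 1e-4   # initial learning rate
DECAY_RATE = 0.9    # multiplicative decay factor
DECAY_EVERY = 2     # decay once every two epochs (assumes "cycle" = epoch)
BATCH_SIZE = 4      # mini-batch size
NUM_PAIRS = 268     # white-light/NBI image pairs
TARGET_SIZE = 500   # images are scaled to 500x500


def learning_rate(epoch: int) -> float:
    """Step decay (assumed staircase): multiply by DECAY_RATE every DECAY_EVERY epochs."""
    return INITIAL_LR * DECAY_RATE ** (epoch // DECAY_EVERY)


def batches_per_epoch(num_samples: int = NUM_PAIRS, batch_size: int = BATCH_SIZE) -> int:
    """Number of mini-batches needed to visit every sample once."""
    return math.ceil(num_samples / batch_size)


def resize_nearest(image, size: int = TARGET_SIZE):
    """Nearest-neighbour resize of a 2-D nested list to size x size.

    The interpolation method is an assumption; the source only gives the target size.
    """
    h, w = len(image), len(image[0])
    return [
        [image[r * h // size][c * w // size] for c in range(size)]
        for r in range(size)
    ]


if __name__ == "__main__":
    for epoch in range(6):
        print(epoch, learning_rate(epoch))
    print("batches per epoch:", batches_per_epoch())
```

Under these assumptions, the learning rate stays at 0.0001 for epochs 0 and 1 and is multiplied by 0.9 at every second epoch after that, and one pass over 268 pairs with a batch size of 4 takes 67 parameter updates.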

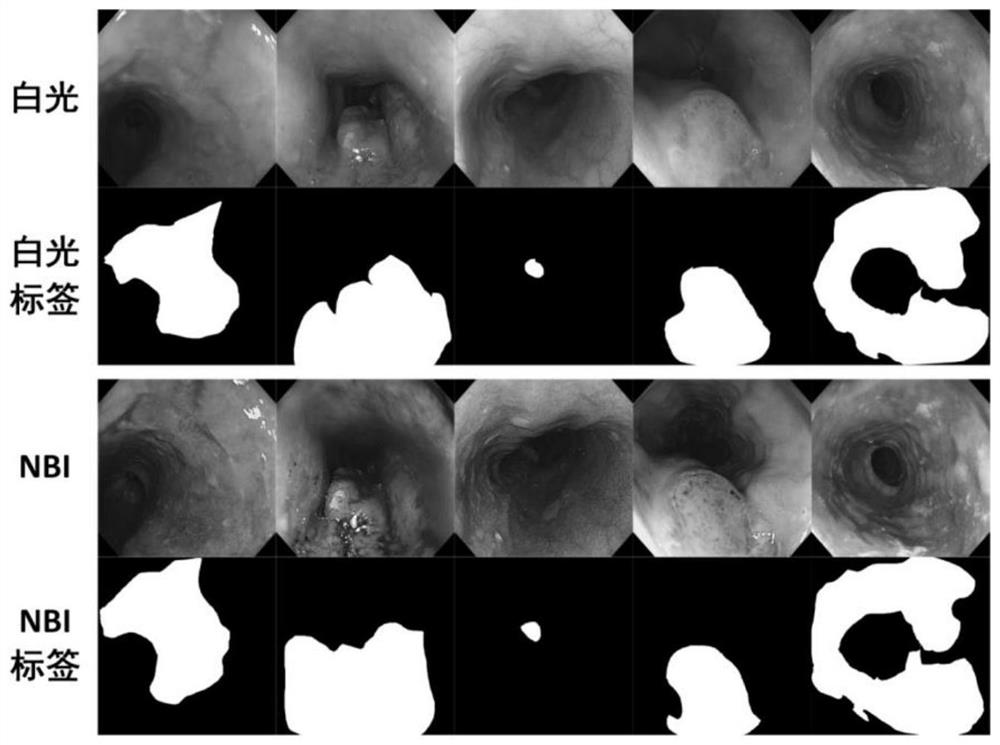

[0039] Figure 4 shows the segmentation results...
