Deep learning feature compression and decompression method, system and terminal

A deep learning feature compression method, applied in the field of image processing and computer vision. It addresses the stated problems that no comparable descriptions or reports have been found and no similar data has been collected, and it achieves the effects of saving transmission bandwidth and offering flexibility.


Embodiment Construction

[0060] The embodiments of the present invention are described in detail below. This embodiment is implemented on the basis of the technical solution of the present invention and provides a detailed implementation and a specific operating process. It should be noted that those skilled in the art can make modifications and improvements without departing from the concept of the present invention, and all of these fall within the scope of protection of the present invention.

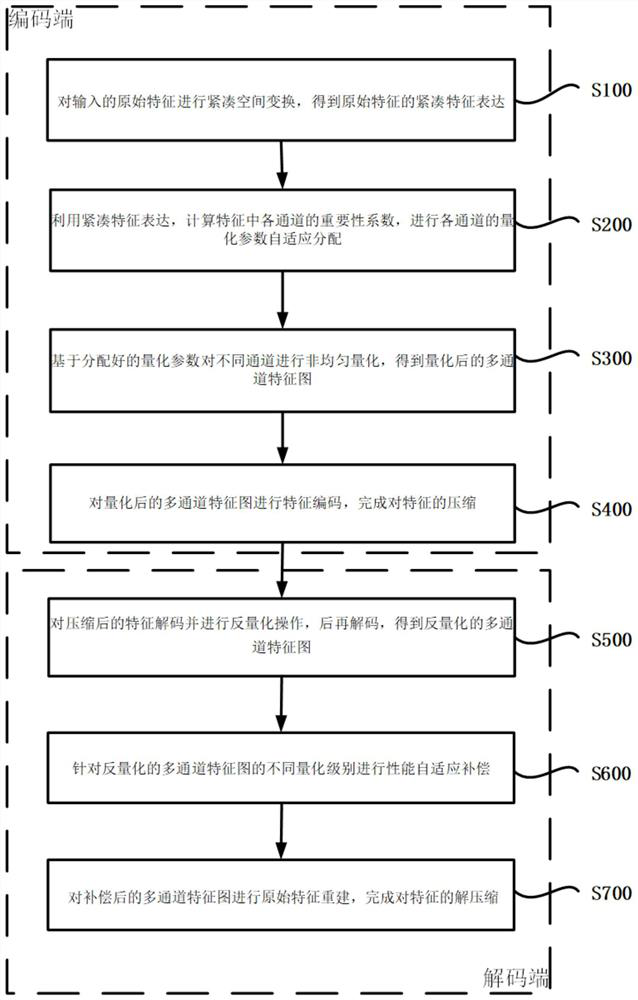

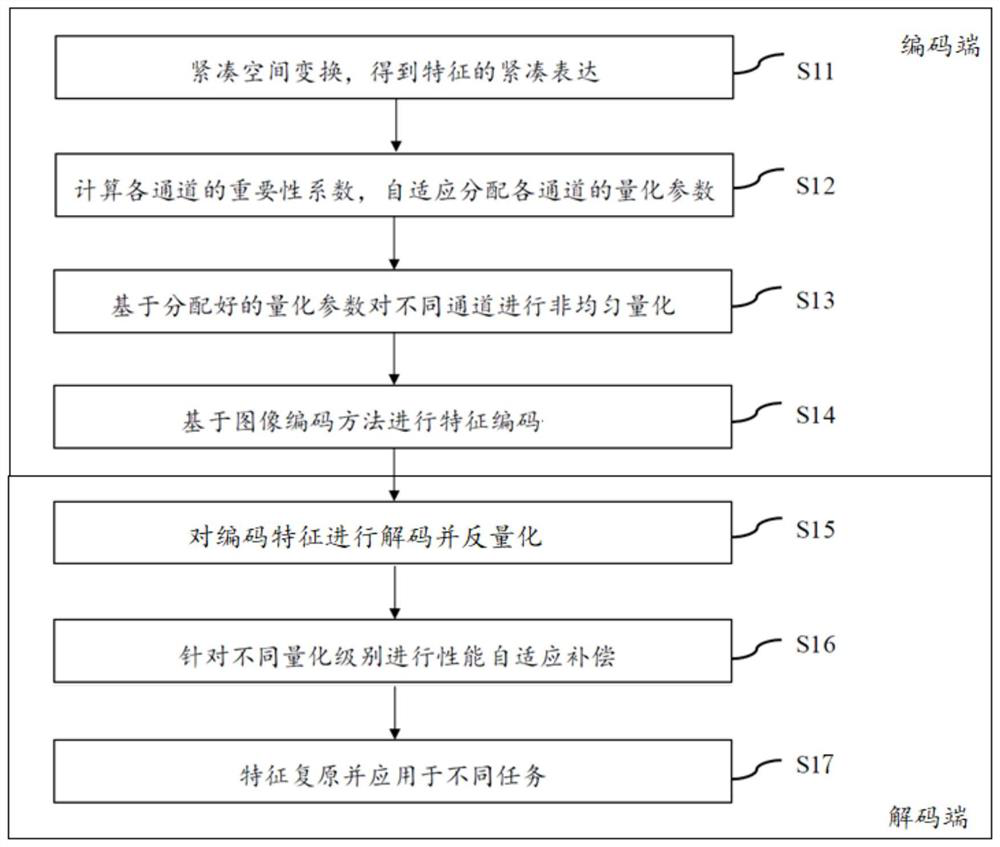

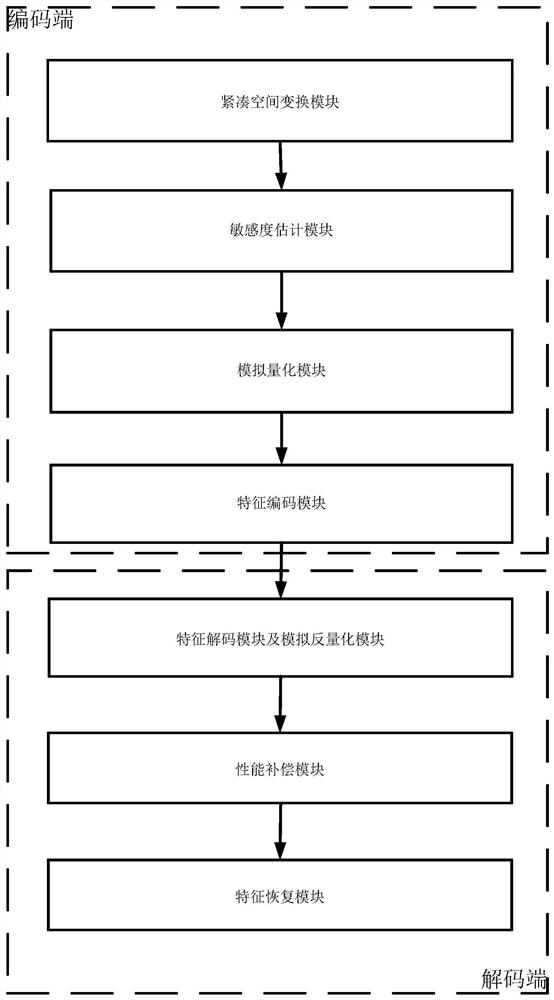

[0061] Figure 1 is a flow chart of the deep learning feature compression method and its corresponding decompression method provided by an embodiment of the present invention.

[0062] As shown in Figure 1, an embodiment of the present invention provides a deep learning feature compression method, which may include the following steps:

[0063] On the encoding side:

[0064] S100, performing a compact space transformation on the input original features to obtain a compact feature expression...
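For illustration only, the idea behind step S100 can be sketched as a dimensionality-reducing projection. The text available here does not specify which compact space transformation the embodiment uses, so the sketch below substitutes a PCA-style linear projection as a stand-in. The function names `fit_compact_transform`, `compress`, and `decompress` and the parameter `k` are hypothetical and do not come from the patent.

```python
import numpy as np


def fit_compact_transform(features, k):
    """Fit a PCA-style projection onto k dimensions.

    Assumption: PCA is only a stand-in for the unspecified
    compact space transformation in step S100.
    """
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:k]


def compress(features, mean, basis):
    """Encoding side: map original features to a compact feature expression."""
    return (features - mean) @ basis.T


def decompress(compact, mean, basis):
    """Decoding side: approximately restore the original features."""
    return compact @ basis + mean


# Hypothetical usage: 32 feature vectors of dimension 64 reduced to 8 values each.
rng = np.random.default_rng(0)
original = rng.normal(size=(32, 64))
mean, basis = fit_compact_transform(original, k=8)
compact = compress(original, mean, basis)
restored = decompress(compact, mean, basis)
print(compact.shape, restored.shape)
```

In this sketch the compact expression holds 8 values per feature vector instead of 64, which is the kind of reduction that would save transmission bandwidth. The restoration is lossy unless the features lie in a subspace of dimension at most `k`.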
