Data set reduction method and system for deep neural network model training

A deep neural network model training technology, applied in the field of deep neural network models, that addresses the problem of model training demanding ever more resources and budget without significantly improving the accuracy of the target model.


Embodiment Construction

[0019] To make the above objects, features, and advantages of the present invention clearer and easier to understand, the invention is described in further detail below through specific examples.

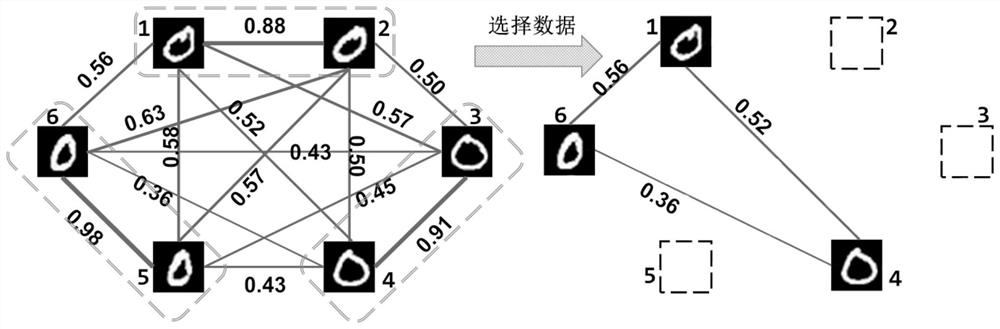

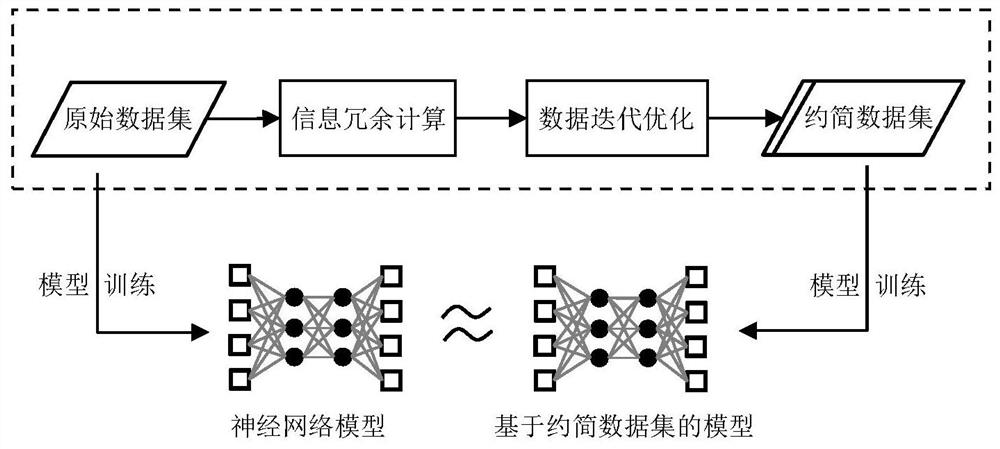

[0020] The present invention mainly targets scenarios in which the training data of a deep neural network model contains a large amount of redundant information, which wastes a large share of the model training cost. To reduce the computing power wasted during model training and to improve the training effect, the invention studies a method for measuring information redundancy for deep neural network models, and how to efficiently remove this redundancy from massive data to obtain more representative data points. As shown in Figure 1, the original training data set contains six pictures whose content is "0". By calculating the information redundancy between these pictures (that is, the value on the edge)...
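The excerpt stops before specifying how the redundancy value on each edge is computed or how redundant pictures are then removed, so the following is only a minimal sketch under stated assumptions, not the patented method. It assumes each picture is already represented by a feature vector, uses cosine similarity between vectors as a hypothetical stand-in for the edge redundancy value, and reduces the set by greedily deleting the node with the largest total redundancy to the remaining nodes until a target size is reached. The function names `cosine_similarity`, `build_redundancy_graph`, and `reduce_dataset`, the parameter `keep`, and the toy data are all illustrative.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Hypothetical redundancy measure: cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as sharing no information
    return dot / (norm_a * norm_b)


def build_redundancy_graph(features):
    """Complete graph over samples: maps each pair (i, j), i < j, to its edge weight."""
    return {
        (i, j): cosine_similarity(features[i], features[j])
        for i, j in combinations(range(len(features)), 2)
    }


def reduce_dataset(features, keep):
    """Greedily drop the most redundant sample until `keep` samples remain.

    Returns the sorted indices of the retained samples.
    """
    if not 0 <= keep <= len(features):
        raise ValueError("keep must be between 0 and the number of samples")
    edges = build_redundancy_graph(features)
    remaining = set(range(len(features)))

    def total_redundancy(node):
        # Sum of edge weights between `node` and every other still-remaining sample.
        return sum(
            w for (i, j), w in edges.items()
            if node in (i, j) and i in remaining and j in remaining
        )

    while len(remaining) > keep:
        # max() returns the first maximal item, so ties go to the lowest index.
        victim = max(sorted(remaining), key=total_redundancy)
        remaining.remove(victim)
    return sorted(remaining)


if __name__ == "__main__":
    # Toy feature vectors for six pictures (hypothetical data).
    features = [[1, 0], [1, 0], [1, 0], [0, 1], [0, 1], [1, 1]]
    print(reduce_dataset(features, keep=2))  # → [2, 4]
```

In the toy input, indices 0 to 2 and 3 to 4 are exact duplicates of two patterns and index 5 overlaps with both; the sketch drops the overlapping sample first, then collapses each duplicate group to a single representative. The loop rescans every edge on each step, which keeps the logic easy to follow but would be far too slow for massive data, where vectorized similarity computation or approximate nearest-neighbor search would be needed.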
