Load prediction method and device and resource scheduling method and device for multiple cloud data centers

A load prediction technology for multiple data centers, applied in the fields of cloud computing and data mining, with the effect of improving prediction accuracy.

Examples

Embodiment 1

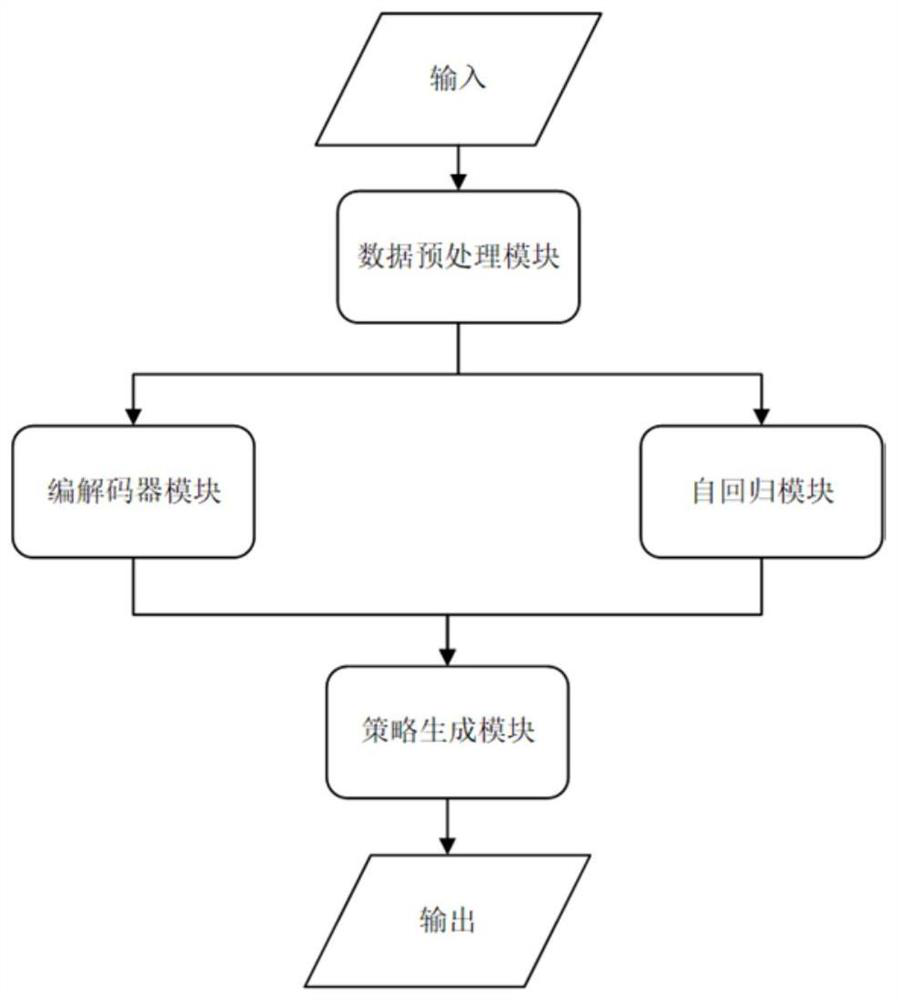

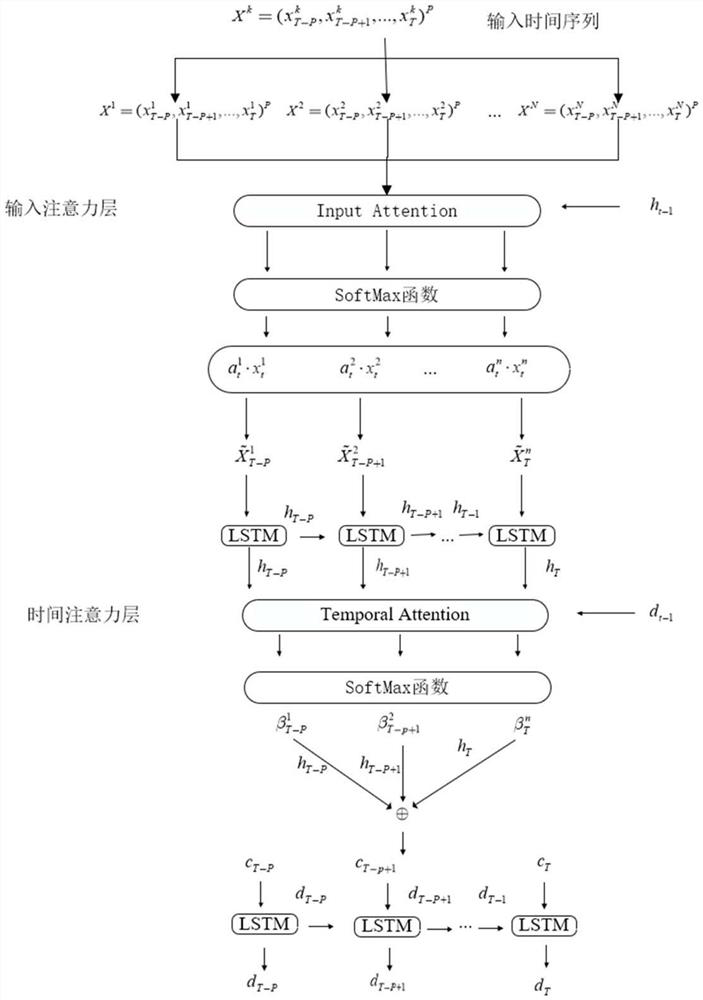

[0069] The present invention designs a load prediction method and a resource scheduling method for multiple cloud data centers. The system takes the log record files of each virtual machine acquired by the cloud data center as input, preprocesses the data, and predicts both the linear and the nonlinear changes in load, so that the system can finally generate corresponding resource allocation and scheduling strategies from the load prediction results.

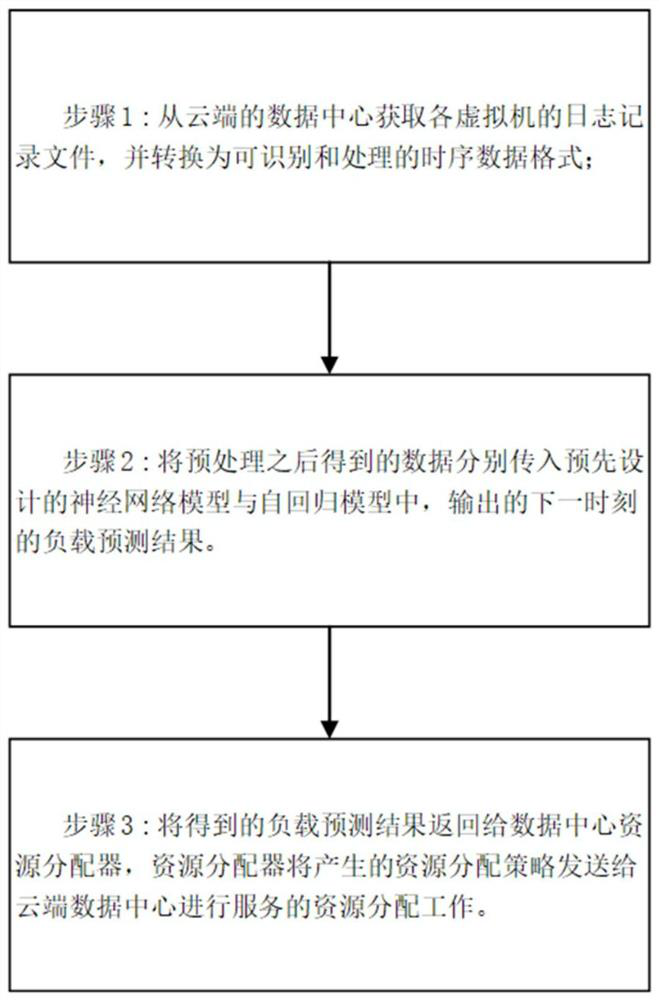

[0070] The load forecasting method for multiple cloud data centers of the present invention, as shown in Figure 2, includes the following steps:

[0071] Step 1: first obtain, from the cloud data center, the log record files of the virtual machines on the servers in the cluster. Each virtual machine's log file records its usage of the various resources it occupies at each time point. Extract the required feature quantities from the log record file and convert them into a time series data format that the s...
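The conversion described in Step 1 might look roughly like the following minimal sketch. The CSV layout, the column names (`timestamp`, `cpu`, `mem`), the window length, and the helper names `parse_vm_log` and `make_windows` are all assumptions made for illustration; the patent text does not specify the log format or the windowing scheme.

```python
import csv
import io

def parse_vm_log(text):
    """Parse a hypothetical CSV log into a time-ordered list of
    (timestamp, [cpu, mem]) pairs. Column names are assumed."""
    rows = []
    for rec in csv.DictReader(io.StringIO(text)):
        rows.append((int(rec["timestamp"]),
                     [float(rec["cpu"]), float(rec["mem"])]))
    rows.sort(key=lambda r: r[0])  # logs may arrive out of order
    return rows

def make_windows(series, window):
    """Slide a fixed-length window over the series. Each sample pairs
    `window` consecutive feature vectors with the CPU load that follows."""
    samples = []
    for i in range(len(series) - window):
        features = [vec for _, vec in series[i:i + window]]
        target = series[i + window][1][0]  # next CPU load (assumed target)
        samples.append((features, target))
    return samples

# Illustrative data only, not taken from the patent.
SAMPLE_LOG = """timestamp,cpu,mem
3,0.40,0.55
1,0.20,0.50
2,0.30,0.52
4,0.50,0.60
"""

series = parse_vm_log(SAMPLE_LOG)
samples = make_windows(series, window=2)
```

Each element of `samples` holds an input feature sequence and the load value that follows it, which is one plausible shape for the time series data a predictor would consume.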

Embodiment 2

[0135] Based on the same inventive concept as Embodiment 1, this embodiment of the present invention provides a load forecasting device for multiple cloud data centers, including:

[0136] The data processing module is used to obtain the log record file that records the resource usage of the virtual machine at each point in time, extract the required feature data and historical load data from it, and convert the feature data and historical load data into the corresponding input feature sequence and historical load vector;

[0137] The nonlinear component prediction module is used to calculate the nonlinear component of the load prediction by using the pre-built neural network model based on the obtained input feature sequence and historical load vector;

[0138] A linear component forecasting module, configured to use a pre-built autoregressive model to calculate a linear component of the load forecast based on the obtained historical load vector;

[0139] The prediction result calcul...
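The interplay of the modules listed above can be sketched as follows. This is an illustration under stated assumptions, not the patented implementation: the autoregressive coefficients are taken as already fitted (the text says the model is pre-built), the neural network is replaced by an arbitrary callable, and summing the two components is an assumption, since the paragraph describing how the result is calculated is truncated in the source. The names `ar_linear_component` and `predict_load` are hypothetical.

```python
def ar_linear_component(history, weights, bias=0.0):
    """AR(p) forecast: bias plus a weighted sum of the last p loads.
    weights[0] applies to the most recent observation."""
    p = len(weights)
    if p == 0 or len(history) < p:
        raise ValueError("need at least one weight and p history values")
    recent = list(history[-p:])[::-1]  # most recent value first
    return bias + sum(w * x for w, x in zip(weights, recent))

def predict_load(history, features, nonlinear_model, weights, bias=0.0):
    """Combine both components. `nonlinear_model` stands in for the
    pre-built neural network; adding the components is an assumption."""
    nonlinear = nonlinear_model(features, history)
    linear = ar_linear_component(history, weights, bias)
    return nonlinear + linear

# Illustrative values only.
history = [0.2, 0.3, 0.4]
weights = [0.5, 0.25]

def stub_network(features, hist):
    # Placeholder for a trained neural network.
    return 0.1

linear_part = ar_linear_component(history, weights)
prediction = predict_load(history, [[0.4, 0.55]], stub_network, weights)
```

Keeping the autoregressive term separate from the network lets the linear part track the scale of recent load directly, while the network only has to model what the linear term misses.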

Embodiment 3

[0142] Based on the same inventive concept as Embodiment 1, a resource allocator in the embodiment of the present invention includes:

[0143] The load prediction module is used to calculate and obtain the load prediction results of the virtual machines on each server in the cluster in the cloud multi-data center environment based on the above method;

[0144] The resource scheduling module is configured to generate corresponding resource scheduling policies based on the load prediction results of the virtual machines on each server.
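One way a scheduling module could turn predictions into a policy is sketched below. The source does not describe the policy itself, so everything here is hypothetical: the thresholds `high` and `low`, the action tuples, and the greedy rule of moving the heaviest virtual machine from an overloaded server to the least loaded one.

```python
def plan_scheduling(predicted, high=0.8, low=0.2):
    """predicted maps server -> {vm: predicted load}.
    Returns a list of hypothetical scheduling actions."""
    totals = {s: sum(vms.values()) for s, vms in predicted.items()}
    actions = []
    for server, vms in predicted.items():
        if totals[server] > high and vms:
            target = min(totals, key=totals.get)  # least loaded server
            vm = max(vms, key=vms.get)            # heaviest VM on this server
            fits = totals[target] + vms[vm] <= high
            if target != server and fits:
                actions.append(("migrate", vm, server, target))
                totals[server] -= vms[vm]
                totals[target] += vms[vm]
        elif totals[server] < low:
            # Underused server: flag it so its VMs can be consolidated.
            actions.append(("consolidate", server))
    return actions

# Illustrative predictions only.
predicted = {
    "s1": {"vm1": 0.6, "vm2": 0.3},
    "s2": {"vm3": 0.1},
    "s3": {"vm4": 0.5},
}
plan = plan_scheduling(predicted)
```

Because the plan is built from predicted rather than current load, a migration can be issued before the overload actually occurs, which is the benefit the text attributes to coupling prediction with scheduling.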

[0145] For the specific implementation scheme of each module of the device of the present invention, refer to the implementation process of each step of the method in Embodiment 1.

[0146] Those skilled in the art should understand that the embodiments of the present application may be provided as methods, systems, or computer program products. Accordingly, the present application may take the form of an entirely hardware embodiment, an entire...
