Monocular 3D reconstruction method with depth prediction

A monocular 3D reconstruction technique with depth prediction, applied in the field of 3D reconstruction. It addresses problems such as missing shape detail in the reconstructed scene, the difficulty of training a neural network on the basic principles of multi-view geometry, and blurring. Its stated effects are improved tracking and 3D reconstruction accuracy, and a higher frame rate while reconstruction accuracy is maintained.

Embodiment Construction

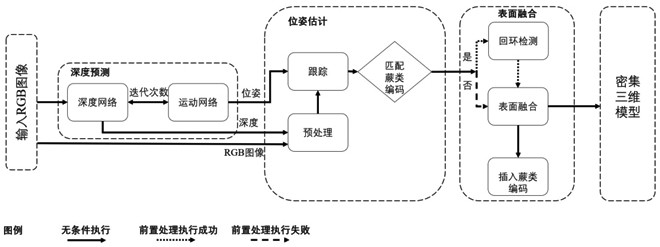

[0076] A monocular 3D reconstruction method with depth prediction, comprising the following steps:

[0077] A. Use the monocular depth estimation network to obtain a depth map and a rough pose estimate for the RGB image;

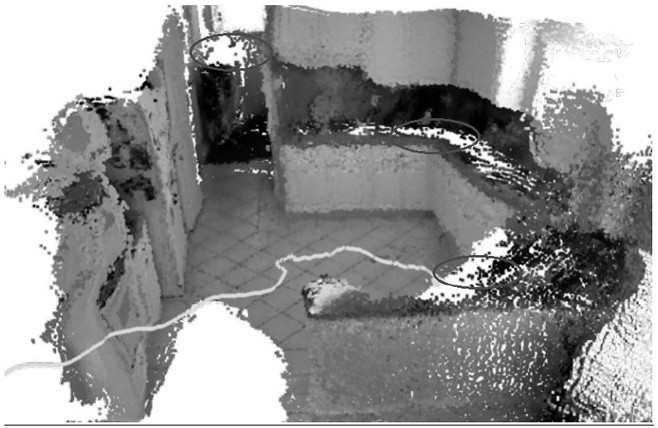

[0078] B. Combine the ICP algorithm and the PnP algorithm to estimate the camera pose, perform loop closure detection at the local and global levels to ensure the consistency of the reconstructed model, and use uncertainty to refine the depth map and improve the reconstruction quality;

[0079] C. Convert the depth map into a global model, and then insert the random fern code of the current frame into the database.
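As an illustration of step C, the following is a minimal sketch and not the patented implementation. It assumes a pinhole camera with intrinsic matrix `K`, a 4x4 camera-to-world pose `T_wc`, RGB and depth values normalised to [0, 1] for the fern tests, and a simple in-memory list as the database. The names `backproject_depth` and `FernDatabase` are hypothetical and do not appear in the source.

```python
import numpy as np


def backproject_depth(depth, K, T_wc):
    """Lift a depth map (H, W) to world-frame 3D points (N, 3).

    Assumes a pinhole model; pixels with depth <= 0 are skipped.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - K[0, 2]) * z / K[0, 0]
    y = (v[valid] - K[1, 2]) * z / K[1, 1]
    pts_cam = np.stack([x, y, z], axis=1)
    # Rotate and translate camera-frame points into the world frame.
    return pts_cam @ T_wc[:3, :3].T + T_wc[:3, 3]


class FernDatabase:
    """Toy random-fern frame encoder with a list-backed database."""

    def __init__(self, shape, n_ferns=64, seed=0):
        rng = np.random.default_rng(seed)
        h, w = shape
        # Each fern is one binary test: a pixel, a channel, a threshold.
        self.rows = rng.integers(0, h, n_ferns)
        self.cols = rng.integers(0, w, n_ferns)
        self.channels = rng.integers(0, 4, n_ferns)  # R, G, B or depth
        self.thresholds = rng.uniform(0.0, 1.0, n_ferns)
        self.codes = []

    def encode(self, rgb, depth):
        """Return the binary fern code of one RGB-D frame."""
        rgbd = np.dstack([rgb, depth])  # (H, W, 4)
        samples = rgbd[self.rows, self.cols, self.channels]
        return samples > self.thresholds

    def dissimilarity(self, code):
        """Smallest normalised Hamming distance to any stored code."""
        if not self.codes:
            return 1.0
        return min(float(np.mean(code != c)) for c in self.codes)

    def insert(self, code):
        self.codes.append(code)
```

In a sketch like this, a low dissimilarity between the current frame's code and a stored code would flag a candidate for global loop closure; the threshold for that decision is not given in the text above and would have to be chosen separately.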

[0080] In step A, during the forward propagation stage, iterative optimization between the subnetworks produces accurate depth predictions. The depth map is then corrected according to the camera parameters, and the result is passed to the pose estimation module.
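The text does not state the correction formula. One common form of camera-parameter correction for learned monocular depth, shown here purely as an assumption, rescales the predicted depth by the ratio between the focal length of the current camera and that of the camera used for the training data. The function name and parameters below are hypothetical.

```python
import numpy as np


def rescale_depth_for_camera(pred_depth, f_current, f_training):
    """Scale a predicted depth map by the focal-length ratio.

    Assumption: the network was trained on images from a camera with
    focal length f_training (pixels) and is run on a camera with focal
    length f_current (pixels). This is an illustrative correction, not
    necessarily the one used in the patent.
    """
    if f_current <= 0 or f_training <= 0:
        raise ValueError("focal lengths must be positive")
    depth = np.asarray(pred_depth, dtype=np.float64)
    return depth * (f_current / f_training)
```

Under this assumption, the corrected map would then be handed to the pose estimation module in place of the raw network output.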

[0081] In step A,

[0082] Transform the RGB image into a depth image...
