Assembly image segmentation method and device based on deep learning and guided filtering

A technique combining guided filtering and deep learning, applied in the field of image processing. It addresses problems such as mutual occlusion between parts, reduced efficiency, and the cost of manpower and material resources, and it improves the model's data-fitting, segmentation, and learning ability.


Examples

Embodiment 1

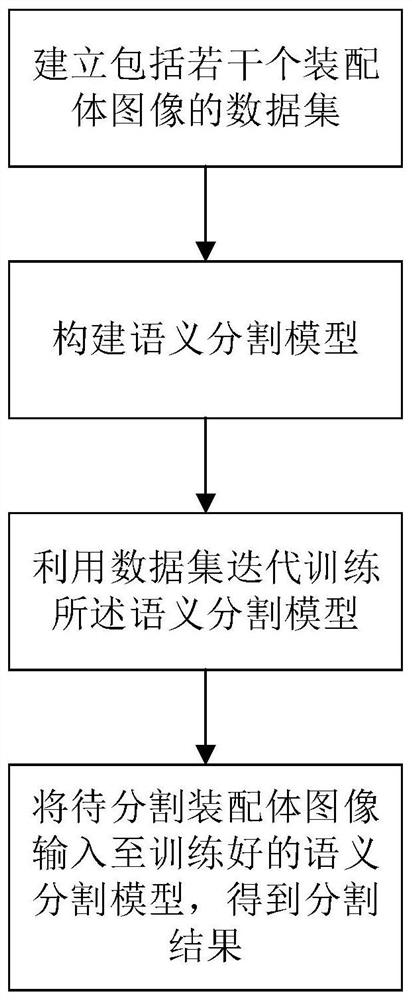

[0034] Referring to Figure 1, an assembly image segmentation method based on deep learning and guided filtering includes the following steps:

[0035] S1. Establish a data set including several assembly depth images.

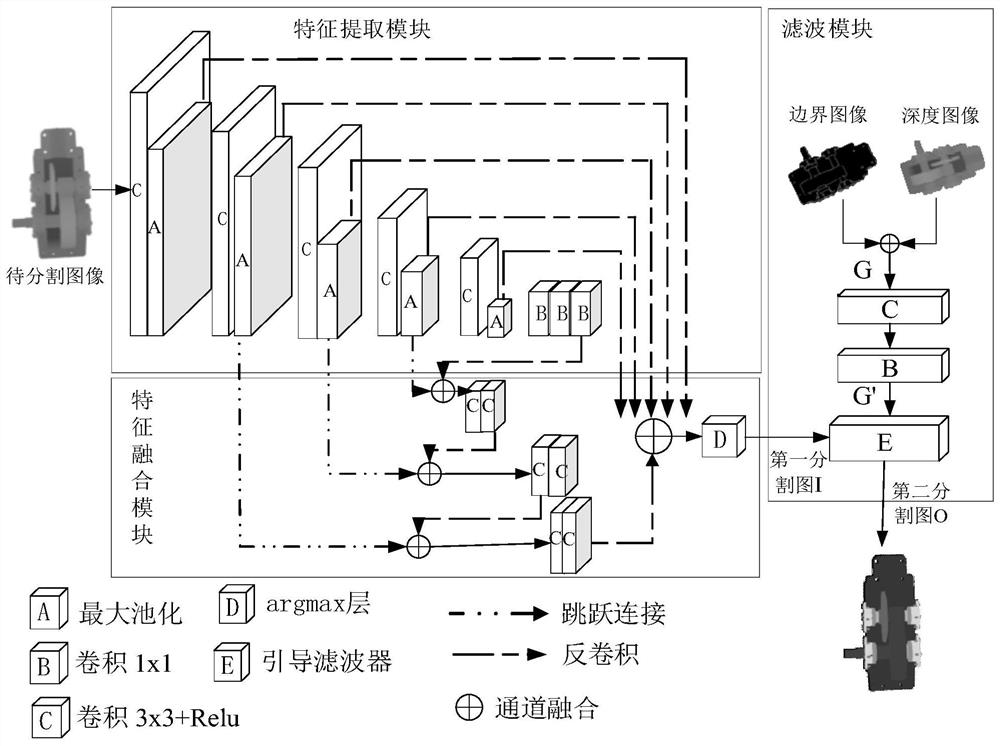

[0036] S2. As shown in Figure 2, construct a semantic segmentation model including a feature extraction module, a feature fusion module and a filtering module:

[0037] Build a feature extraction module. The feature extraction module consists of the following layers connected in sequence: 3×3 convolutional layer → ReLU activation layer → max pooling layer → 3×3 convolutional layer → ReLU activation layer → max pooling layer → 3×3 convolutional layer → ReLU activation layer → max pooling layer → 3×3 convolutional layer → ReLU activation layer → max pooling layer → 3×3 convolutional layer → ReLU activation layer → max pooling layer → 1×1 convolutional layer → 1×1 convolutional layer → 1×1 convolutional layer. Here, the 3×3 convolutional layer indicates that the convolutional layer uses a 3×3 con...
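The layer order above can be written down as a short sketch that also tracks how the spatial size shrinks. The text does not state padding, stride, or pooling size, so this sketch assumes size-preserving convolutions and non-overlapping 2×2 max pooling; the names `build_layer_sequence` and `output_size` are hypothetical and used only for illustration.

```python
def build_layer_sequence():
    """Return layer names in the order listed in paragraph [0037]."""
    layers = []
    for _ in range(5):
        layers += ["conv3x3", "relu", "maxpool"]
    layers += ["conv1x1"] * 3
    return layers


def output_size(size, layers, pool=2):
    """Track the spatial size through the stack.

    Assumption: convolutions keep the size unchanged and each max pooling
    layer divides it by `pool` (neither is stated in the source text).
    """
    for name in layers:
        if name == "maxpool":
            size //= pool
    return size


layers = build_layer_sequence()
print(len(layers), output_size(256, layers))  # prints: 18 8
```

Under these assumptions a 256×256 input reaches the 1×1 convolutions as an 8×8 map, which is why the later stages need upsampling to recover resolution.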

Embodiment 2

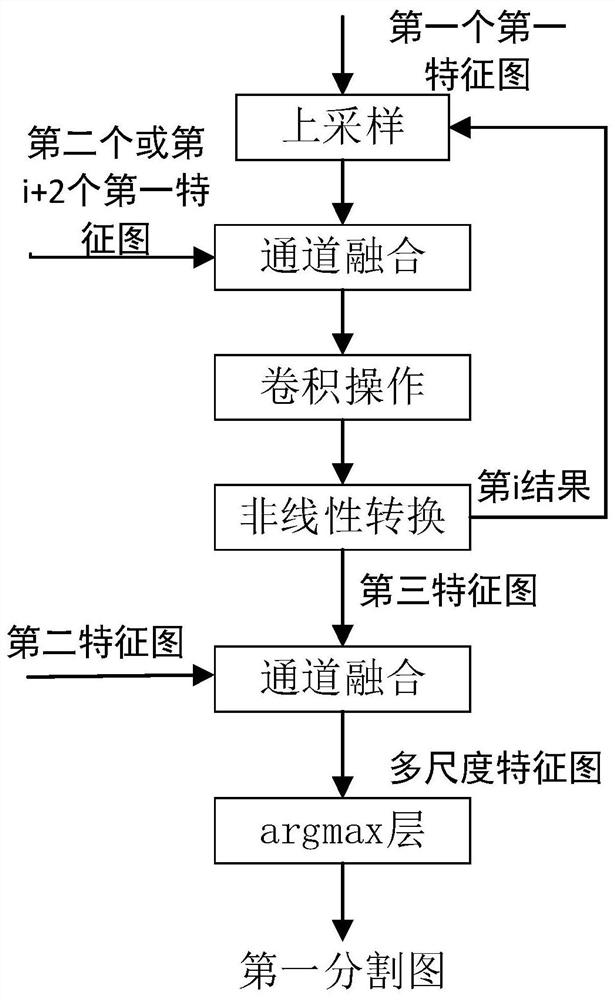

[0052] Further, referring to Figure 3, the specific steps of generating the third feature map in Embodiment 1 are as follows:

[0053] The first feature map output by the last 1×1 convolutional layer in the feature extraction module (that is, the first of the first feature maps) is upsampled by deconvolution (upsampling module). It is then combined with the first feature map output by the penultimate max pooling layer in the feature extraction module (that is, the second of the first feature maps) through channel fusion, convolution and nonlinear transformation (channel fusion module → 3×3 convolutional layer → ReLU activation layer → 3×3 convolutional layer → ReLU activation layer) to obtain the first result;
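A minimal sketch of the upsample-then-fuse step follows, using plain nested lists shaped `[channel][row][column]`. Two assumptions are made: nearest-neighbour upsampling is swapped in for the learned deconvolution named in the text, and channel fusion is taken to mean concatenation along the channel axis. The function names are hypothetical, and the convolution and ReLU layers that follow the fusion are omitted.

```python
def upsample_nearest(fmap, factor=2):
    """Nearest-neighbour upsampling of a [C][H][W] nested list.

    Stand-in for the deconvolution upsampling in the text (assumption).
    """
    out = []
    for channel in fmap:
        rows = []
        for row in channel:
            wide = [v for v in row for _ in range(factor)]
            rows.extend(list(wide) for _ in range(factor))
        out.append(rows)
    return out


def channel_fusion(a, b):
    """Concatenate two [C][H][W] maps along the channel axis (assumption)."""
    if len(a[0]) != len(b[0]) or len(a[0][0]) != len(b[0][0]):
        raise ValueError("spatial sizes must match before fusion")
    return a + b


deep = [[[1, 2], [3, 4]]]                                  # 1 channel, 2x2
skip = [[[0] * 4 for _ in range(4)] for _ in range(2)]     # 2 channels, 4x4
fused = channel_fusion(upsample_nearest(deep), skip)
print(len(fused), len(fused[0]), len(fused[0][0]))         # prints: 3 4 4
```

The size check in `channel_fusion` reflects why the deeper map is upsampled first: the two maps must share height and width before their channels can be stacked.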

[0054] Perform an upsampling operation on the first result, and then perform channel fusion, convolution and nonlinear transformation with the feature map output by the penultimate max pooling layer in the feature extraction module (that is, the third first feature...

Embodiment 3

[0057] The Sobel operator is used to extract the boundary features of the assembly image to be semantically segmented, yielding a boundary image. The assembly image to be segmented and the boundary image are input to the channel fusion module for channel fusion, yielding the guide image G. The guide image G is then passed through a 3×3 convolutional layer, a ReLU activation layer, and a 1×1 convolutional layer to obtain the optimized guide image G′.
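The construction of the guide image G can be sketched as follows. The sketch uses the standard 3×3 Sobel kernels and takes the gradient magnitude as the boundary image. The border handling (border pixels left at 0), the use of magnitude rather than separate x and y responses, and the function names are assumptions not stated in the text; the learned layers that turn G into G′ are omitted.

```python
def sobel_magnitude(img):
    """Gradient magnitude of a 2D list using the 3x3 Sobel kernels.

    Border pixels are left at 0.0 (assumption; no border rule is given).
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(kx[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(ky[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def make_guide(img):
    """Stack the image and its boundary image as two channels (guide G)."""
    return [img, sobel_magnitude(img)]


# 4x4 depth image with a vertical step edge between columns 1 and 2.
depth = [[0, 0, 1, 1] for _ in range(4)]
G = make_guide(depth)
print(G[1][1])  # prints: [0.0, 4.0, 4.0, 0.0]
```

The boundary channel responds only at the step edge, which is the information the guide image contributes beyond the raw depth values.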

[0058] According to the optimized guide image G′, the first segmentation image I is linearly filtered to obtain the second segmentation image O, which is expressed as:

[0059]

[0060]

[0061] $O = A_h * I + b_h$

[0062] Here, i and k both denote the index of a pixel in the image; $I_i$ denotes the value of the i-th pixel in the first segmentation image I; $O_i$ denotes the value of the i-th pixel in the second segmentation image O; are the coefficients of the local linear function; Indicates that the reconstruction...
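The final linear mapping in [0061] can be illustrated with a small sketch. It assumes that the coefficient maps $A_h$ and $b_h$ have already been computed and have the same size as I, and that `*` denotes an element-wise product; neither point is confirmed by the surviving text. The function name and the sample values are hypothetical.

```python
def apply_linear_filter(A, I, b):
    """Return O with O[y][x] = A[y][x] * I[y][x] + b[y][x].

    Treating '*' in O = A_h * I + b_h as element-wise is an assumption.
    """
    return [
        [a * i + c for a, i, c in zip(row_a, row_i, row_b)]
        for row_a, row_i, row_b in zip(A, I, b)
    ]


# Illustrative values only; they do not come from the patent.
I = [[0.2, 0.8], [0.5, 1.0]]    # first segmentation image
A = [[1.0, 0.5], [2.0, 0.0]]    # per-pixel slope coefficients
b = [[0.0, 0.1], [-0.5, 0.3]]   # per-pixel offset coefficients
O = apply_linear_filter(A, I, b)
```

Where a slope coefficient is 0 the output depends only on the offset, which shows how the coefficient maps control how much of I passes through at each pixel.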
