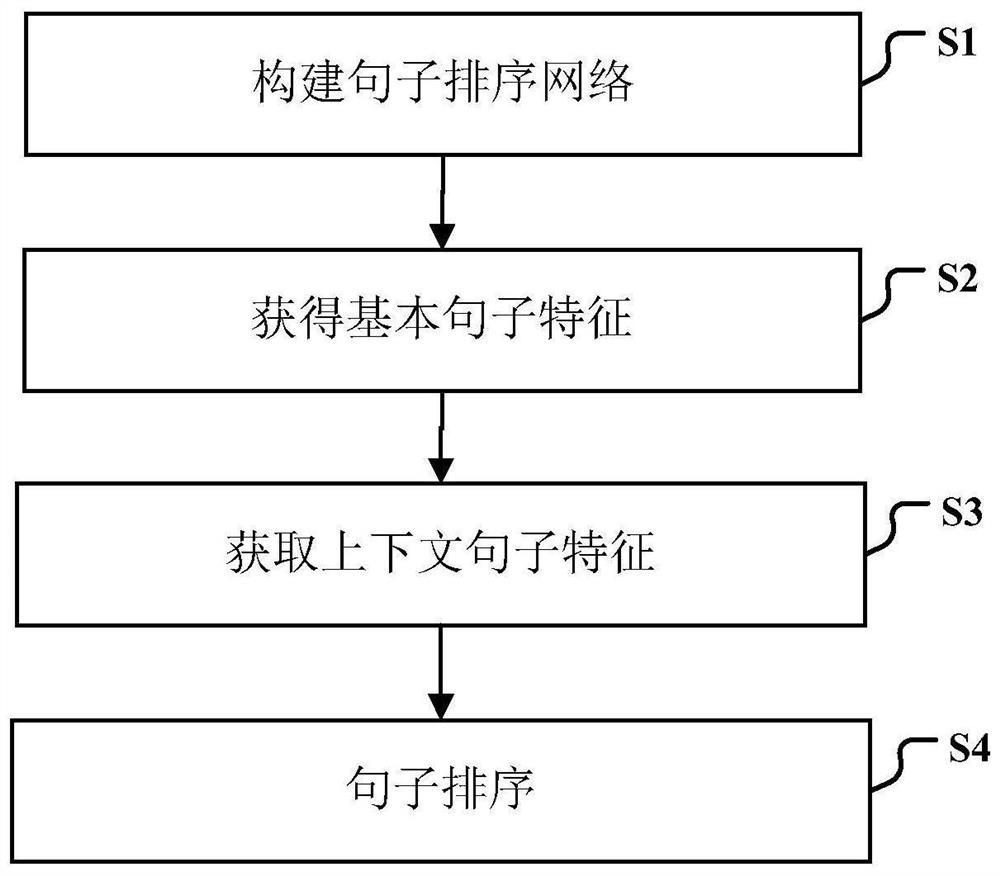

Non-autoregressive sentence sorting method

A sentence sorting method based on non-autoregressive technology, applied in the field of sentence sorting, that addresses problems of existing methods such as high algorithmic complexity, high overhead, and the inability to predict in parallel.


Embodiment Construction

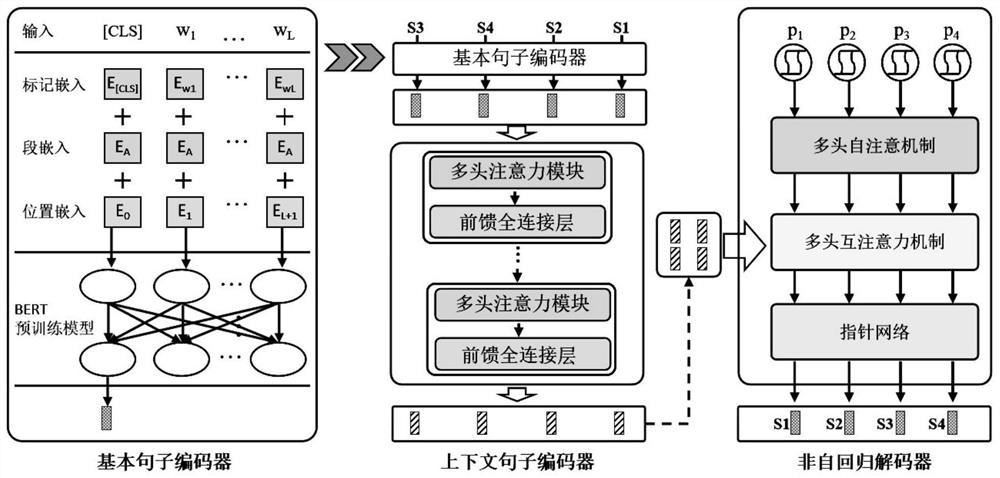

[0044] Specific embodiments of the present invention are described below with reference to the accompanying drawings, so that those skilled in the art can better understand the invention. Note that in the following description, detailed descriptions of known functions and designs are omitted where they would obscure the main content of the present invention.

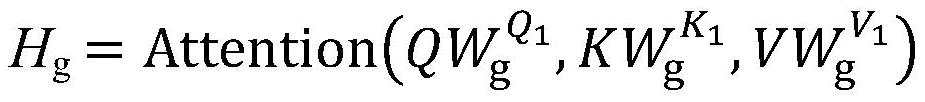

[0045] In the existing sentence sorting method, the encoder uses a Bi-LSTM to extract a basic feature vector for each sentence, applies a self-attention mechanism to extract sentence features that incorporate the context of the paragraph, and then obtains the paragraph features through an average pooling operation. Note that the self-attention module here is a Transformer variant with the position encoding removed. For decoding, a pointer network architecture is used as the decoder. The decoder is composed of LSTM units. The basic sentence feature vector is used as the input of the d...
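The encoder and decoder roles described in [0045] can be illustrated with a minimal pure-Python sketch. It is not the patent's implementation: the fixed input vectors stand in for Bi-LSTM sentence features, the attention uses identity projections and a single head, and the decoder's state update is a toy average standing in for an LSTM unit. All function names (`self_attention`, `mean_pool`, `greedy_pointer_decode`) are hypothetical. The sketch shows two properties: without position encoding, the attention output does not depend on the order in which sentences are presented, and pointer-style decoding selects one sentence per step, so each step depends on the previous one.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(sentences):
    # Single-head self-attention with identity projections and NO position
    # encoding (simplifying assumption), so each output row depends only on
    # the set of sentence vectors, not on their order.
    scale = math.sqrt(len(sentences[0]))
    out = []
    for q in sentences:
        weights = softmax([dot(q, k) / scale for k in sentences])
        out.append([sum(w * v[i] for w, v in zip(weights, sentences))
                    for i in range(len(q))])
    return out


def mean_pool(vectors):
    # Average pooling over sentence features gives one paragraph vector.
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def greedy_pointer_decode(contextual, paragraph):
    # Pointer-style decoding: at each step, point at the best unchosen sentence.
    # The state update below is a toy stand-in for an LSTM unit (assumption).
    state = list(paragraph)
    remaining = set(range(len(contextual)))
    order = []
    while remaining:
        best = max(sorted(remaining), key=lambda i: dot(state, contextual[i]))
        order.append(best)
        remaining.remove(best)
        state = [0.5 * s + 0.5 * c for s, c in zip(state, contextual[best])]
    return order


# Hypothetical sentence vectors standing in for Bi-LSTM outputs.
sentences = [[1.0, 0.0, 2.0], [0.5, 1.5, -1.0], [-2.0, 0.3, 0.7]]
contextual = self_attention(sentences)
paragraph = mean_pool(contextual)
predicted_order = greedy_pointer_decode(contextual, paragraph)
```

Because the loop in `greedy_pointer_decode` needs the state produced by the previous selection, its steps cannot run in parallel, which is the limitation the summary attributes to autoregressive decoding.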
