Multi-modal interaction behavior identification method based on RGB and three-dimensional skeleton

A multi-modal recognition method, applied in the fields of artificial intelligence and computer video understanding, that addresses problems such as a high probability of misclassification, low discriminability between similar actions, and interference, and thereby improves recognition accuracy.

Embodiment Construction

[0015] To make the purpose, content, and advantages of the present invention clearer, specific embodiments of the invention are described in further detail below.

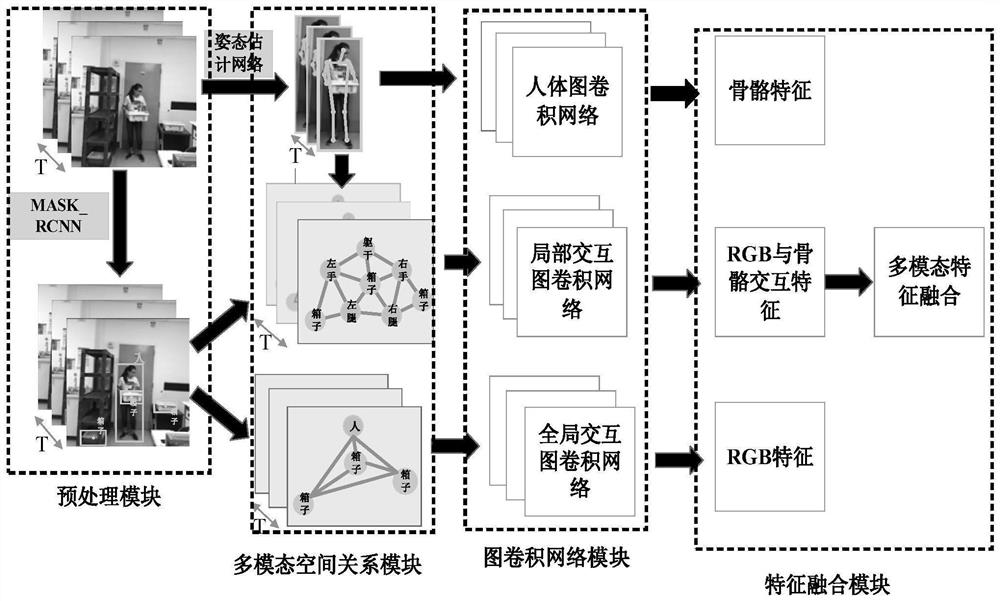

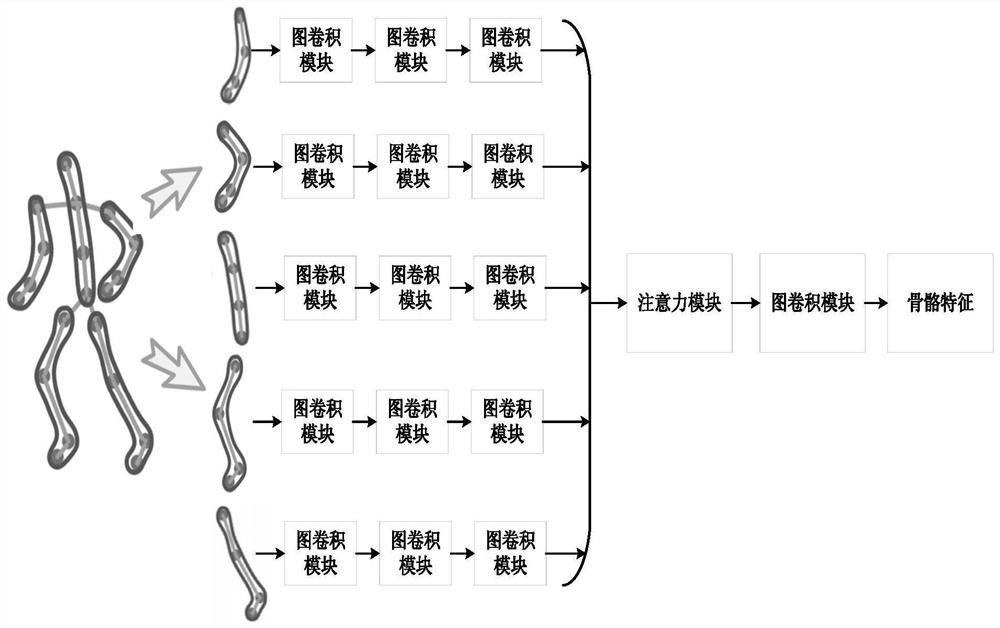

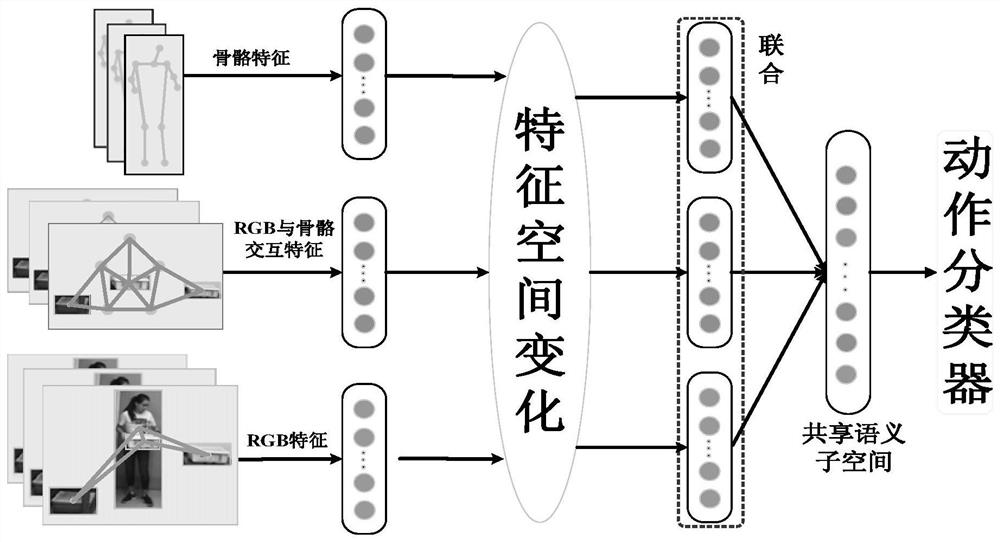

[0016] The multi-modal interactive behavior recognition method based on RGB and three-dimensional skeleton proposed by the present invention mainly includes the following steps: video preprocessing, multi-modal spatial relationship construction, feature extraction with a graph convolutional network, and feature fusion. The video is first preprocessed to extract information about the people and objects in it. The multiple modalities are then used to construct the spatial relationships between people and objects, from global to local. A graph convolutional network extracts the corresponding deep features, and finally the features of the individual modalities are fused at the feature layer and the decision layer to recognize human interaction behavior. The details are as follows:

[0017] (1) Video preprocessing: extraction of objec...
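The flow outlined in paragraph [0016] (graph convolution over a person-object graph, followed by fusion at the feature layer and the decision layer) can be illustrated with a minimal NumPy sketch. It is not the patented implementation. The three-node toy graph, the symmetric adjacency normalization, the mean pooling, the random weights, the equal decision weights, and every function name below are assumptions made only for illustration; the source text does not specify them.

```python
import numpy as np


def normalized_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2}, a normalization commonly used in GCNs (assumed here)."""
    a_hat = adj + np.eye(adj.shape[0])   # add self-loops, so every degree is >= 1
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(x, adj_norm, weight):
    """One graph convolution: aggregate neighbour features, project, apply ReLU."""
    return np.maximum(adj_norm @ x @ weight, 0.0)


def softmax(z):
    """Numerically stable softmax over a 1-D vector of class scores."""
    e = np.exp(z - z.max())
    return e / e.sum()


def feature_level_fusion(features):
    """Feature-layer fusion, sketched as concatenation of per-modality vectors."""
    return np.concatenate(features)


def decision_level_fusion(logits_per_modality, weights):
    """Decision-layer fusion, sketched as a weighted average of class probabilities."""
    probs = np.stack([softmax(l) for l in logits_per_modality])
    w = np.asarray(weights, dtype=float)
    return (w / w.sum()) @ probs


def demo(seed=0, n_classes=5):
    rng = np.random.default_rng(seed)
    # Hypothetical graph: node 0 = person, node 1 = hand joint, node 2 = object.
    adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
    adj_norm = normalized_adjacency(adj)

    x_rgb = rng.normal(size=(3, 8))    # stand-in RGB appearance features per node
    x_skel = rng.normal(size=(3, 3))   # stand-in 3D coordinates per node

    # Random weights stand in for trained parameters.
    f_rgb = gcn_layer(x_rgb, adj_norm, rng.normal(size=(8, 4))).mean(axis=0)
    f_skel = gcn_layer(x_skel, adj_norm, rng.normal(size=(3, 4))).mean(axis=0)

    fused = feature_level_fusion([f_rgb, f_skel])
    logits = [
        f_rgb @ rng.normal(size=(4, n_classes)),
        f_skel @ rng.normal(size=(4, n_classes)),
        fused @ rng.normal(size=(8, n_classes)),
    ]
    probs = decision_level_fusion(logits, weights=[1.0, 1.0, 1.0])
    return fused, probs


if __name__ == "__main__":
    fused, probs = demo()
    print("fused feature shape:", fused.shape)
    print("predicted class index:", int(probs.argmax()))
```

In this sketch each modality gets its own graph-convolution branch over the same person-object graph. Concatenation and probability averaging are only one simple way to realize the two fusion stages, and a trained system would learn the weights rather than sample them.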
