Resource scheduling implementation method based on energy consumption and QoS collaborative optimization

A resource scheduling and collaborative optimization technology, applied in resource allocation, energy-saving computing, program startup/switching, and related areas. It addresses problems such as impracticality, slow convergence, and a complicated solution process, and it improves efficiency while optimizing the total time cost.


Embodiment

[0091] A resource scheduling implementation method based on collaborative optimization of energy consumption and QoS, comprising the following steps:

[0092] S1. Construct a cloud task arrival queuing model for multiple virtual machines (VMs) in a cloud computing data center environment;

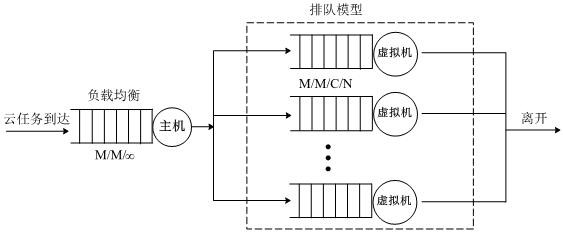

[0093] As shown in Figure 1, the cloud task arrival queuing model consists of a host queuing model and a virtual machine (VM) queuing model connected in series. It is used to optimize the relationship between the backlog length of the VM cloud task queues and system energy consumption;
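The series (tandem) structure of the two queuing stages can be illustrated with a minimal sketch. It assumes, purely for illustration, that the host stage and the VM stage each behave as a single first-come-first-served server with known per-task service times. The function names `tandem_departures` and `vm_backlog` are hypothetical, and because this excerpt does not specify the energy side of the model, energy is omitted. The sketch uses the standard tandem-queue recursion: a task leaves a stage at the later of its arrival there and the previous task's departure, plus its own service time. It then counts the VM queue backlog at a given time.

```python
from typing import List, Sequence, Tuple


def tandem_departures(
    arrivals: Sequence[float],
    host_service: Sequence[float],
    vm_service: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Departure times from two FIFO single-server stages in series.

    Assumes arrivals are sorted; host_service[i] and vm_service[i] are the
    (hypothetical, known) service times of task i at each stage.
    """
    host_done: List[float] = []
    vm_done: List[float] = []
    prev_host, prev_vm = 0.0, 0.0
    for a, s1, s2 in zip(arrivals, host_service, vm_service):
        # Host stage: start when the task arrives or the host frees up.
        prev_host = max(a, prev_host) + s1
        # VM stage: the task enters the VM queue when it leaves the host stage.
        prev_vm = max(prev_host, prev_vm) + s2
        host_done.append(prev_host)
        vm_done.append(prev_vm)
    return host_done, vm_done


def vm_backlog(host_done: Sequence[float], vm_done: Sequence[float], t: float) -> int:
    """Number of tasks that have reached the VM queue by time t but not finished there."""
    return sum(1 for d1, d2 in zip(host_done, vm_done) if d1 <= t < d2)
```

For example, three tasks arriving together with host service time 1 and VM service time 3 leave the VM stage at times 4, 7 and 10, so at time 3.5 all three are backlogged at the VM.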

[0094] In the host queuing model, after a cloud task is submitted to the data center, the data center applies a least-loaded load balancing strategy and assigns the cloud task to the host with the fewest unfinished cloud task requests. This constitutes a queuing model in which the inter-arrival times of cloud tasks are exponentially distributed an...
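The least-loaded dispatching rule and the exponentially distributed inter-arrival times can be sketched as follows. This is an illustrative sketch, not the patented implementation. The helper names `exponential_arrivals` and `least_loaded_dispatch`, the assumption that each host is a single FIFO server, and the known service times are all hypothetical. Exponential inter-arrival gaps (equivalently, Poisson arrivals) are drawn with Python's `random.expovariate`, and each task goes to the host holding the fewest unfinished tasks at its arrival instant, with ties broken by the lowest host index.

```python
import heapq
import random
from typing import List, Sequence


def exponential_arrivals(rate: float, n: int, seed: int = 0) -> List[float]:
    """Arrival timestamps whose inter-arrival gaps are i.i.d. Exponential(rate)."""
    rng = random.Random(seed)
    t = 0.0
    times: List[float] = []
    for _ in range(n):
        t += rng.expovariate(rate)  # mean gap is 1 / rate
        times.append(t)
    return times


def least_loaded_dispatch(
    arrivals: Sequence[float], service_times: Sequence[float], num_hosts: int
) -> List[int]:
    """Assign each task to the host with the fewest unfinished tasks at arrival.

    Hypothetical model: every host serves its tasks FIFO on one server,
    and service_times[i] is the known service time of task i.
    """
    pending: List[List[float]] = [[] for _ in range(num_hosts)]  # min-heaps of finish times
    last_finish = [0.0] * num_hosts
    assignment: List[int] = []
    for a, s in zip(arrivals, service_times):
        # Drop tasks that have already finished by this arrival time.
        for h in range(num_hosts):
            while pending[h] and pending[h][0] <= a:
                heapq.heappop(pending[h])
        # Least-loaded criterion; min() keeps the first (lowest-index) host on ties.
        h = min(range(num_hosts), key=lambda k: len(pending[k]))
        finish = max(a, last_finish[h]) + s
        last_finish[h] = finish
        heapq.heappush(pending[h], finish)
        assignment.append(h)
    return assignment
```

For example, tasks arriving at times 0, 1 and 2 with service times 5, 0.5 and 0.5 on two hosts are assigned to hosts 0, 1 and 1: the second host has already finished its first task when the third one arrives, while the first host is still busy.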

