Memory management method based on deep learning network

The invention relates to deep learning networks and memory management, and is applied in neural learning methods, biological neural network models, electrical digital data processing, etc. It improves speed and performance while saving memory and other resources.


Embodiment Construction

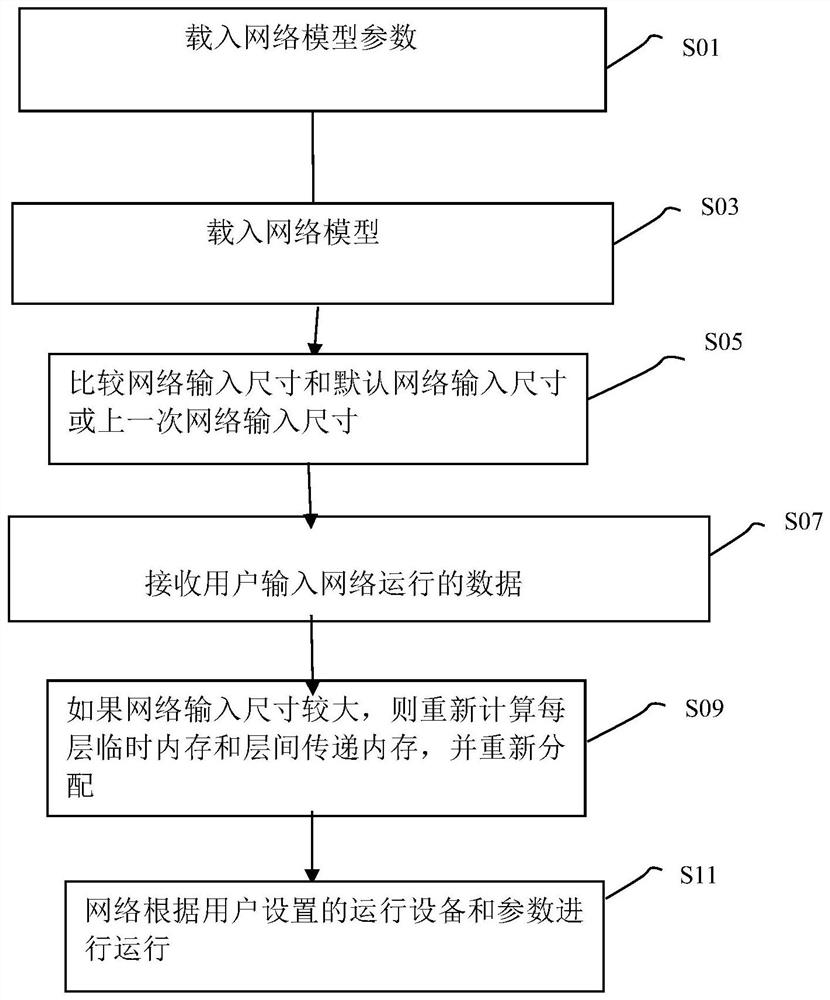

[0029] As shown in Figure 1, a memory management method based on a deep learning network according to an embodiment of the present invention includes:

[0030] S01, load network model parameters;

[0031] S03, load the network model;

[0032] S05, receive the data input by the user for network operation;

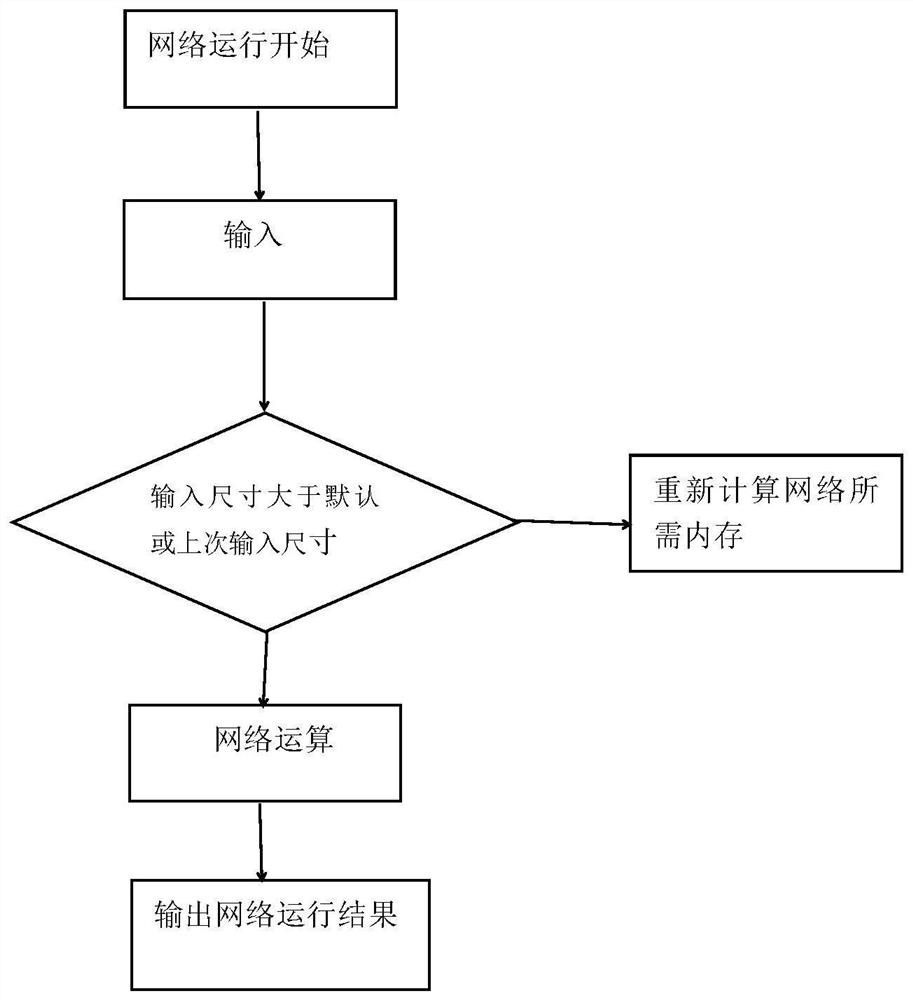

[0033] S07, compare the network input size with the default network input size or the previous network input size;

[0034] S09, if the network input size is larger, recalculate the temporary memory of each layer and the transfer memory between layers, and reallocate them;

[0035] S11, run the network on the device and with the parameters set by the user.

[0036] Further, the above method also includes: if the network input size is smaller, the memory size remains unchanged.
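The comparison and reallocation rule in S07 and S09, together with the unchanged-memory case, can be illustrated with a minimal Python sketch. Everything named here is hypothetical and not taken from the patent text: the `Layer` and `GrowOnlyMemoryManager` classes, the representation of the input size as a single element count, and the assumption that each layer's memory need scales linearly with that count. The sketch also assumes that an input of equal size is treated like a smaller one, and that the comparison is made against the size the buffers were last allocated for.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """Hypothetical layer description (assumed linear memory scaling)."""
    name: str
    temp_bytes_per_elem: int      # scratch memory used inside the layer
    transfer_bytes_per_elem: int  # memory passed on to the next layer


@dataclass
class GrowOnlyMemoryManager:
    """Keeps per-layer buffers and reallocates only when the input grows."""
    layers: list
    default_input_size: int
    allocated_for: int = 0                        # input size the buffers fit
    temp: dict = field(default_factory=dict)      # per-layer temporary memory
    transfer: dict = field(default_factory=dict)  # inter-layer transfer memory
    alloc_count: int = 0                          # number of allocation passes

    def __post_init__(self):
        # Initial allocation for the default network input size.
        self._allocate(self.default_input_size)

    def _allocate(self, input_size):
        # Recalculate and allocate both kinds of memory for every layer.
        for layer in self.layers:
            self.temp[layer.name] = bytearray(
                layer.temp_bytes_per_elem * input_size)
            self.transfer[layer.name] = bytearray(
                layer.transfer_bytes_per_elem * input_size)
        self.allocated_for = input_size
        self.alloc_count += 1

    def prepare(self, input_size):
        """Compare sizes (S07) and reallocate only if the input is larger (S09).

        Returns True if memory was reallocated, False if it was reused.
        """
        if input_size > self.allocated_for:
            self._allocate(input_size)
            return True
        return False  # smaller or equal input: memory size stays unchanged


layers = [Layer("conv1", 4, 8), Layer("fc", 2, 1)]
mgr = GrowOnlyMemoryManager(layers, default_input_size=100)
mgr.prepare(50)    # smaller input: existing buffers are reused
mgr.prepare(200)   # larger input: buffers are recalculated and reallocated
```

Under these assumptions the buffers never shrink, so a run of inputs with varying sizes triggers allocation only when a new maximum is seen, which is one plausible reading of how the method avoids repeated allocation.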

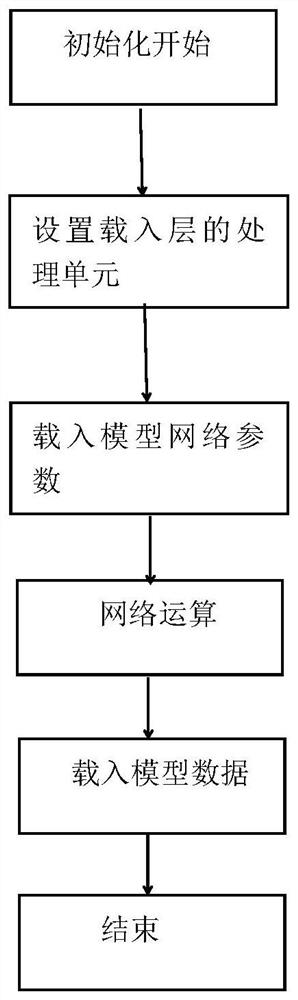

[0037] Further, the above method also includes: setting the processing unit of the network model according to the user's choice, where the setting method includes any one of the following:...
