Robot positioning and grabbing method and system based on laser visual guidance

A robot positioning and visual guidance technology, applied in the field of robotics, that addresses the problem of being unable to support the bottom of objects and offers a reasonable structure that is easy to promote and use.

Embodiment Construction

[0032] The technical solutions in the embodiments of the present invention are described clearly and completely below with reference to the accompanying drawings. The described embodiments are only some, not all, of the embodiments of the present invention.

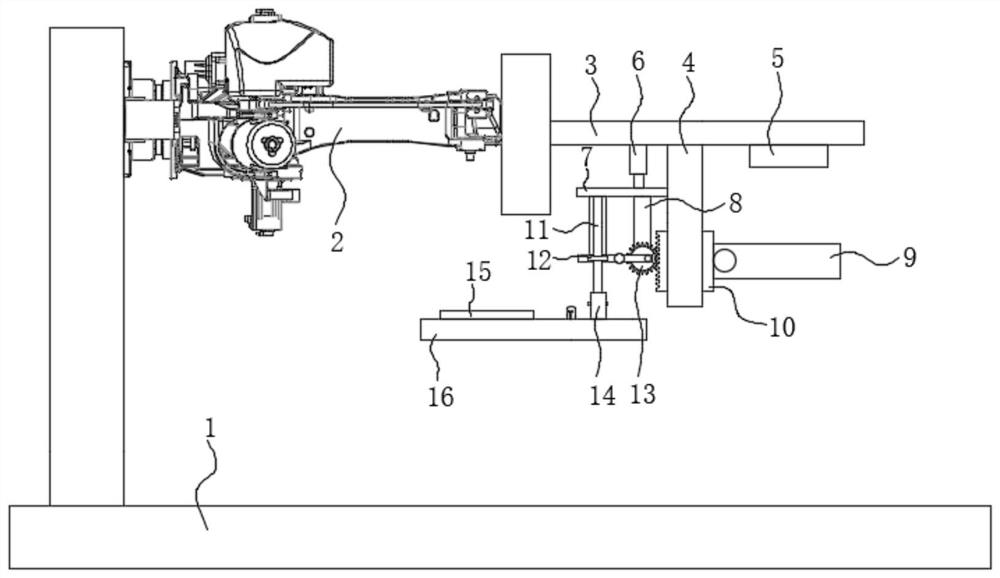

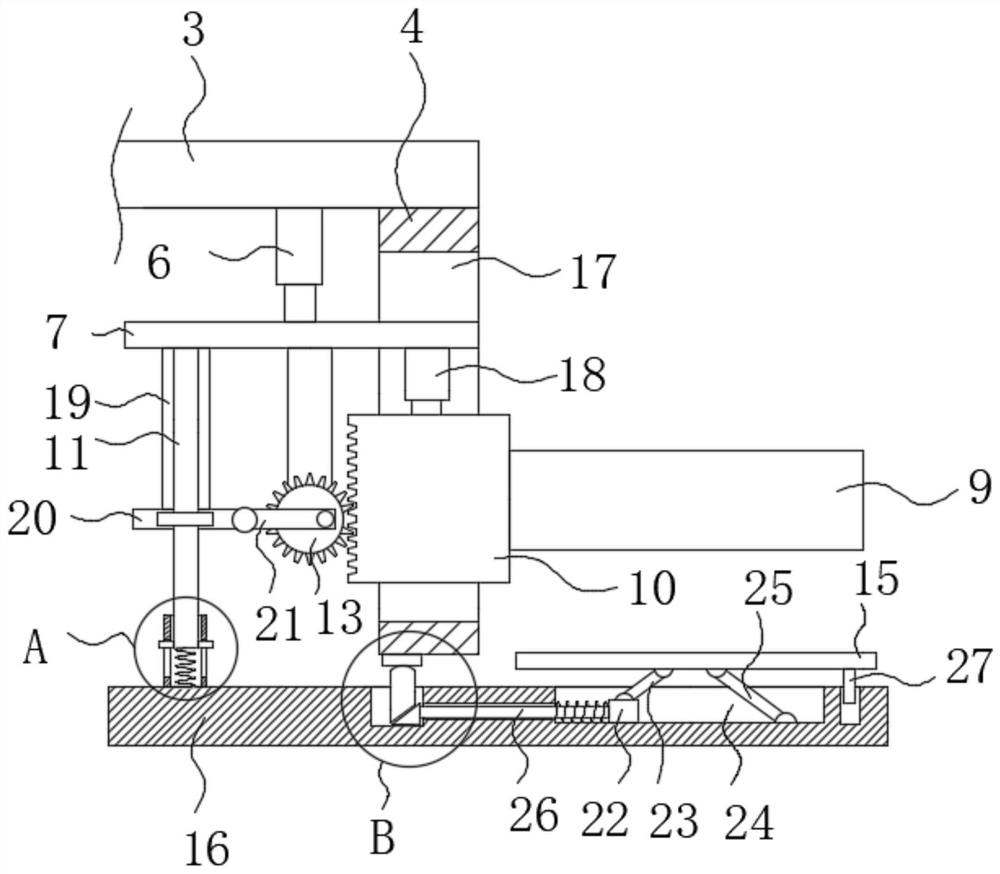

[0033] Referring to Figures 1-7, a robot positioning system based on laser vision guidance includes a base 1, a host computer 37 installed on the top of the base 1, a robot arm 2, and a grabbing mechanism installed on the robot arm 2. The output end of the host computer 37 is electrically connected to the input end of the robot arm 2 and to the input end of the grabbing mechanism.

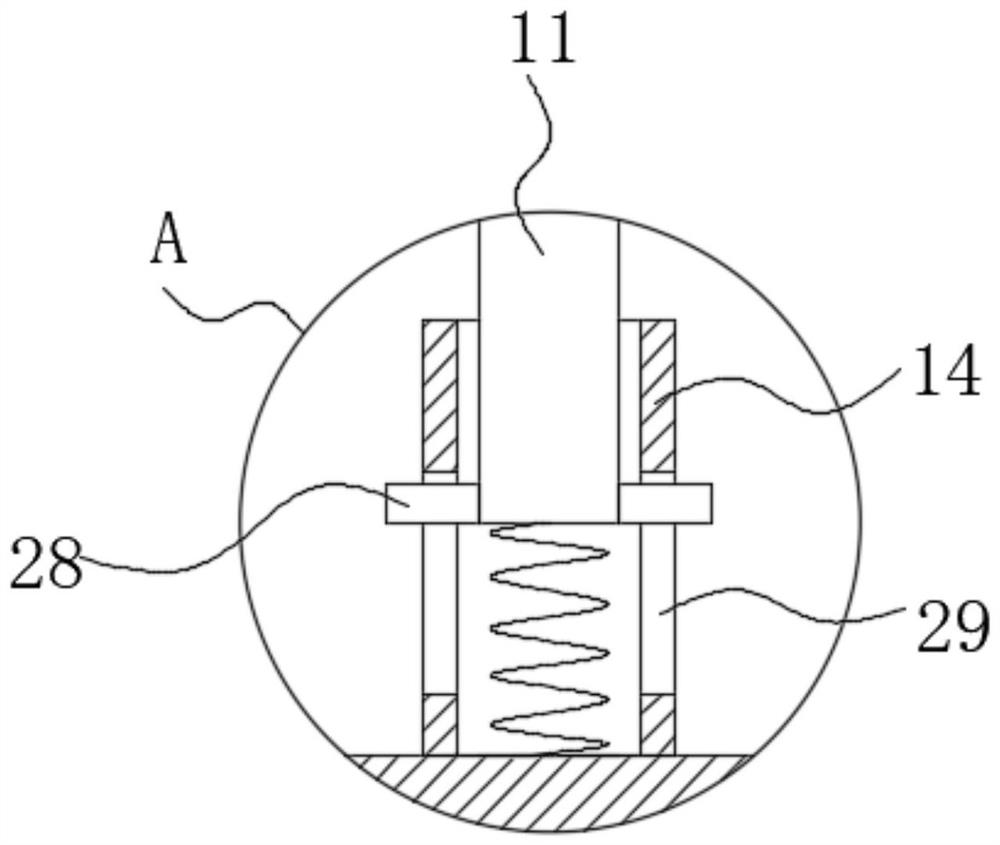

[0034] The grabbing mechanism includes a top plate 3 installed on the robot arm 2, a visual positioning device 5 installed on the bottom of the top plate 3, a fixed plate 4, and a mounting plate 10 movably installed on the fixed plate 4. The positioning device 5 ...
