Human body posture estimation method and recognition device

A human body posture estimation method and recognition device, applied in the field of human-computer interaction, which address the loss of effective information, the poor real-time performance of posture estimation algorithms, and the low degree of visualization of recognition results.

Examples

Embodiment 1

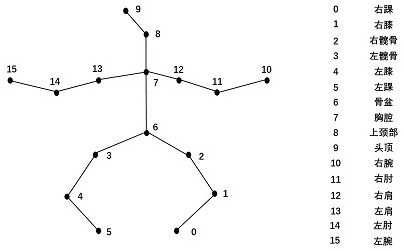

[0031] An embodiment of the present disclosure provides a human body pose estimation method.

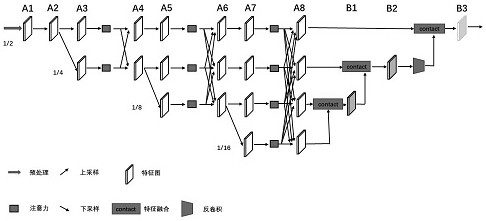

[0032] Specifically, referring to Figure 1, the human pose estimation method comprises:

[0033] Step 1. Pass the preprocessed image into the high-resolution network; after downsampling, upsampling, and convolution operations, feature maps are generated in each branch of the network;

[0034] In this embodiment, a high-resolution network model is constructed and divided into a 1/2 resolution layer, a 1/4 resolution layer, a 1/8 resolution layer, and a 1/16 resolution layer. The 1/2 resolution layer is the backbone layer; its resolution is half that of the input image, which allows it to handle pose estimation for small human targets in the image. The other three resolution layers are the branch layers of the network. The 1/2 resolution layer is down-sampled to obtain the 1/4 resolution layer, the 1/4 resolution layer is down-sampled to obtain the 1/8 resolu...
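The relationship between the four resolution layers can be illustrated with a minimal Python sketch. It is not the disclosed network: the 2x2 average-pooling operator, the function names `branch_resolutions`, `downsample_2x`, and `build_pyramid`, and the assumption that the down-sampling chain continues from the 1/8 layer to the 1/16 layer are all hypothetical choices made for illustration, and the convolution and upsampling operations of Step 1 are omitted.

```python
from fractions import Fraction

# Scales named in the embodiment: 1/2 is the backbone layer, the rest are branches.
SCALES = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 8), Fraction(1, 16)]


def branch_resolutions(height, width):
    """Return {scale: (h, w)} for each resolution layer of an input image."""
    if height % 16 or width % 16:
        raise ValueError("this sketch assumes dimensions divisible by 16")
    return {s: (int(height * s), int(width * s)) for s in SCALES}


def downsample_2x(feature_map):
    """Halve a 2-D feature map by averaging each 2x2 block.

    Average pooling is an assumed operator; the source does not say
    which down-sampling operation the network uses.
    """
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            (feature_map[r][c] + feature_map[r][c + 1]
             + feature_map[r + 1][c] + feature_map[r + 1][c + 1]) / 4.0
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


def build_pyramid(half_res_map, levels=4):
    """Derive the branch maps from the 1/2-resolution backbone map
    by repeated 2x down-sampling (assumed chain: 1/2 -> 1/4 -> 1/8 -> 1/16)."""
    pyramid = [half_res_map]
    for _ in range(levels - 1):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid
```

For example, `branch_resolutions(256, 192)` maps the 1/2 layer to 128 x 96 and the 1/16 layer to 16 x 12, so each branch holds a quarter as many spatial positions as the layer above it.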

Embodiment 2

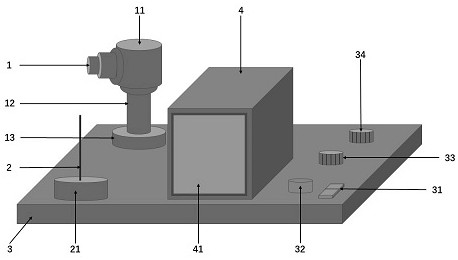

[0044] An embodiment of the present disclosure provides a human body pose estimation and recognition device.

[0045] Specifically, referring to Figure 2, the human body posture estimation and recognition device includes: 1. camera; 11. compensation light source; 12. lifter; 13. lifting platform; 2. receiving antenna; 21. receiver; power indicator light; 33. elevation adjustment knob; 34. angle adjustment knob; 4. main control box; 41. display screen.

[0046] In this embodiment, the camera 1 and the compensation light source 11 are placed immediately above the lifter 12, and the receiver 21 faces the lifter 12. The receiver 21 and the lifter 12 are installed on the left end of the base bracket 3, the main control box 4 is located in the middle, directly above the base bracket 3, and the adjustment knob is above the right end of the base bracket 3. The camera 1 is used to capture external portrait information and take pictures, and the adjustment knob is used to adju...
