Parallel sentence pair extraction method based on a pre-trained language model and bidirectional interactive attention

A parallel sentence pair extraction technology based on a language model, applied in neural network learning methods, biological neural network models, semantic analysis, and related fields. It addresses problems such as the lack of training data and improves prediction results.

Examples

Embodiment 1

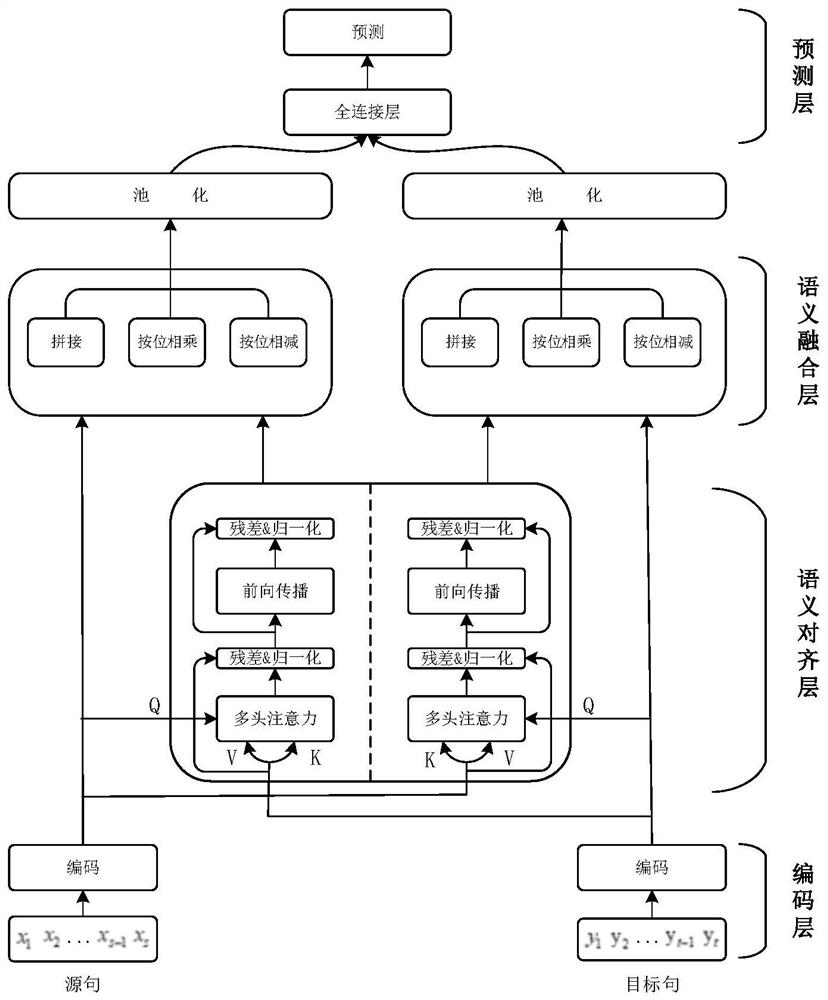

[0046] Embodiment 1: As shown in Figure 1, the specific steps of the parallel sentence pair extraction method based on a pre-trained language model and bidirectional interactive attention are as follows:

[0047] Step1. Collect and construct Chinese-Vietnamese parallel data using web crawler technology, obtain non-parallel data through negative sampling, and manually annotate the data set to obtain a Chinese-Vietnamese comparable corpus data set. The main sources of Chinese-Vietnamese parallel data include Wikipedia, bilingual news websites, movie subtitles, and more.
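Step1 does not say how the negative samples are drawn. The sketch below assumes the simplest scheme: each Chinese sentence is paired with a Vietnamese sentence taken at random from a *different* parallel pair, and the result is labeled 0 (non-parallel) next to the original pair labeled 1. The function name `build_labeled_pairs`, its parameters, and the example sentences are hypothetical and not taken from the patent.

```python
import random

def build_labeled_pairs(parallel_pairs, negatives_per_positive=1, seed=0):
    """Hypothetical helper: turn (zh, vi) parallel pairs into (zh, vi, label) triples.

    label 1 = crawled parallel pair (positive sample)
    label 0 = randomly mismatched pair (negative sample)
    """
    if len(parallel_pairs) < 2:
        raise ValueError("need at least two parallel pairs to sample negatives")
    rng = random.Random(seed)  # seeded so the sampled data set is reproducible
    labeled = []
    for i, (zh, vi) in enumerate(parallel_pairs):
        labeled.append((zh, vi, 1))
        for _ in range(negatives_per_positive):
            # Draw an index uniformly from every position except i, so the
            # Vietnamese side never comes from the sentence's own pair.
            j = rng.randrange(len(parallel_pairs) - 1)
            if j >= i:
                j += 1
            labeled.append((zh, parallel_pairs[j][1], 0))
    rng.shuffle(labeled)  # mix positives and negatives before training
    return labeled

# Made-up illustrative pairs, not data from the patent.
example_pairs = [
    ("我喜欢猫。", "Tôi thích mèo."),
    ("今天很热。", "Hôm nay trời nóng."),
    ("他在读书。", "Anh ấy đang đọc sách."),
]
example_data = build_labeled_pairs(example_pairs, negatives_per_positive=1)
```

Random pairing can occasionally produce a pair that is in fact parallel, for example when the crawled corpus contains near-duplicate sentences, so sampled negatives still benefit from the manual annotation described in Step1.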

[0048] As a further solution of the present invention, the specific sub-steps of Step1 are:

[0049] Step1.1. Obtain Chinese-Vietnamese parallel data through web crawler technology; data sources include Wikipedia, bilingual news websites, movie subtitles, etc.;

[0050] Step1.2. After cleaning and alignment, the crawled data are used as positive samples for model training. In order to main...
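The patent does not list its cleaning rules. As a minimal sketch, assuming a few common corpus-cleaning heuristics (Unicode NFC normalization, whitespace collapsing, dropping empty sides, a length-ratio filter, and exact de-duplication), a cleaning pass over crawled (zh, vi) pairs might look like this. The function `clean_parallel_pairs`, its thresholds, and the length proxy (Chinese characters versus space-separated Vietnamese syllables) are all hypothetical choices.

```python
import re
import unicodedata

def _normalize(text):
    # NFC makes Vietnamese diacritics typed as combining marks equal to their
    # precomposed forms; then collapse runs of whitespace and trim the ends.
    return re.sub(r"\s+", " ", unicodedata.normalize("NFC", text)).strip()

def clean_parallel_pairs(raw_pairs, min_len=1, max_ratio=3.0):
    """Hypothetical cleaning pass; returns the surviving (zh, vi) pairs in order."""
    seen = set()
    cleaned = []
    for zh, vi in raw_pairs:
        zh, vi = _normalize(zh), _normalize(vi)
        # Assumed length proxies: non-space Chinese characters vs. Vietnamese
        # syllables (whitespace tokens), which tend to have comparable counts.
        zh_len = len(zh.replace(" ", ""))
        vi_len = len(vi.split())
        if min(zh_len, vi_len) < max(min_len, 1):
            continue  # a side is empty or too short after cleaning
        if max(zh_len, vi_len) / min(zh_len, vi_len) > max_ratio:
            continue  # lengths too mismatched to be a plausible translation
        if (zh, vi) in seen:
            continue  # exact duplicate of a pair already kept
        seen.add((zh, vi))
        cleaned.append((zh, vi))
    return cleaned
```

The surviving pairs would then serve as the positive samples; any real thresholds would need tuning on the actual crawled data.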
