Cooperative localization method based on multi-modal map

A cooperative positioning technology based on multi-modal maps, applied in the fields of electromagnetic-wave re-radiation, measuring devices, and instruments. It addresses the large matching error, low precision, and strong dependence on lighting conditions of existing methods, and achieves precise positioning.


Embodiment Construction

[0027] To achieve more accurate positioning and navigation of unmanned vehicles, the present invention fuses the different environmental information obtained by lidar and visual-camera scanning, combining the fast matching of lidar with the accurate matching of visual markers, and builds a global map containing multiple types of observation data, thereby achieving precise positioning and navigation of unmanned vehicles in the environment.
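One way to read the fusion described in [0027] is as a coarse-to-fine scheme: lidar matching quickly yields an approximate pose, and a visual marker with a known map position, when one is in view, corrects it. The minimal sketch below assumes a 2D pose, a map whose visual layer stores marker positions in the map frame, and a camera that reports a marker's position in the vehicle frame. `Pose2D`, `MultiModalMap` and `refine_with_marker` are hypothetical names chosen for illustration; this is not the patent's disclosed method, and it simply keeps the lidar heading rather than performing a probabilistic fusion.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Pose2D:
    x: float
    y: float
    theta: float  # heading in radians, map frame


@dataclass
class MultiModalMap:
    # Hypothetical geometric layer: lidar features (e.g. corners, planes) as 2D points.
    geometric_features: list = field(default_factory=list)
    # Hypothetical visual layer: marker id -> (x, y) position in the map frame.
    visual_markers: dict = field(default_factory=dict)


def refine_with_marker(coarse: Pose2D, marker_id, rel_x: float, rel_y: float,
                       mm_map: MultiModalMap) -> Pose2D:
    """Refine a coarse lidar pose using one observed visual marker.

    rel_x, rel_y: the marker's position as measured by the camera, in the
    vehicle frame. The lidar heading is kept; only the position is recomputed.
    """
    if marker_id not in mm_map.visual_markers:
        return coarse  # no visual fix available: fall back to the lidar estimate
    mx, my = mm_map.visual_markers[marker_id]
    c, s = math.cos(coarse.theta), math.sin(coarse.theta)
    # marker_map = vehicle_pos + R(theta) @ rel  =>  vehicle_pos = marker_map - R(theta) @ rel
    x = mx - (c * rel_x - s * rel_y)
    y = my - (s * rel_x + c * rel_y)
    return Pose2D(x, y, coarse.theta)
```

For example, with marker 7 stored at (10, 5), a lidar pose of (8.3, 4.8, 0) and the marker seen 2 m straight ahead, the refined position is (8, 5). A real system would weight both sources by their uncertainties (e.g. in a Kalman filter) instead of overwriting the lidar position.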

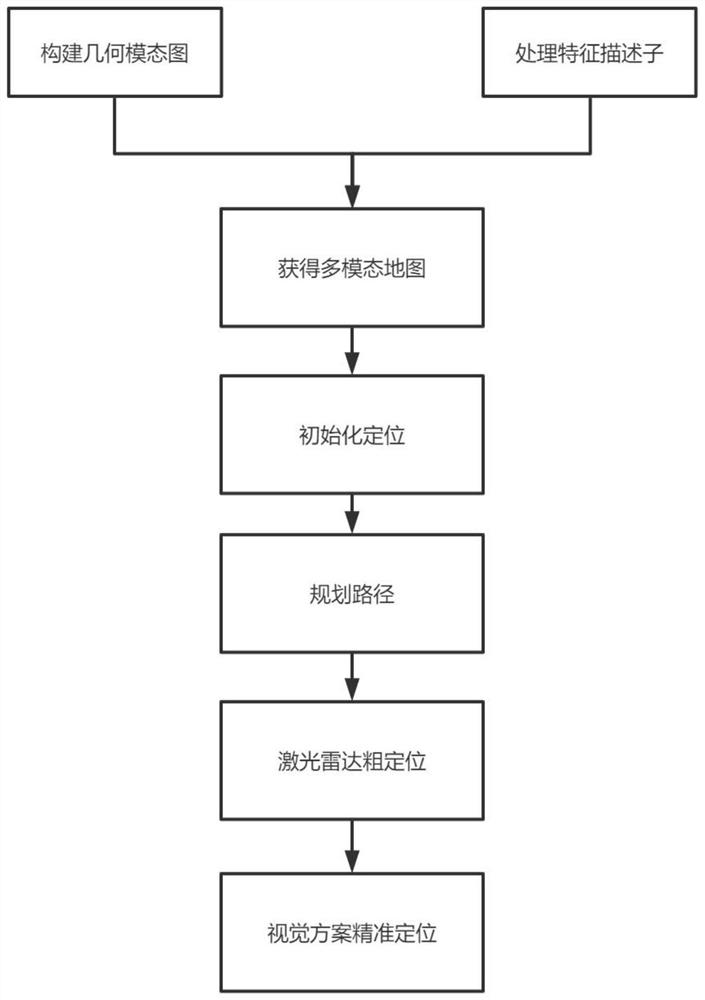

[0028] As shown in the implementation flowchart of Figure 1, the co-location method based on multi-modal maps comprises the following steps:

[0029] Step 1: Scan the scene with the lidar to obtain scene information, and construct the geometric-mode map of the scene;

[0030] The unmanned vehicle, equipped with a lidar, moves within the site and scans the whole scene with the lidar to obtain environmental information about its current location, and the geometric information of the surrounding corners, plane...
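The text is truncated before it explains how corners and planes are extracted from the scan. A common technique for this, borrowed here from LOAM-style lidar odometry and not confirmed by the source, scores each point $p_i$ on an ordered scan line by local curvature $c_i = \lVert \sum_{j \in S, j \ne i} (p_j - p_i) \rVert / (|S| \cdot \lVert p_i \rVert)$ over $k$ neighbours on each side ($|S| = 2k$): high curvature suggests a corner (edge) point, near-zero curvature a planar point. The function name `classify_scan_features` and the threshold values are hypothetical and would need tuning on real data.

```python
import math


def classify_scan_features(points, k=5, edge_thresh=0.02, plane_thresh=0.005):
    """Label each point of one ordered lidar scan line as 'edge', 'plane' or None.

    points: list of (x, y, z) tuples in sensor frame, ordered along the scan.
    Points within k samples of either end have no full neighbourhood and stay None.
    """
    n = len(points)
    labels = [None] * n
    for i in range(k, n - k):
        px, py, pz = points[i]
        dx = dy = dz = 0.0
        # Sum of offsets from the 2k neighbours to the current point.
        for j in range(i - k, i + k + 1):
            if j == i:
                continue
            dx += points[j][0] - px
            dy += points[j][1] - py
            dz += points[j][2] - pz
        rng = math.sqrt(px * px + py * py + pz * pz)
        if rng == 0.0:
            continue  # degenerate return at the sensor origin
        c = math.sqrt(dx * dx + dy * dy + dz * dz) / (2 * k * rng)
        if c > edge_thresh:
            labels[i] = "edge"
        elif c < plane_thresh:
            labels[i] = "plane"
    return labels
```

On a straight wall the neighbour offsets cancel and every interior point is labelled `"plane"`; at the vertex of an L-shaped corner they do not, and the point is labelled `"edge"`. LOAM additionally keeps only the strongest few features per scan sector; that selection step is omitted here.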

