Adversarial attack method and system for generating uniformly distributed disturbance by taking pulse as probability

An adversarial technique based on uniformly distributed disturbance, applied in neural learning methods, biological neural network models, instruments, etc. It addresses the problems of a large number of pixels, the extreme limitations of generated samples that do not consider adversarial attacks, and insufficient utilization, and achieves reduced complexity, improved invisibility, and improved performance.


Embodiment Construction

[0074] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

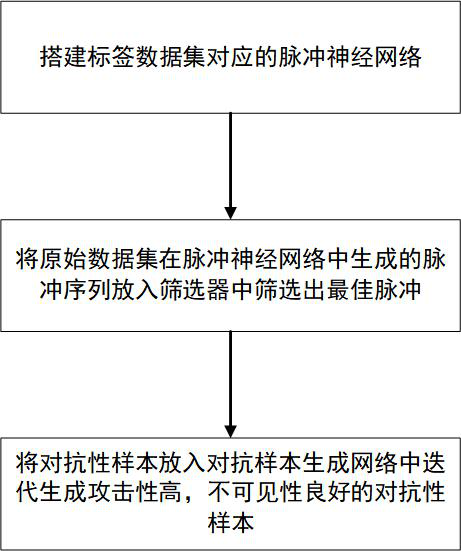

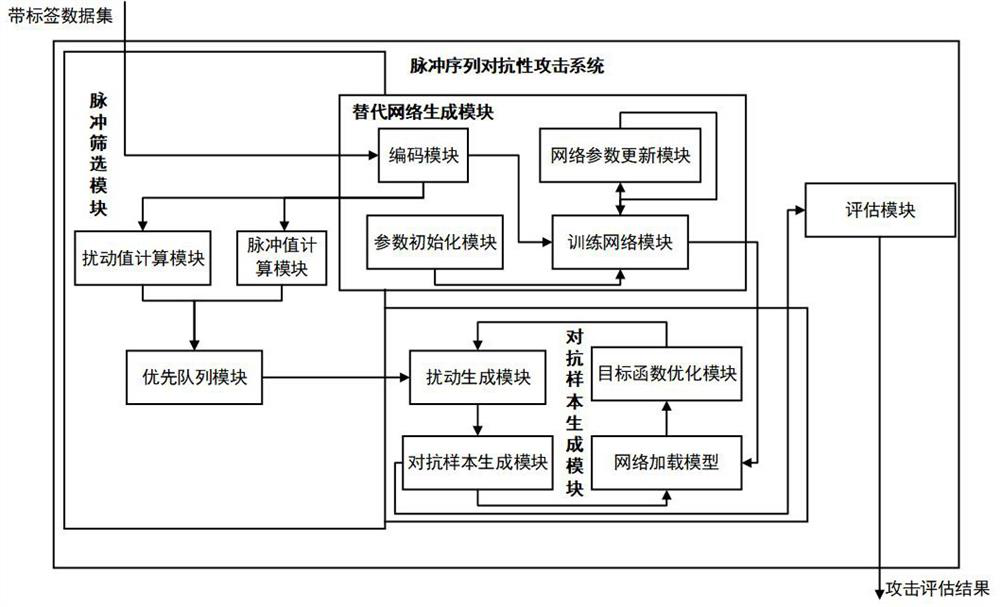

[0075] Referring to Figure 1, the present invention provides an adversarial attack method that uses pulses as probabilities to generate uniformly distributed disturbances. As shown in Figure 1, the method includes the following steps:

[0076] Step A: First preprocess the input image X, then use Poisson encoding in the autoencoder $F_{enc}$ of the spiking neural network to convert it into a sequence in pulse data format, $\gamma = (\gamma_1, \gamma_2, \ldots, \gamma_T)$, where T is the number of simulation time steps;

[0077] Step A1: Perform min-max normalization on the input data X of the spiking neural network, that is, normalize the value of each original pixel to $x \in [0,1]$;

[0078] Step A2: Set the number of time steps T, and at each time step set the pulse emission probability to $p = x$, thereby generating a pulse sequence γ of length T.
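Steps A1 and A2 can be illustrated with a minimal sketch. It is not the patent's implementation: the function names `min_max_normalize` and `poisson_encode`, the flattened-list image representation, and the handling of a constant image are all assumptions made for illustration. The sketch treats each time step as an independent Bernoulli draw with probability p = x, which is one common way to realize Poisson (rate) encoding.

```python
import random

def min_max_normalize(pixels):
    """Step A1 (sketch): rescale raw pixel values to the range [0, 1]."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        # Assumption: a constant image maps to all zeros to avoid dividing by zero.
        return [0.0 for _ in pixels]
    return [(v - lo) / (hi - lo) for v in pixels]

def poisson_encode(x, T, rng=None):
    """Step A2 (sketch): for each of T time steps, emit a pulse (1) for each
    pixel with probability p = x, otherwise emit 0.

    Returns a list of T frames; each frame has one 0/1 entry per pixel.
    """
    rng = rng or random.Random()
    return [[1 if rng.random() < p else 0 for p in x] for _ in range(T)]

if __name__ == "__main__":
    raw = [0, 64, 128, 255]          # hypothetical flattened grayscale pixels
    x = min_max_normalize(raw)
    gamma = poisson_encode(x, T=1000, rng=random.Random(0))
    # The empirical firing rate of each pixel should approach its normalized value.
    rates = [sum(frame[i] for frame in gamma) / len(gamma) for i in range(len(x))]
    print("normalized:", x)
    print("firing rates:", rates)
```

Under this scheme, averaging the pulse sequence over the T time steps gives an estimate of the normalized pixel intensity, so a larger T yields a more faithful encoding at the cost of a longer simulation.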

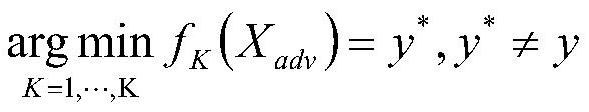

[0079] Step B: Based on the...
