Positioning mapping method and system based on UWB and visual SLAM fusion algorithm

The invention combines a fusion algorithm with vision technology and applies to location-based services, navigation, instruments, and related fields. It addresses problems that degrade positioning accuracy and reduces cumulative positioning error.


Embodiment 1

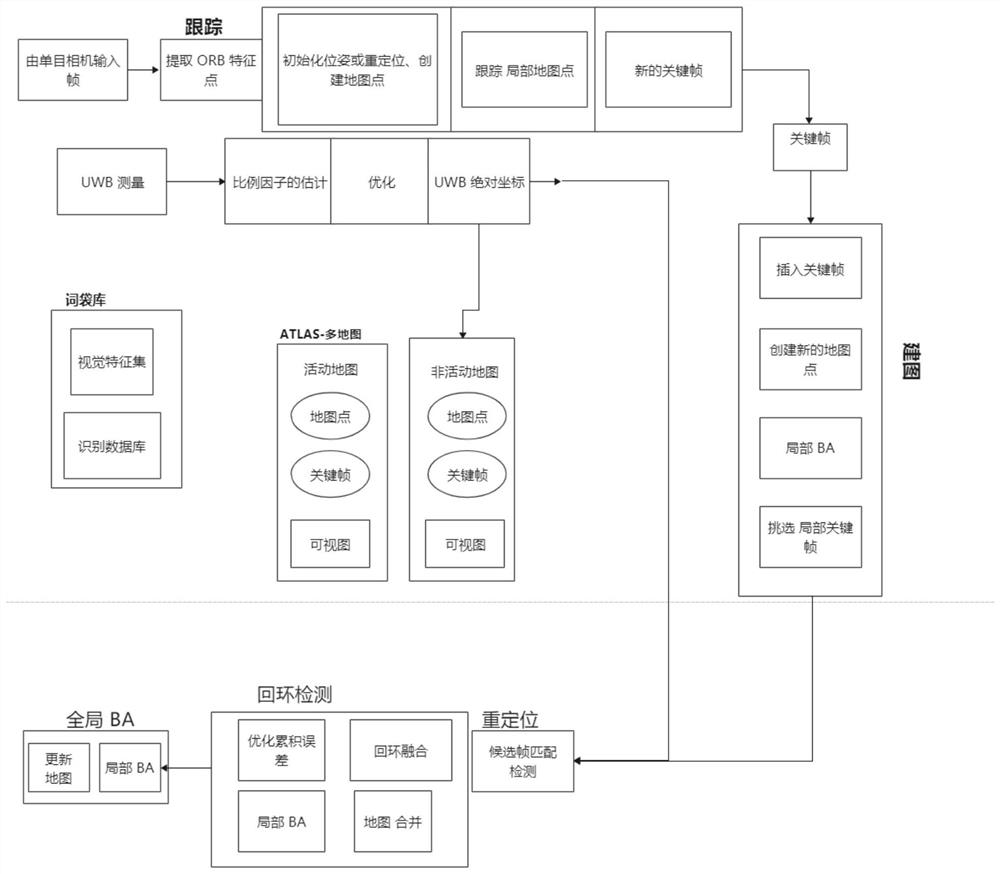

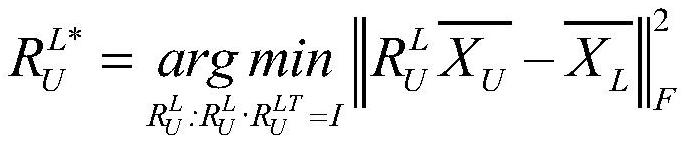

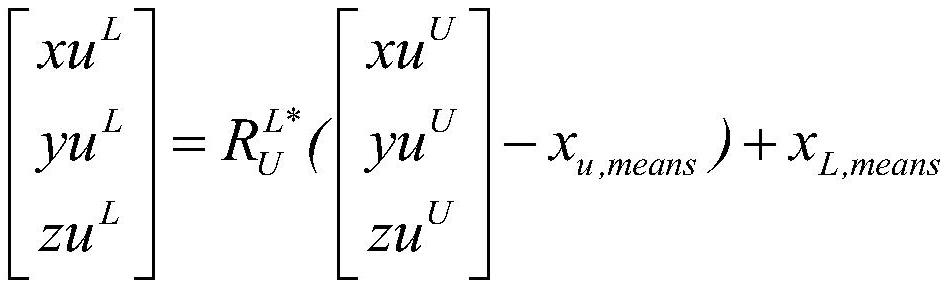

[0048] As shown in Figure 1, Embodiment 1 of the present invention proposes a positioning and mapping method that fuses UWB and camera sensors. The method includes the following steps:

[0049] 1. Establish a world coordinate system with the initial position of the mobile robot as the origin and its initial orientation as the X axis. The UWB positioning tag is located at the origin of the body frame, which is the center of gravity of the rear axle, and the UWB positioning anchors are on the ground. While the mobile robot moves, the UWB signals transmitted by the UWB positioning tag and the UWB positioning anchors are acquired in real time. The classical MDS algorithm (multidimensional scaling, a dimensionality-reduction algorithm) initializes the first UWB position, and the EKF algorithm (extended Kalman filter) then updates the UWB coordinates. During the operation of the trolley, the camera runs in re...
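The EKF update of the UWB coordinates described in [0049] can be illustrated with a minimal sketch. The source does not give the filter's state or noise model, so everything specific here is an assumption: a 2D position state, a random-walk motion model, one range measurement per anchor with independent noise, and the hypothetical names `ekf_range_update` and `ekf_step`. Because the range noise is assumed independent, the ranges are applied one at a time as scalar updates, which avoids a matrix inverse.

```python
import math

def ekf_range_update(x, P, anchor, measured_range, range_var):
    """Scalar EKF measurement update for one tag-to-anchor range.

    x is the 2D position estimate [x, y]; P is its 2x2 covariance.
    The measurement model h(x) = ||x - anchor|| is an assumption.
    """
    dx = x[0] - anchor[0]
    dy = x[1] - anchor[1]
    predicted = math.hypot(dx, dy)
    if predicted < 1e-9:
        return x, P  # Jacobian is undefined at the anchor itself; skip
    # Jacobian of the range with respect to the position (unit vector)
    H = (dx / predicted, dy / predicted)
    # P * H^T
    PHt = (P[0][0] * H[0] + P[0][1] * H[1],
           P[1][0] * H[0] + P[1][1] * H[1])
    # Innovation variance and Kalman gain
    S = H[0] * PHt[0] + H[1] * PHt[1] + range_var
    K = (PHt[0] / S, PHt[1] / S)
    innovation = measured_range - predicted
    x_new = [x[0] + K[0] * innovation, x[1] + K[1] * innovation]
    # Covariance update: P_new = (I - K H) P
    HP = (H[0] * P[0][0] + H[1] * P[1][0],
          H[0] * P[0][1] + H[1] * P[1][1])
    P_new = [[P[0][0] - K[0] * HP[0], P[0][1] - K[0] * HP[1]],
             [P[1][0] - K[1] * HP[0], P[1][1] - K[1] * HP[1]]]
    return x_new, P_new

def ekf_step(x, P, anchors, ranges, process_var=0.01, range_var=0.01):
    """One predict-then-update cycle under an assumed random-walk model."""
    # Predict: position unchanged, uncertainty grows by the process noise
    P = [[P[0][0] + process_var, P[0][1]],
         [P[1][0], P[1][1] + process_var]]
    # Update: fold in each anchor range sequentially
    for anchor, r in zip(anchors, ranges):
        x, P = ekf_range_update(x, P, anchor, r, range_var)
    return x, P

if __name__ == "__main__":
    # Hypothetical anchor layout and tag position, for illustration only
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
    true_pos = (3.0, 4.0)
    ranges = [math.hypot(true_pos[0] - a[0], true_pos[1] - a[1])
              for a in anchors]
    x, P = [5.0, 5.0], [[4.0, 0.0], [0.0, 4.0]]
    for _ in range(30):
        x, P = ekf_step(x, P, anchors, ranges)
    print(x)
```

In the method as described, the initial estimate would come from the MDS initialization rather than from a fixed guess, and a real system would likely use a richer state (for example, including velocity or heading) than this sketch assumes.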

Embodiment 2

[0085] Embodiment 2 of the present invention proposes a positioning and mapping system based on the fusion algorithm of UWB and visual SLAM, implemented on the basis of the method of Embodiment 1. The system includes an acquisition module, a key frame extraction module, a coordinate system conversion module, and a detection and mapping module, wherein:

[0086] The acquisition module is used to obtain, in real time, the UWB signals transmitted by the UWB positioning tag and the UWB positioning anchors, obtain the coordinates of the moving vehicle in the UWB coordinate system, synchronously acquire the images containing environmental information collected by the camera, and establish the camera coordinate system;

[0087] The key frame extraction module is used to process the images containing the environmental information, calculate the inter-frame motion from the feature point matches between frames, extract the feature points, and select the image satisfying...
