Transformer algorithm-based single-modal label generation and multi-modal emotion discrimination method

A single-modal and multi-modal technology, applied in character and pattern recognition, computing, and computer components, that addresses the time-consuming and laborious manual labeling of single-modal labels and improves the model's understanding and generalization ability.


Detailed Description of Embodiments

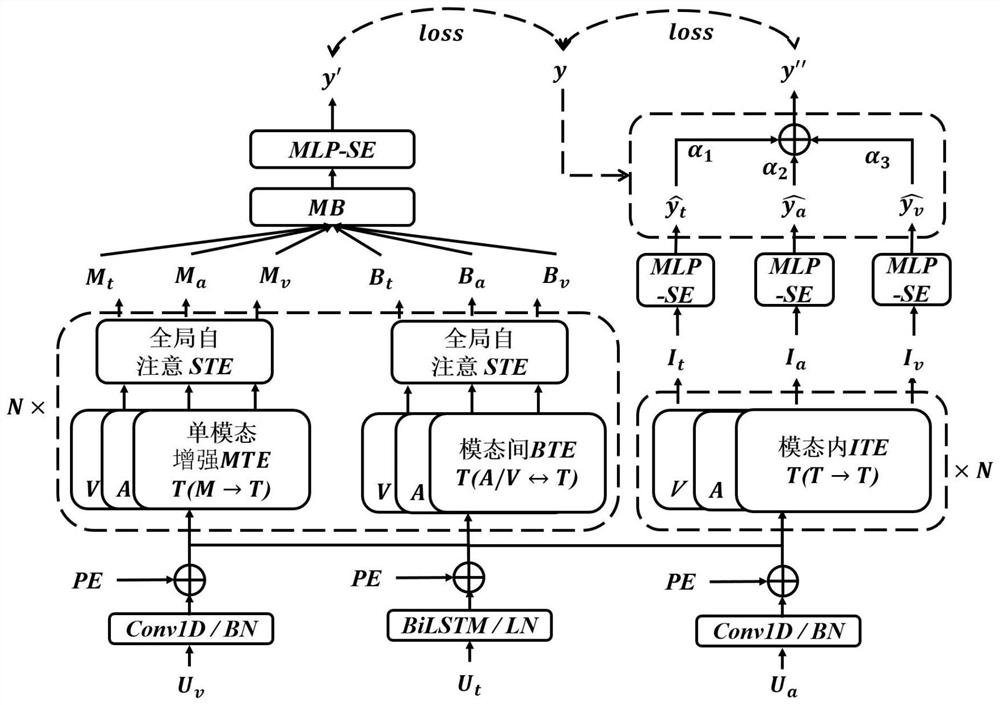

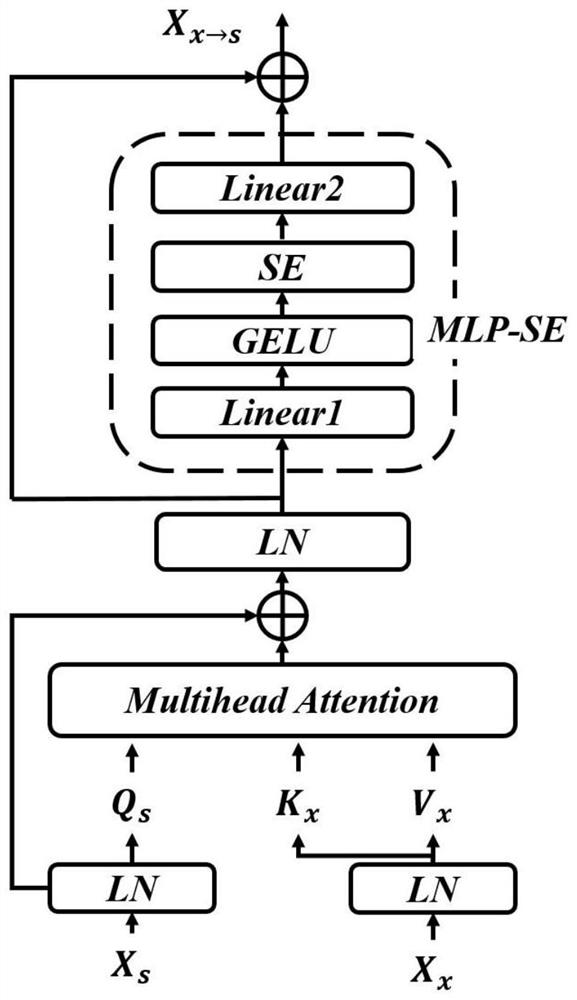

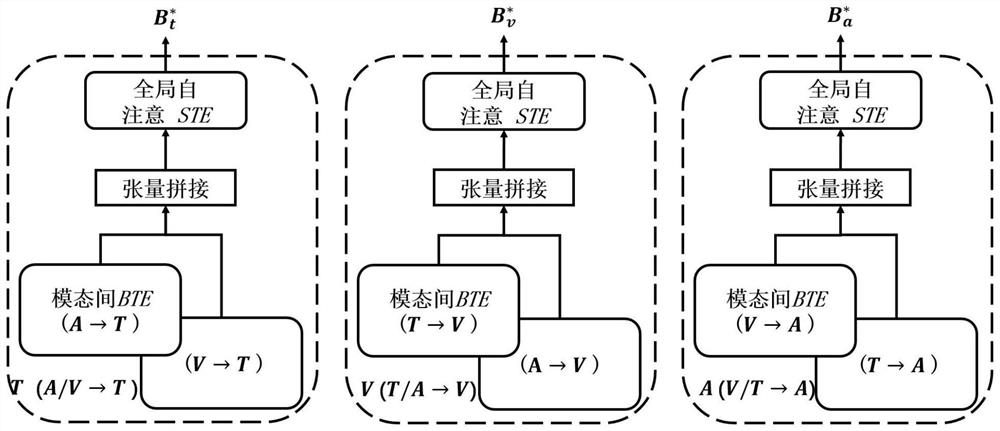

[0069] This embodiment provides a single-modal label generation and multi-modal emotion discrimination method based on the Transformer algorithm. The overall algorithm flow is shown in Figure 1 and comprises the following steps: first, obtain a multi-modal non-aligned dataset and preprocess it to obtain the embedded feature representations of each modality; next, build the ITE network modules to extract intra-modal features; combine single-modal label prediction with multi-modal fusion to generate the discriminative labels for multi-modal emotion decision-making; build the inter-modal BTE network module and the modality-enhancement MTE network module, and pass the resulting inter-modal features and modality-enhanced features through the global self-attention STE network module to obtain the deep-prediction labels of the multi-modal emotion; finally, carry out iterative training with the designed loss function. Specifically, it is characterized in that it proceeds in the f...
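The data flow of [0069] can be illustrated with a toy forward pass. This is a minimal sketch under stated assumptions, not the patented implementation. The roles of ITE, BTE, MTE and STE (intra-modal, inter-modal, modality enhancement, global self-attention) follow the text, but their internals here are assumptions: learned Q/K/V projections are replaced by identity maps, BTE is modeled as cross-modal attention, MTE is assumed to be a residual sum, and the single-modal label rule `fuse_label` (with weight `alpha`) and the linear `head` are hypothetical. The loss function is omitted because the excerpt does not specify it. Cross-modal attention is shown because it lets sequences of different lengths (non-aligned data) interact without explicit time alignment.

```python
import math
import random

Matrix = list[list[float]]  # rows = time steps, columns = feature dimensions


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def softmax_rows(m: Matrix) -> Matrix:
    out = []
    for row in m:
        peak = max(row)  # subtract the row max for numerical stability
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention; len(q) may differ from len(k) == len(v)."""
    scale = math.sqrt(len(q[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)


def ite(x: Matrix) -> Matrix:
    # Intra-modal encoder (ITE): self-attention within one modality plus a residual.
    return add(x, attention(x, x, x))


def bte(target: Matrix, source: Matrix) -> Matrix:
    # Inter-modal encoder (BTE), ASSUMED to be cross-modal attention:
    # target steps query source steps, so the two need not be time-aligned.
    return attention(target, source, source)


def mte(intra: Matrix, inter: Matrix) -> Matrix:
    # Modality enhancement (MTE): ASSUMED residual sum of intra- and inter-modal features.
    return add(intra, inter)


def ste(seqs: list[Matrix]) -> Matrix:
    # Global self-attention (STE) over all modalities concatenated along time.
    joint = [row for s in seqs for row in s]
    return attention(joint, joint, joint)


def predict(features: Matrix, head: list[float]) -> float:
    # HYPOTHETICAL linear head on mean-pooled features -> scalar emotion score.
    pooled = [sum(col) / len(features) for col in zip(*features)]
    return float(sum(p * w for p, w in zip(pooled, head)))


def fuse_label(unimodal_pred: float, multimodal_label: float, alpha: float = 0.5) -> float:
    # HYPOTHETICAL single-modal label generation: blend the modality's own
    # prediction with the annotated multi-modal label.
    return alpha * unimodal_pred + (1 - alpha) * multimodal_label


def forward(modalities: dict[str, Matrix], head: list[float]):
    """Toy forward pass; needs at least two modalities with equal feature width."""
    intra = {m: ite(x) for m, x in modalities.items()}
    uni_preds = {m: predict(h, head) for m, h in intra.items()}
    enhanced = []
    for m, h in intra.items():
        # Each modality attends to the time steps of all other modalities.
        others = [row for n, o in intra.items() if n != m for row in o]
        enhanced.append(mte(h, bte(h, others)))
    return predict(ste(enhanced), head), uni_preds


if __name__ == "__main__":
    random.seed(0)
    dim = 4
    lengths = {"text": 5, "audio": 8, "vision": 3}  # non-aligned sequence lengths
    data = {m: [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n)]
            for m, n in lengths.items()}
    head = [random.gauss(0, 1) for _ in range(dim)]
    pred, uni = forward(data, head)
    labels = {m: fuse_label(p, multimodal_label=1.0) for m, p in uni.items()}
    print(round(pred, 3), {m: round(v, 3) for m, v in labels.items()})
```

In this sketch the text, audio and vision sequences have 5, 8 and 3 steps respectively, and the cross-modal step still yields one output row per query step of the target modality. In a trainable model each attention call would carry learned projections, multiple heads and feed-forward sublayers, and the generated single-modal labels would feed into the (unspecified) loss during iterative training.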


© 2025 PatSnap. All rights reserved.