Convolutional neural network accelerator based on FPGA

An FPGA-based accelerator technology for convolutional neural networks, applied in the field of convolutional neural network accelerators. It optimizes the energy efficiency ratio, keeps power consumption low, and ensures real-time performance and stability.



Embodiment Construction

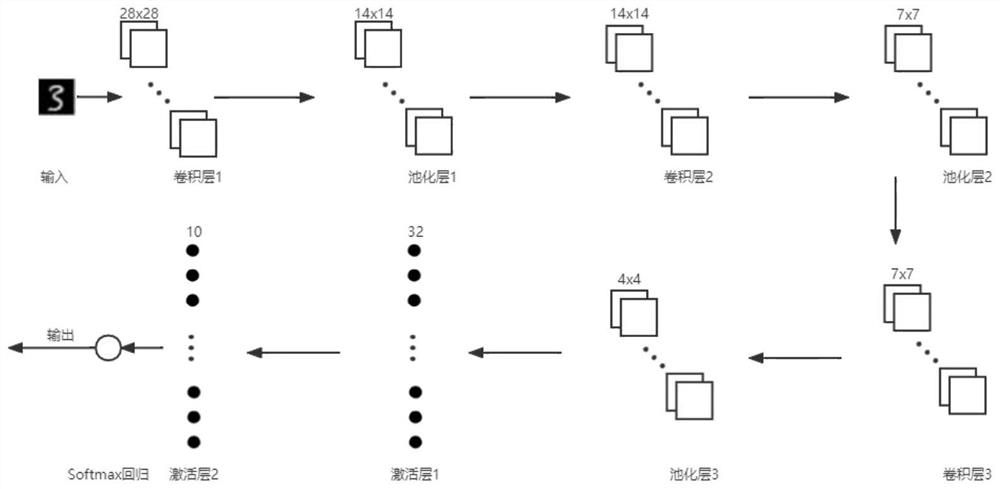

[0032] To make the purpose, content, and advantages of the present invention clearer, specific implementations of the present invention are described in further detail below with reference to the accompanying drawings and embodiments.

[0033] To address both the speed and the energy consumption problems of convolution processors, that is, to accelerate the convolution operation while reducing hardware power consumption, the present invention provides an FPGA-based convolutional neural network accelerator. It optimizes the energy efficiency ratio of the hardware and ensures the real-time performance and stability of target detection tasks.

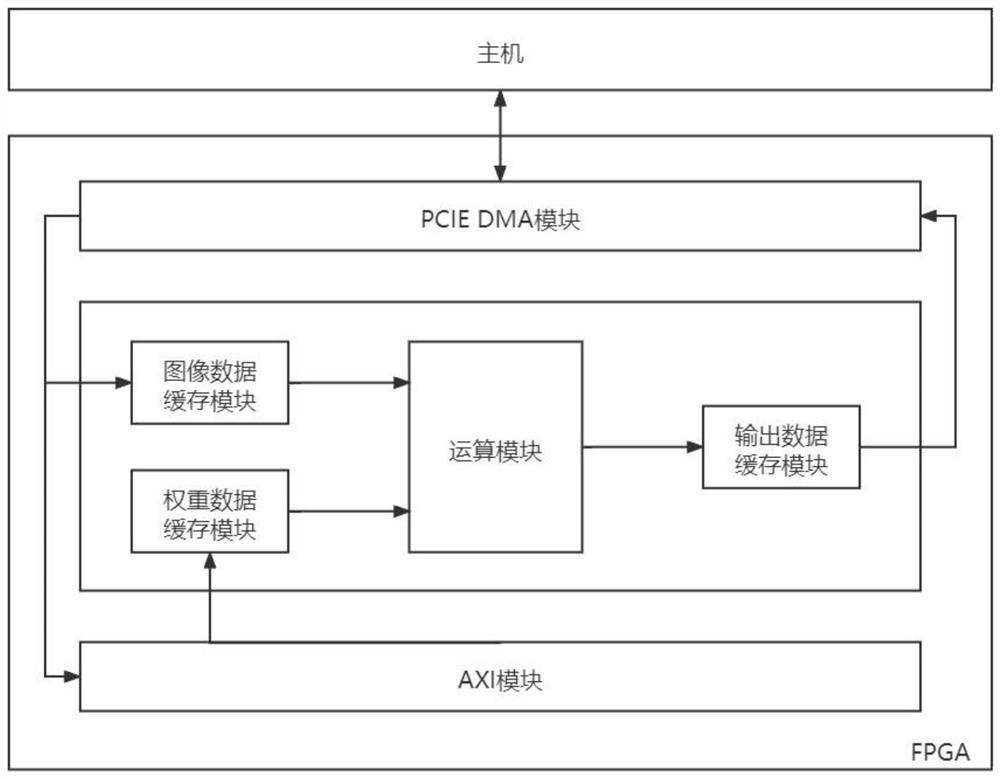

[0034] As shown in Figure 1, the FPGA-based convolutional neural network accelerator provided by the present invention comprises:

[0035] (1) Host: manages the slave devices composed of the other modules in the accelerator, and sends input image data and weight parameters to the PCIE D...
