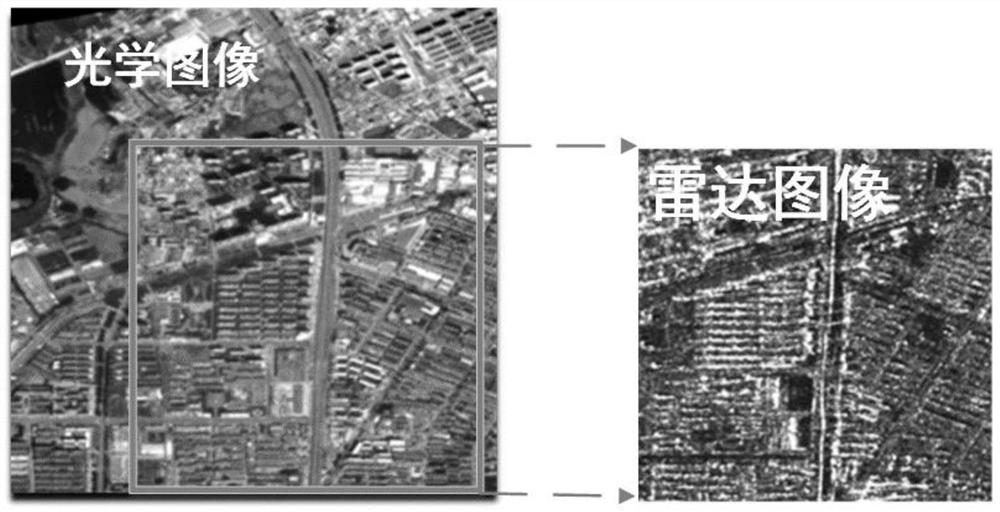

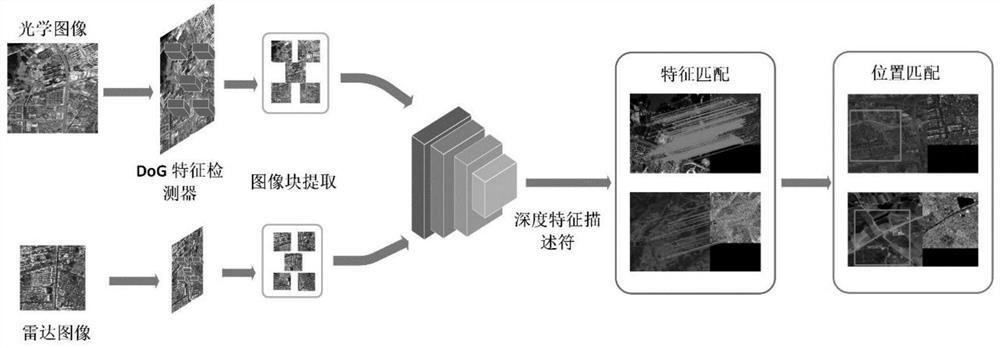

SAR image and optical image matching method based on feature matching and position matching

An optical-image and feature-matching technology in the field of image processing, claimed to achieve the best matching ability and matching accuracy and to have high practical value.


Examples

Embodiment 2

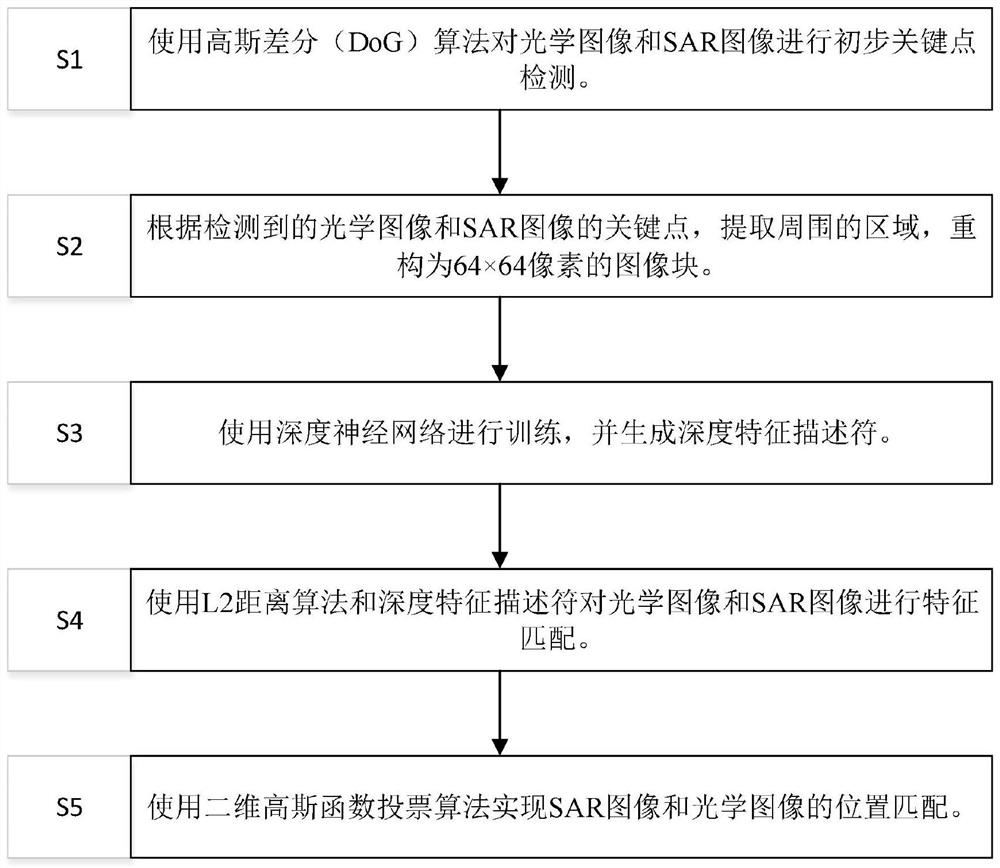

[0165] This embodiment discloses a SAR image and optical image matching method (MatchosNet) that performs position matching. It comprises the following steps:

[0166] A. Design a voting algorithm based on a two-dimensional Gaussian distribution to perform position matching between the SAR image and the optical image.

[0167] Each matched pair of feature points yields the coordinates of the upper-left pixel of the SAR image on the optical image. As shown in Figure 10, because each set of images has many different matched feature points, multiple candidate location coordinates are obtained.
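The following minimal sketch shows how one candidate corner position can be derived from each matched pair. It assumes the SAR image corresponds to a translated patch of the optical image at the same scale and without rotation, so the upper-left corner equals the optical keypoint minus the SAR keypoint. The function name `candidate_positions` is hypothetical and not taken from the patent.

```python
import numpy as np


def candidate_positions(sar_pts, opt_pts):
    """Return one candidate (x, y) for the SAR image's upper-left corner per match.

    Assumption: pure translation (same scale, no rotation) between the SAR
    image and the optical image, so each matched pair gives
    corner = optical keypoint - SAR keypoint.
    """
    sar = np.asarray(sar_pts, dtype=float)
    opt = np.asarray(opt_pts, dtype=float)
    if sar.shape != opt.shape or sar.ndim != 2 or sar.shape[1] != 2:
        raise ValueError("expected two (N, 2) arrays of matched (x, y) points")
    # Row i is the corner position implied by the i-th matched pair.
    return opt - sar
```

For example, a SAR keypoint at (10, 20) matched to an optical keypoint at (110, 220) implies a corner at (100, 200). Incorrect matches produce scattered candidates, which is why a voting step over all candidates is useful.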

[0168] A position-matching voting algorithm is designed using a two-dimensional Gaussian distribution. Let x and y be the horizontal and vertical coordinates of a predicted point; the two-dimensional Gaussian function of the present invention can then be expressed as:

[0169]

[0170] where σ₁ and σ₂ are the variances of...
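The Gaussian formula itself is missing from this page, so the following is only a minimal sketch of how a two-dimensional Gaussian voting step could work, not the patent's algorithm. It assumes each candidate position casts an axis-aligned Gaussian vote onto an integer pixel grid, treats `sigma1` and `sigma2` as the spreads (standard deviations) along x and y, and drops the normalization constant because it does not change the location of the peak. The function name `gaussian_vote` and the default sigma values are hypothetical.

```python
import numpy as np


def gaussian_vote(candidates, width, height, sigma1=3.0, sigma2=3.0):
    """Return the grid position (x, y) with the highest accumulated Gaussian vote.

    Each candidate (cx, cy) adds an unnormalized, axis-aligned Gaussian bump
    centred on itself to a (height, width) accumulator. sigma1 / sigma2 are
    treated as the spreads along x / y (an assumption, see lead-in).
    """
    cand = np.asarray(candidates, dtype=float).reshape(-1, 2)
    xs = np.arange(width, dtype=float)   # column (x) coordinates
    ys = np.arange(height, dtype=float)  # row (y) coordinates
    votes = np.zeros((height, width))
    for cx, cy in cand:
        gx = np.exp(-((xs - cx) ** 2) / (2 * sigma1 ** 2))  # shape (width,)
        gy = np.exp(-((ys - cy) ** 2) / (2 * sigma2 ** 2))  # shape (height,)
        votes += np.outer(gy, gx)  # separable 2D Gaussian: rows = y, cols = x
    row, col = np.unravel_index(np.argmax(votes), votes.shape)
    return int(col), int(row)
```

With candidates (50, 40), (51, 40), (50, 41), (49, 39) and an outlier at (10, 80) on a 100 × 100 grid, the peak lands at (50, 40), whereas a plain average of all five candidates would give (42, 48). The Gaussian voting keeps the consensus of nearby candidates and largely ignores isolated mismatches.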

Embodiment 3

[0182] In this embodiment, the feature matching and position matching methods of Embodiment 1 and Embodiment 2 are tested with different deep convolutional network structures to verify the performance of the network structure designed in the present invention.

[0183] Table 8: Average errors Xrmse, Yrmse, and XYrmse, and the number of images with correctly matched positions, for the method using different deep convolutional networks.

[0184]

[0185] Comparing the results of the different models verifies the influence of the network structure on the feature detection model and the effectiveness of the MatchosNet structure designed in the present invention. Table 8 shows the position matching results of MatchosNet and the two other methods: MatchosNet has the lowest Xrmse, Yrmse, and XYrmse, and the largest number of images in the batch with correctly matched positions. The above experiments prove that the network architecture design...
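This page does not define the metrics reported in Table 8 and Table 9, so the following sketch uses common definitions as an assumption: Xrmse and Yrmse are the root-mean-square errors of the x and y offsets, XYrmse is the root-mean-square Euclidean error, and a position counts as correct when its Euclidean error is within a tolerance `tol`. The 5-pixel default and the function name `position_metrics` are hypothetical; the patent may use a different threshold or formula.

```python
import numpy as np


def position_metrics(pred, truth, tol=5.0):
    """Return (xrmse, yrmse, xyrmse, n_correct) for predicted vs. true corners.

    pred, truth: (N, 2) arrays of (x, y) positions, one row per image.
    n_correct counts images whose Euclidean error is <= tol pixels.
    """
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    dx = pred[:, 0] - truth[:, 0]
    dy = pred[:, 1] - truth[:, 1]
    xrmse = float(np.sqrt(np.mean(dx ** 2)))
    yrmse = float(np.sqrt(np.mean(dy ** 2)))
    dist2 = dx ** 2 + dy ** 2  # squared Euclidean error per image
    xyrmse = float(np.sqrt(np.mean(dist2)))
    n_correct = int(np.sum(dist2 <= tol ** 2))
    return xrmse, yrmse, xyrmse, n_correct
```

Under these definitions XYrmse² = Xrmse² + Yrmse², so a method with the lowest Xrmse and Yrmse necessarily has the lowest XYrmse as well; the count of correct matches adds information the RMSE values do not, since a few large failures can dominate an RMSE.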

Embodiment 4

[0187] In this embodiment, the feature matching and position matching methods of Embodiment 1 and Embodiment 2 are tested with different loss functions to verify the performance of the loss function designed in the present invention.

[0188] Table 9: Average errors Xrmse, Yrmse, and XYrmse, and the number of images with correctly matched positions, for the method using different loss functions.

[0189]

[0190] Comparing the results of the different models verifies the effect of the loss function on the feature detection model. Table 9 shows the position matching results of MatchosNet and the two other methods: MatchosNet has the lowest Xrmse, Yrmse, and XYrmse, and the largest number of images in the batch with correctly matched positions. These experiments show that the loss function designed for MatchosNet in the present invention is effective for feature matching and position matching problems.
