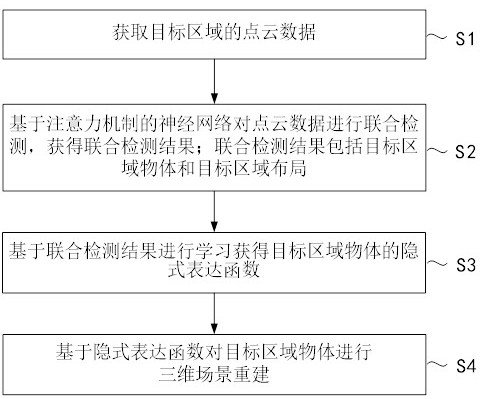

Lidar-based three-dimensional scene reconstruction method, device, and electronic device

This lidar-based 3D scene reconstruction technology, applied in the field of computer vision, addresses the problems of long processing time, limited cooperative detection, and high hardware requirements.


Problems solved by technology

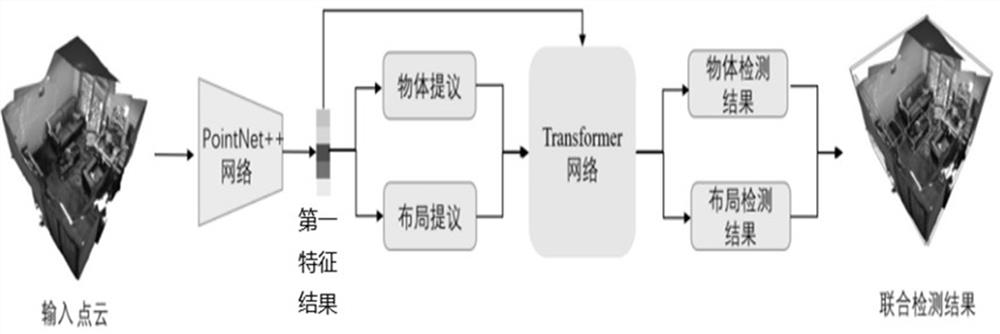

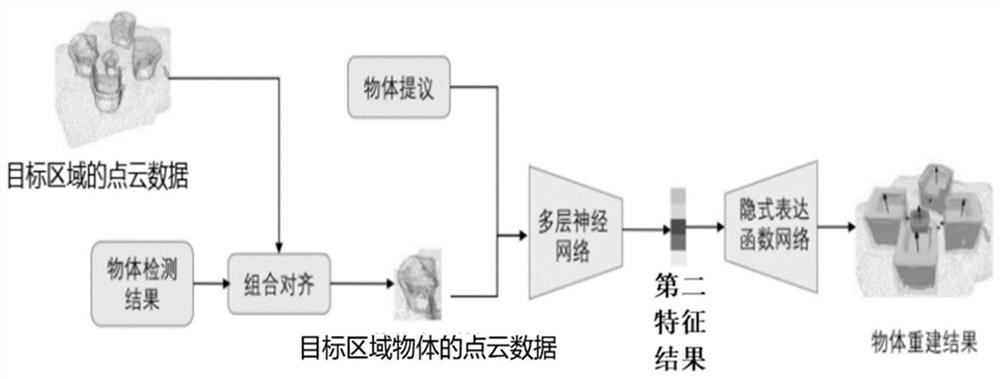


Detailed Description of Embodiments

[0061] In the description of the present invention, it should be understood that the terms "first" and "second" are used for descriptive purposes only and cannot be interpreted as indicating or implying relative importance or the number of the indicated technical features. Thus, a feature defined as "first" or "second" may explicitly or implicitly include one or more such features. In the description of the present invention, "plurality" means two or more, unless otherwise expressly and specifically defined.

[0062] As used in this embodiment, "plurality" means two or more. "And/or" describes an association between associated objects and indicates that three relationships are possible: for example, "A and/or B" can mean that A exists alone, that A and B exist at the same time, or that B exists alone. Words such as "exemplary" or "such as" are used to denote an example, instance, or illustration, and are intended to present the relevant concep...

