Virtual reality video emotion recognition method and system based on time sequence characteristics

A virtual reality emotion recognition technology, applied in neural learning methods, character and pattern recognition, instruments, etc., that avoids noise interference, reduces data subjectivity, and reduces the effect of individual differences.

Embodiment 1

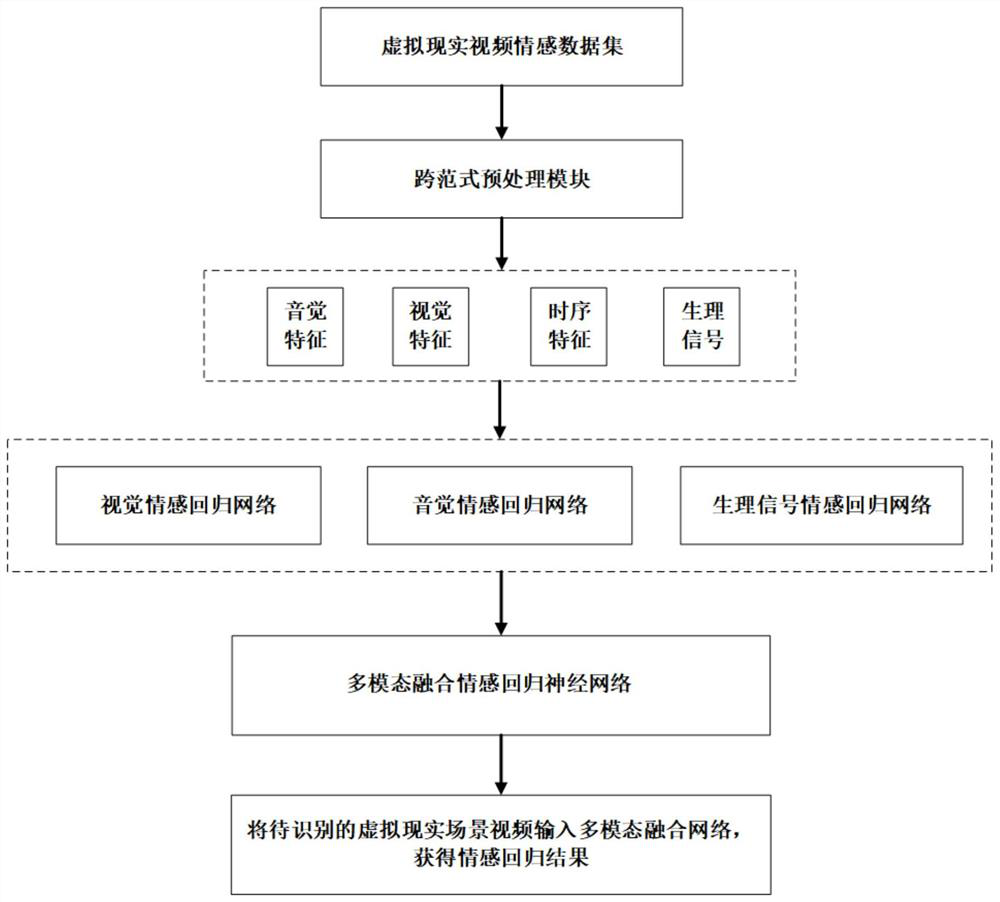

[0027] As shown in Figure 1, this embodiment provides a virtual reality video emotion recognition method based on time series features. The method mainly includes the following steps:

[0028] S1. Establish a virtual reality scene audio and video data set with continuous emotional labels. The data set includes manually extracted continuous emotional labels, audio features, visual features, physiological signal features (EEG, BVP, GSR, ECG), etc.

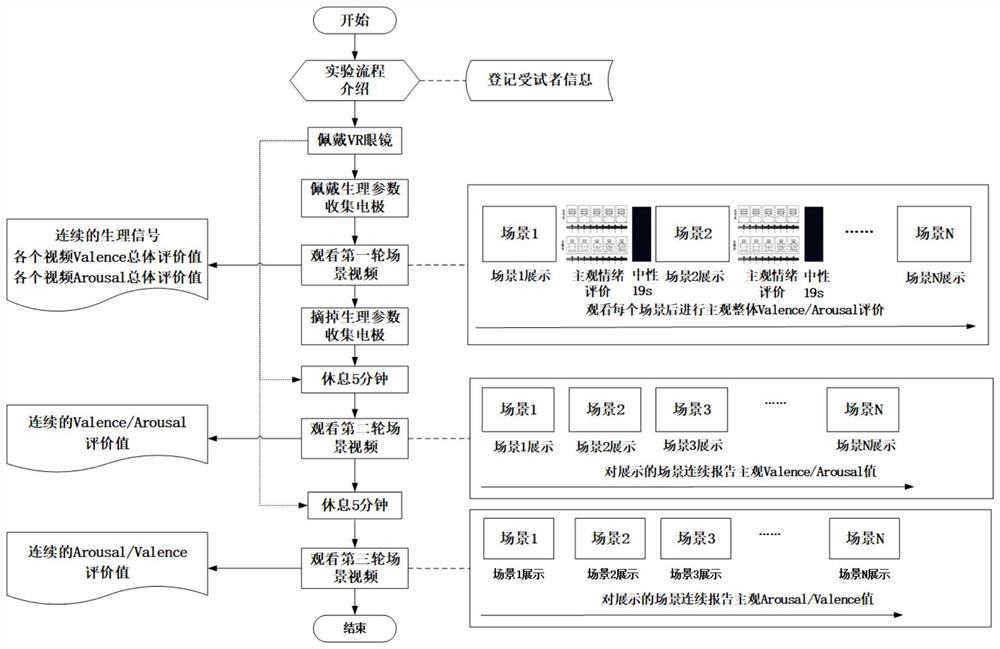

[0029] This step builds a virtual reality scene audio and video data set with continuous emotional labels. As shown in Figure 2, the specific process includes:

[0030] S11. Collect virtual reality scene videos containing different emotional content. M healthy subjects perform a SAM (Self-Assessment Manikin) self-evaluation of the N collected virtual reality scene videos, and F virtual reality scene videos are selected for each emotional quadrant according to the evaluation scores.

[0031] S12. Set up a continuous SAM self...
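The screening in step S11 could be sketched roughly as follows. This is a minimal illustration, not the patent's procedure: it assumes SAM ratings on a 1-9 scale with a neutral midpoint of 5, assumes the four quadrants are defined by mean valence and arousal, and assumes videos are ranked by their distance from the midpoint. The names `quadrant`, `screen_videos`, and `MIDPOINT` are hypothetical.

```python
from collections import defaultdict
from statistics import mean

MIDPOINT = 5.0  # assumption: neutral point of a 1-9 SAM scale


def quadrant(valence, arousal):
    """Map mean valence/arousal scores to one of four quadrant labels."""
    a = "HA" if arousal >= MIDPOINT else "LA"
    v = "HV" if valence >= MIDPOINT else "LV"
    return a + v  # e.g. "HAHV" = high arousal, high valence


def screen_videos(ratings, per_quadrant):
    """Select up to `per_quadrant` (F) videos in each emotional quadrant.

    ratings: {video_id: [(valence, arousal), ...]}, one tuple per subject
             (M subjects rating N videos).
    """
    buckets = defaultdict(list)
    for video_id, scores in ratings.items():
        v = mean(s[0] for s in scores)
        a = mean(s[1] for s in scores)
        # Assumed ranking criterion: distance from the neutral midpoint.
        strength = ((v - MIDPOINT) ** 2 + (a - MIDPOINT) ** 2) ** 0.5
        buckets[quadrant(v, a)].append((strength, video_id))
    return {
        q: [vid for _, vid in sorted(items, reverse=True)[:per_quadrant]]
        for q, items in buckets.items()
    }
```

For example, `screen_videos(ratings, per_quadrant=4)` would keep at most four of the most strongly rated videos per quadrant. The ranking rule is one plausible choice; the source only states that selection is based on the evaluation scores.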

Embodiment 2

[0053] Based on the same inventive concept as Embodiment 1, this embodiment provides a virtual reality video emotion recognition system based on time series features, including:

[0054] The data set establishment module is used to establish a virtual reality scene audio and video data set with continuous emotional labels. The content of the data set includes manually extracted continuous emotional labels, audio features, visual features and physiological signal features;

[0055] The preprocessing module is used for cross-paradigm data preprocessing of the virtual reality scene video to be recognized;

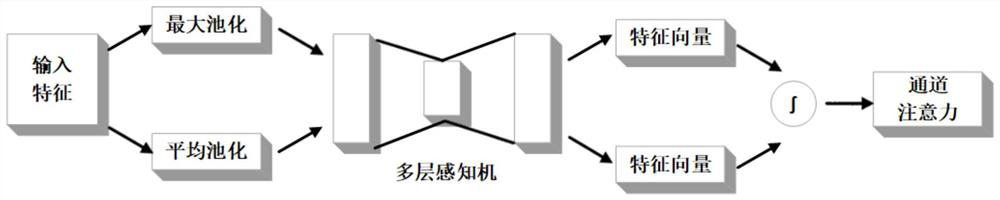

[0056] The feature extraction module performs feature extraction on the preprocessed data, and uses a deep learning network to extract deep features from audio, visual, time series and physiological signals;

[0057] The multi-modal regression model generation and training module trains a single-modal virtual reality scene video emotion regression model, and integrates to gene...
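One record in the data set handled by the data set establishment module in [0054] might be laid out as below. This is a sketch under stated assumptions: the continuous labels are taken to be valence and arousal sampled at a common time step, every modality is assumed to be resampled to that same step, and the physiological channels are taken from the list in Embodiment 1. The class `VRSample` and its field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Physiological channels named in Embodiment 1.
PHYSIO_CHANNELS = ("EEG", "BVP", "GSR", "ECG")


@dataclass
class VRSample:
    """One VR scene video with time-aligned labels and features."""
    video_id: str
    valence: List[float]        # assumed continuous label, one per time step
    arousal: List[float]        # assumed continuous label, one per time step
    audio: List[List[float]]    # one audio feature vector per time step
    visual: List[List[float]]   # one visual feature vector per time step
    physio: Dict[str, List[List[float]]] = field(default_factory=dict)

    def validate(self) -> int:
        """Check that all sequences share one length; return that length."""
        unknown = [ch for ch in self.physio if ch not in PHYSIO_CHANNELS]
        if unknown:
            raise ValueError(f"unknown physiological channels: {unknown}")
        n = len(self.valence)
        lengths = {
            "arousal": len(self.arousal),
            "audio": len(self.audio),
            "visual": len(self.visual),
        }
        lengths.update({ch: len(seq) for ch, seq in self.physio.items()})
        bad = [name for name, length in lengths.items() if length != n]
        if bad:
            raise ValueError(f"sequences not aligned with labels: {bad}")
        return n
```

Keeping labels and features aligned per time step is what lets a downstream regression model treat each record as a time series. The source does not specify the sampling rate or alignment scheme.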
