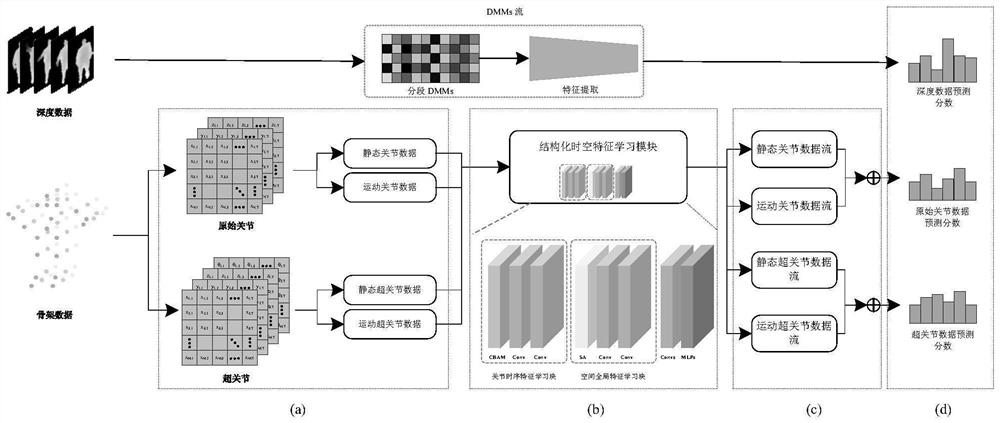

Super-joint and multi-modal network and behavior identification method thereof

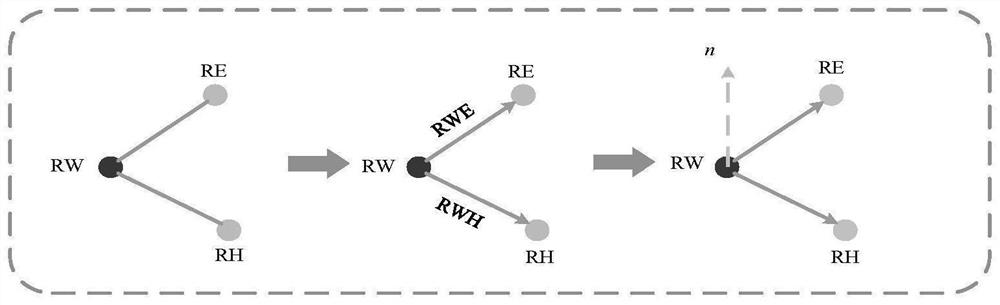

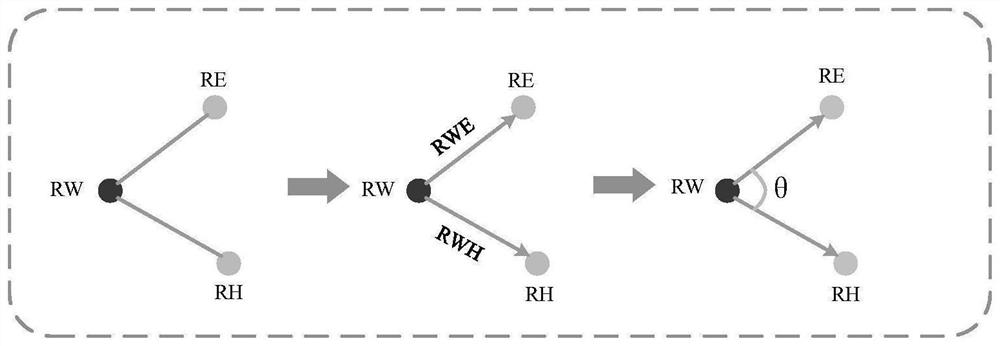

A behavior recognition method based on super-joint technology, applied in the field of neural networks. It addresses two problems: joint dependencies being ignored, and dependencies between a joint and its adjacent points not being expressed. The result is improved action recognition performance.


Embodiment Construction

[0060] The present invention is further described below with reference to the accompanying drawings and embodiments. The drawings are simplified schematic diagrams that illustrate only the basic structure of the invention, so they show only the components related to it.

[0061] To evaluate the effectiveness of the method of the present invention, experiments are carried out on public datasets that provide depth maps and skeleton information. A large public dataset provides a wider range of training data and makes the model stronger; to verify the robustness of the method, a classic small dataset is also selected. Experiments are therefore conducted on two datasets of distinctly different scales: UTD-MHAD (small) and NTU-RGB+D (large).

[0062] The invention is implemented in the PyTorch framework, with Python version 3...
