Text classification method and system based on dynamic multilayer semantic perceptron

This technology belongs to the field of text classification and concerns a text classification method and system based on a dynamic multi-layer semantic perceptron. It addresses the problem of large, complex models with many parameters. Its stated effects are a smaller number of model parameters, reduced overfitting, and lower time and space complexity.


Examples

Embodiment 1

[0048] This embodiment provides a text classification method based on a dynamic multi-layer semantic perceptron, which specifically includes the following steps:

[0049] Step 1: Obtain the training set $D=\{(x_i, y_i)\}_{i=1}^{n}$, where $x_i$ denotes the $i$-th sample, $y_i \in \{1, \dots, C\}$ is its true label (category), $n$ is the number of samples, $m$ is the text length in words, and $C$ is the number of categories. The training set contains several texts (samples), and each text corresponds to one true label $y_i$.

[0050] Here, a text can be a single sentence or a full article.

[0051] In this embodiment, which targets the shortage of training samples in news-text classification, each text is a news item, and the category y is one of "politics", "economics", "military", "sports", and "entertainment", numbered 1 to 5 respectively, so C = 5.
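The data preparation in Step 1 can be sketched as follows. This is a minimal sketch under stated assumptions, not the patented procedure: whitespace tokenization, the `<pad>` token, and truncating or padding every text to exactly m words are assumptions, and the helper names `to_fixed_length` and `build_training_set` are hypothetical. Only the five category names, their numbering 1 to 5, and C = 5 come from the text.

```python
from typing import List, Tuple

# Category names from the embodiment, numbered 1 to 5 in the listed order.
CATEGORIES = ["politics", "economics", "military", "sports", "entertainment"]
LABEL_OF = {name: idx for idx, name in enumerate(CATEGORIES, start=1)}
C = len(CATEGORIES)  # number of categories, C = 5

PAD = "<pad>"  # hypothetical padding token (assumption)


def to_fixed_length(tokens: List[str], m: int) -> List[str]:
    """Truncate or pad a tokenized text to exactly m words (assumed convention)."""
    return tokens[:m] + [PAD] * max(0, m - len(tokens))


def build_training_set(raw: List[Tuple[str, str]], m: int) -> List[Tuple[List[str], int]]:
    """Turn (news text, category name) pairs into D = {(x_i, y_i)}, i = 1..n.

    Whitespace tokenization is an assumption; Chinese news text would
    need a word segmenter instead.
    """
    dataset = []
    for text, category in raw:
        x_i = to_fixed_length(text.split(), m)
        y_i = LABEL_OF[category]  # integer label in 1..C
        dataset.append((x_i, y_i))
    return dataset


raw_news = [
    ("parliament passes new budget bill", "politics"),
    ("home team wins the cup final", "sports"),
]
D = build_training_set(raw_news, m=6)
n = len(D)  # number of samples
print(n, C, D[0])
```

Fixing every sample to length m is one common way to batch texts; the source does not say whether the DMSP model requires fixed-length input.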

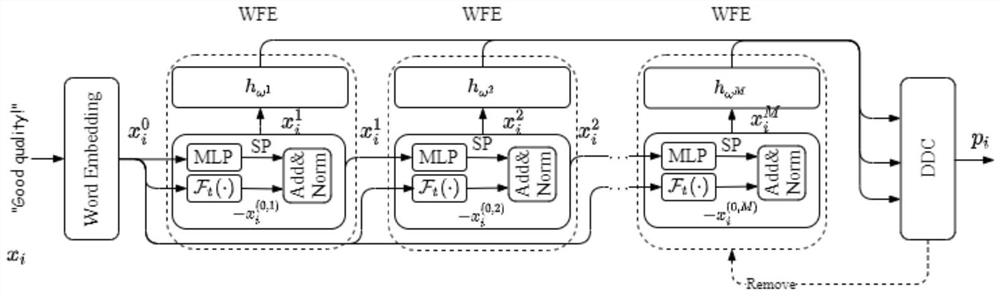

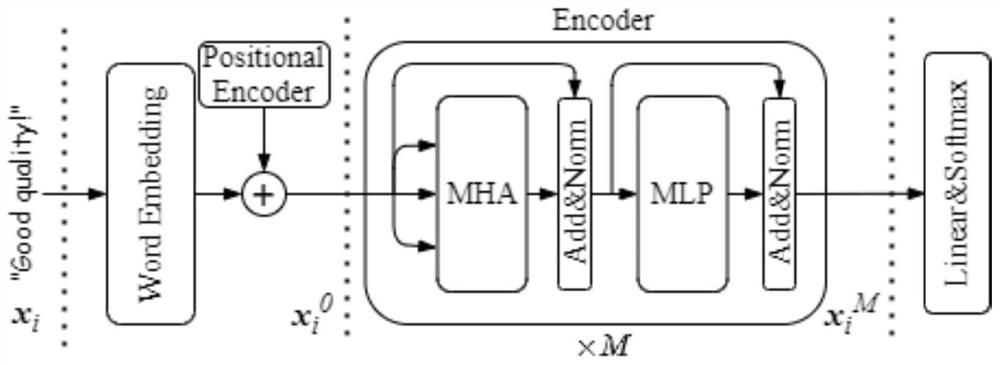

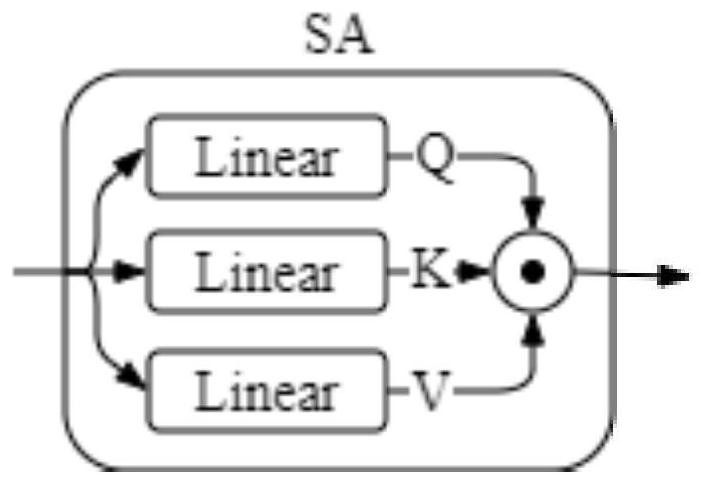

[0052] Step 2: Use the training set to train a dynamic multi-layer semantic perceptron model (Dynamic Multi-Layer Semantics Perceptron, DMSP), yielding a trained DMSP model. A...

Embodiment 2

[0218] This embodiment provides a text classification system based on a dynamic multi-layer semantic perceptron, which specifically includes the following modules:

[0219] a text acquisition module, configured to acquire the text to be classified; and

[0220] a classification module, configured to use a dynamic multi-layer semantic perceptron model to determine the category of the text to be classified.

[0221] The dynamic multi-layer semantic perceptron model includes a word embedding layer, a dynamic depth controller, and several layers of weighted feature learners connected in sequence. Each weighted feature learner consists of a semantic perceptron followed by a base classifier. The output of the word embedding layer serves as the input to the semantic perceptron of every weighted feature learner, and the output of the semantic perceptron of each weighted feature learner serves as the input of the...
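The component wiring described above can be illustrated with a toy, untrained forward pass. The source is cut off before it specifies any internals, so everything beyond the component names is an assumption: mean-pooled word embeddings, a tanh layer as the semantic perceptron that also receives the previous layer's features, a softmax linear layer as the base classifier, fixed weights 1/k for combining the outputs of layer k, and a confidence threshold as the dynamic depth controller's stopping rule. `ToyDMSP` and `WeightedFeatureLearner` are hypothetical names.

```python
import math
import random
from typing import List, Tuple


def softmax(z: List[float]) -> List[float]:
    mx = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - mx) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def matvec(W: List[List[float]], x: List[float]) -> List[float]:
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def rand_matrix(rng: random.Random, rows: int, cols: int) -> List[List[float]]:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class WeightedFeatureLearner:
    """One layer: semantic perceptron -> base classifier (internals assumed)."""

    def __init__(self, rng: random.Random, emb_dim: int, hid_dim: int, num_classes: int, weight: float):
        # Assumed: the semantic perceptron sees the pooled embedding plus the previous layer's features.
        self.W = rand_matrix(rng, hid_dim, emb_dim + hid_dim)
        self.V = rand_matrix(rng, num_classes, hid_dim)  # base classifier weights
        self.weight = weight  # hypothetical fixed layer weight used in the combined vote

    def forward(self, pooled: List[float], prev_h: List[float]) -> Tuple[List[float], List[float]]:
        h = [math.tanh(v) for v in matvec(self.W, pooled + prev_h)]  # semantic features
        return h, softmax(matvec(self.V, h))  # class probabilities from the base classifier


class ToyDMSP:
    def __init__(self, vocab: List[str], emb_dim: int = 8, hid_dim: int = 8, num_classes: int = 5,
                 max_layers: int = 4, threshold: float = 0.5, seed: int = 0):
        rng = random.Random(seed)
        self.emb_dim, self.hid_dim, self.num_classes = emb_dim, hid_dim, num_classes
        # Word embedding layer: random, untrained vectors.
        self.embedding = {w: [rng.uniform(-1, 1) for _ in range(emb_dim)] for w in vocab}
        # Layer k (1-indexed) gets weight 1/k (assumption).
        self.layers = [WeightedFeatureLearner(rng, emb_dim, hid_dim, num_classes, 1.0 / (k + 1))
                       for k in range(max_layers)]
        self.threshold = threshold

    def embed(self, tokens: List[str]) -> List[float]:
        # Mean-pool known word vectors (pooling is an assumption); unknown words are skipped.
        vecs = [self.embedding[t] for t in tokens if t in self.embedding]
        if not vecs:
            return [0.0] * self.emb_dim
        return [sum(col) / len(vecs) for col in zip(*vecs)]

    def predict(self, tokens: List[str]) -> Tuple[int, int]:
        pooled = self.embed(tokens)  # shared input to every layer's semantic perceptron
        h = [0.0] * self.hid_dim
        combined = [0.0] * self.num_classes
        total, depth = 0.0, 0
        for layer in self.layers:
            h, probs = layer.forward(pooled, h)
            combined = [c + layer.weight * p for c, p in zip(combined, probs)]
            total += layer.weight
            depth += 1
            avg = [c / total for c in combined]  # weighted average of layer outputs
            # Hypothetical dynamic depth controller: stop once the vote is confident enough.
            if max(avg) >= self.threshold:
                break
        label = avg.index(max(avg)) + 1  # categories are numbered 1..C
        return label, depth


model = ToyDMSP(vocab=["budget", "bill", "cup", "final"], threshold=0.3)
label, depth = model.predict("home team wins the cup final".split())
print(label, depth)
```

Because the weights are random, the predicted label here is meaningless; the sketch only shows how a shared embedding input, stacked per-layer classifiers, and an early-exit rule could fit together, which is one plausible reading of how a dynamic depth could reduce computation.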

Embodiment 3

[0228] This embodiment provides a computer-readable storage medium storing a computer program which, when executed by a processor, implements the steps of the text classification method based on the dynamic multi-layer semantic perceptron described in Embodiment 1.
