Apparatus and method for indicating target by image processing without three-dimensional modeling

Target-indication and image-processing technology, applied in the field of image recognition, that can solve problems such as cumbersome assembly.

Embodiment Construction

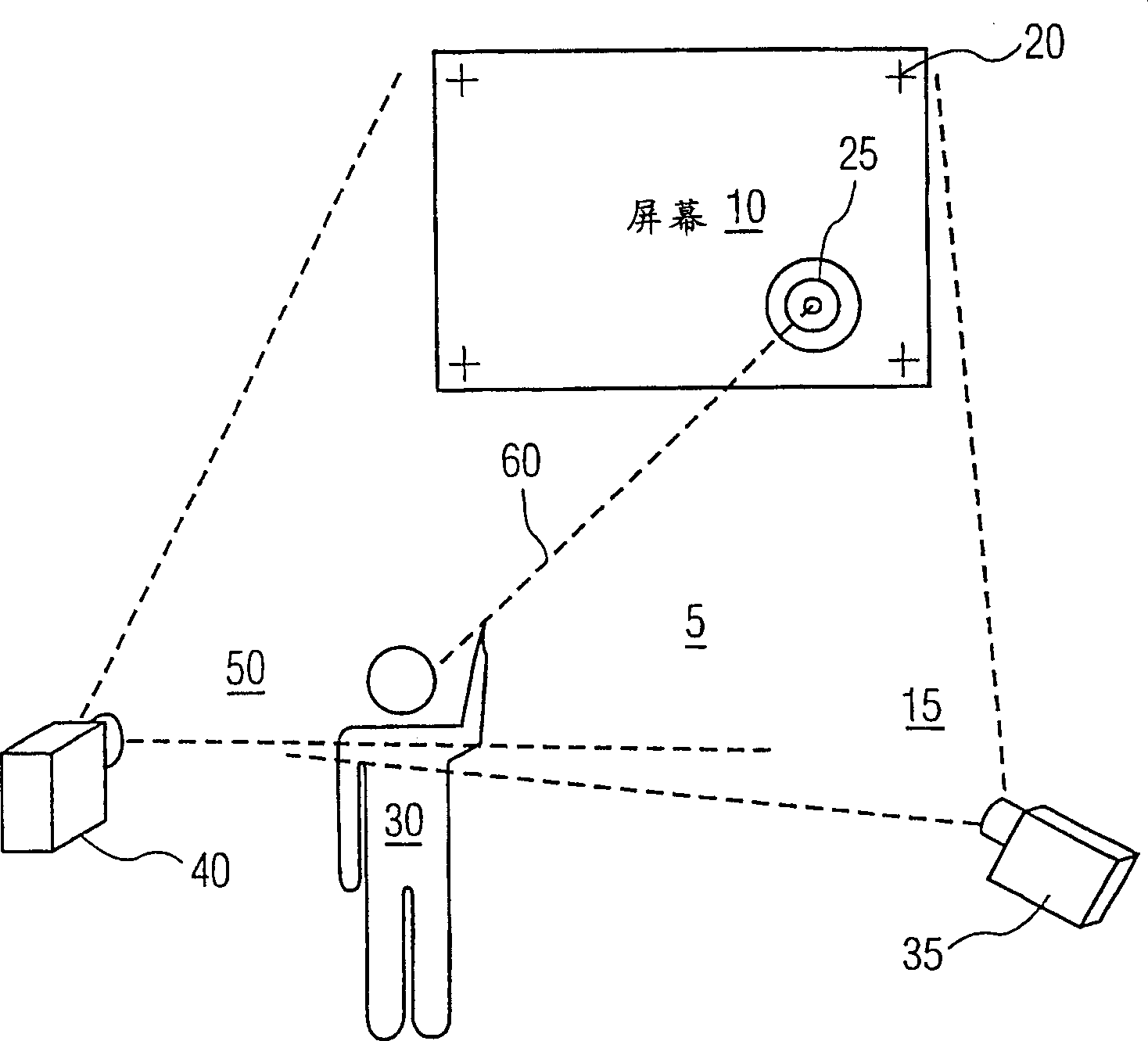

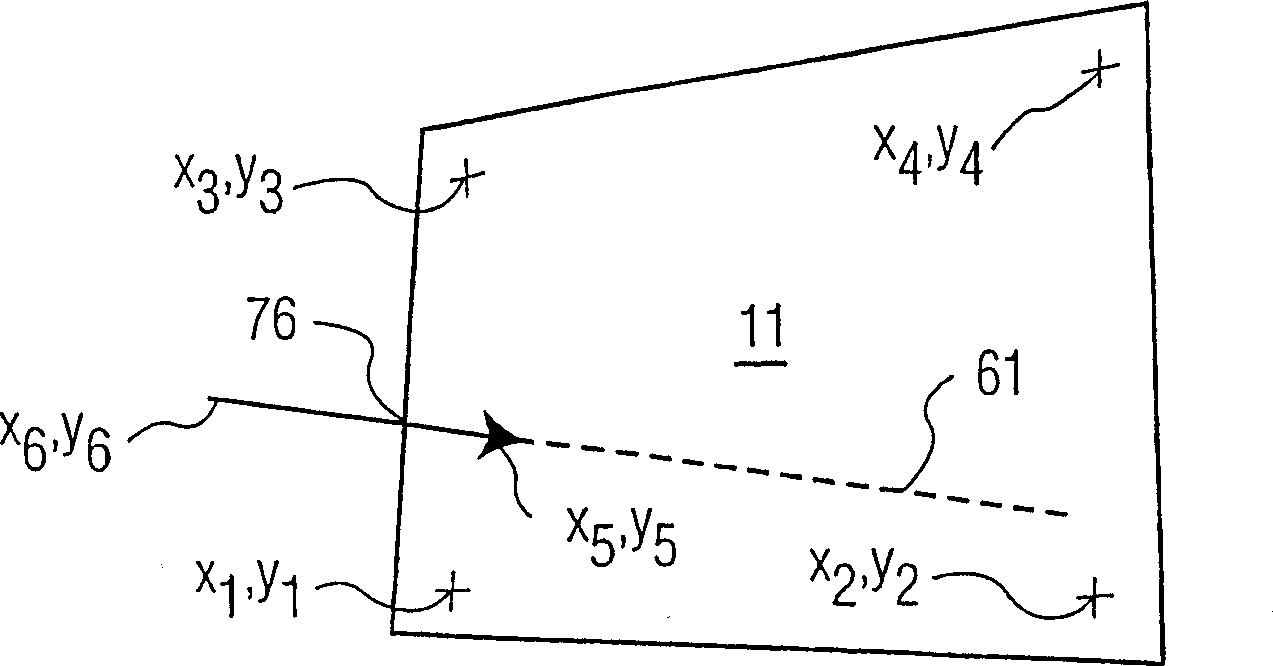

[0036] Referring to FIG. 1, a user 30 indicates a target 25 located in or on a plane, such as a television or projection screen 10 or a wall (not shown). Combining the images from the two cameras 35 and 40 in the manner described below enables the target's location to be identified in the image of either camera. The illustration shows the user 30 pointing at the target 25 with a pointing gesture. It has been determined experimentally that when a user points at a target, the user's fingertip, the user's right (or left) eye, and the target lie on a straight line. This means that, in the planar projection seen by either camera, the target lies along the planar projection of the straight line defined by the user's eye and fingertip. In the present invention, the two planar projections are transformed into a common planar projection, which can be that of either camera 35 or 40, or any third plane.
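The geometry in this paragraph can be illustrated with a minimal sketch. It assumes that a 3x3 planar transform (homography) from each camera image to the common plane is already known; how those transforms are obtained is not covered here. The function names (`pointing_line`, `intersect`) and the matrices `H1` and `H2` are hypothetical and chosen only for illustration. Each camera contributes one line through the projected eye and fingertip, and the target is taken as the intersection of the two lines in the common plane.

```python
import numpy as np

def to_homogeneous(xy):
    """Convert a 2D image point (x, y) to homogeneous coordinates (x, y, 1)."""
    return np.array([xy[0], xy[1], 1.0])

def pointing_line(eye_xy, fingertip_xy, H):
    """Line through the eye and fingertip, expressed in the common plane.

    H is an assumed 3x3 transform from this camera's image to the common
    plane. A homography preserves collinearity, so mapping the two points
    and joining them gives the mapped line.
    """
    eye = H @ to_homogeneous(eye_xy)
    tip = H @ to_homogeneous(fingertip_xy)
    return np.cross(eye, tip)  # homogeneous line through both points

def intersect(line_a, line_b):
    """Intersection of two homogeneous lines as an (x, y) point."""
    p = np.cross(line_a, line_b)
    if abs(p[2]) < 1e-12:
        raise ValueError("lines are parallel; no unique intersection")
    return p[:2] / p[2]

# Illustrative values only: camera 1's image is used as the common plane,
# and camera 2's image is assumed to differ from it by a pure translation.
H1 = np.eye(3)
H2 = np.array([[1.0, 0.0, 1.0],
               [0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0]])

line1 = pointing_line((0.0, 0.0), (1.0, 1.0), H1)
line2 = pointing_line((3.0, 0.0), (2.0, 1.0), H2)
target = intersect(line1, line2)
print(target)
```

The two cameras must view the user from different directions; if both projected lines coincide or are parallel in the common plane, the intersection is undefined and the sketch raises an error.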

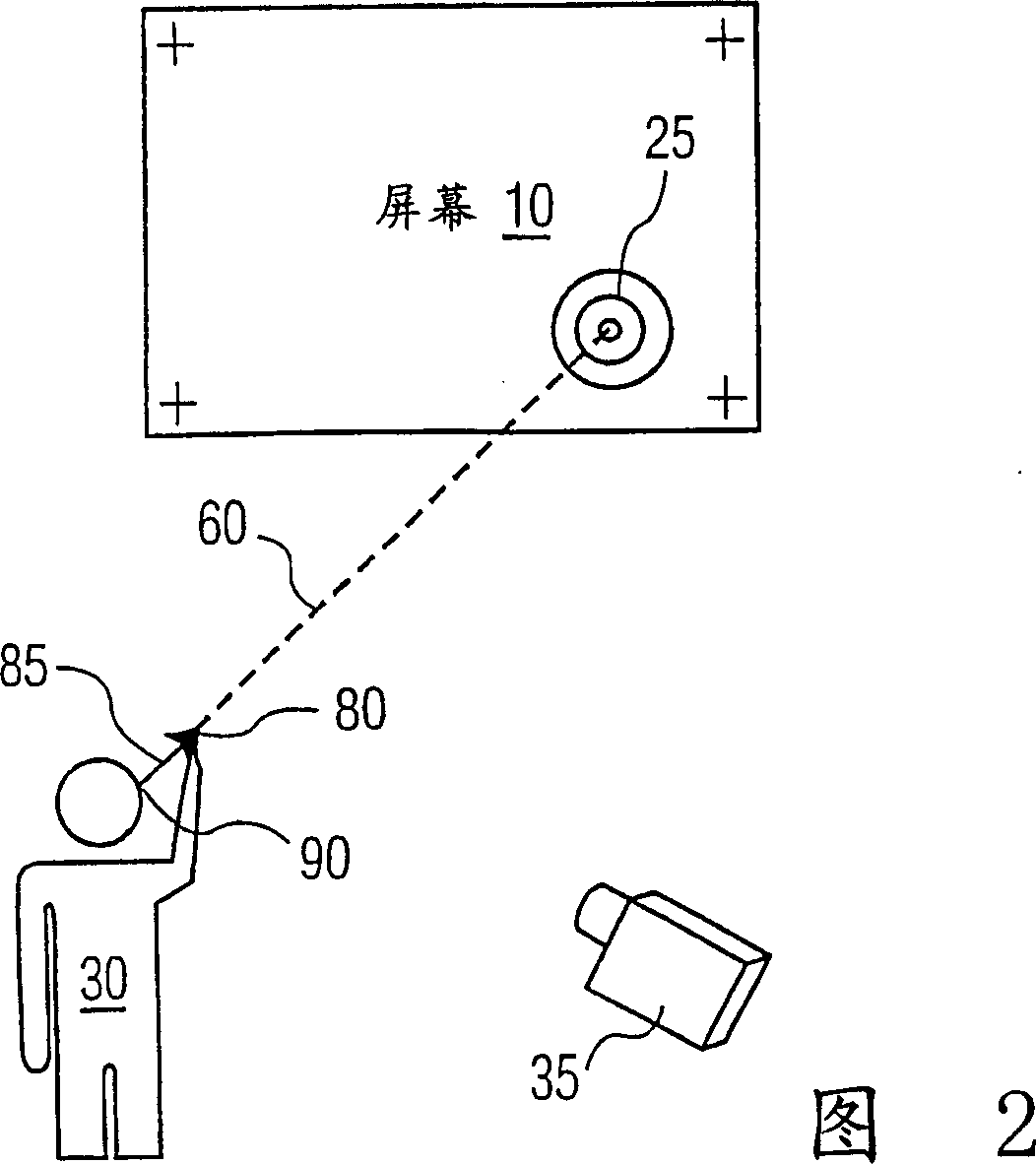

[0037] Referring to FIG. 2, the cameras are aimed so that they each ca...
