Multi-description video coding method based on transformation and data fusion

A technology of data fusion and video coding, applied in the field of video coding, can solve different problems


Examples

Embodiment 1

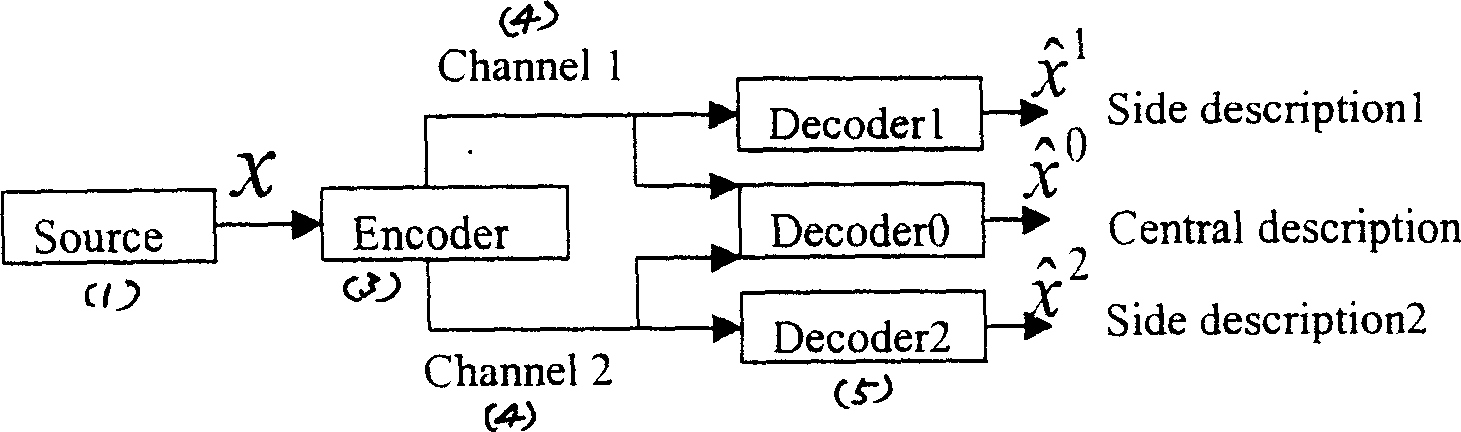

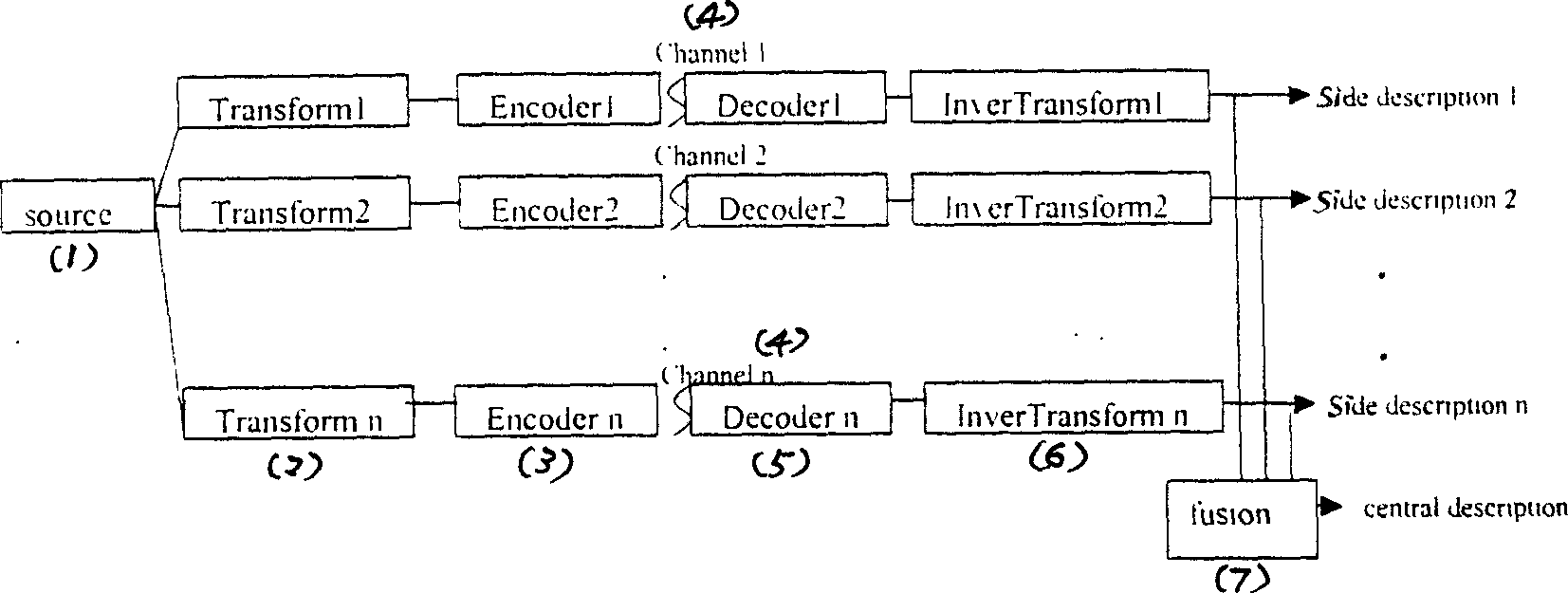

[0025] Example 1. A multi-description video coding method based on transformation and data fusion, as shown in Figure 2, includes the following steps:

[0026] ① Apply transformation 1 through transformation n (2) to the signal to be encoded (1);

[0027] ② Perform quantization and entropy coding (3) on each of the transformed signals 1 to n (2);

[0028] ③ Decode (5) the quantized and entropy-coded (3) signals 1 to n, each along its respective path 1 to n (4);

[0029] ④ Apply the inverse transformation (6) to each of the decoded signals 1 to n;

[0030] ⑤ Obtain side descriptions 1 to n from the respective inverse-transformed signals, and fuse (7) the n inverse-transformed (6) signals into a central description.
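Steps ① to ⑤ can be illustrated with a minimal sketch on a one-dimensional signal. The text above does not name the transformations, the quantizer, the entropy coder, or the fusion rule, so everything concrete here is an assumption made for illustration: n = 2, an orthonormal DCT and an orthonormal Hadamard transform as the two transformations, a uniform quantizer with step `STEP`, `zlib` as a stand-in for the lossless entropy-coding stage, and plain averaging as the fusion. The function names are hypothetical and it is not the MPEG-conformant codec of the embodiment.

```python
import zlib
import numpy as np

STEP = 4.0  # assumed uniform quantizer step size


def dct_matrix(n):
    """Orthonormal DCT-II matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)
    return m


def hadamard_matrix(n):
    """Orthonormal Hadamard matrix (n must be a power of two)."""
    h = np.array([[1.0]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h / np.sqrt(n)


def encode(signal, basis, step):
    """Steps 1-2: transform, quantize, then losslessly pack the indices."""
    coeffs = basis @ signal
    q = np.round(coeffs / step).astype(np.int32)
    return zlib.compress(q.tobytes())  # stand-in for entropy coding


def decode(payload, basis, step):
    """Steps 3-4: unpack the indices, dequantize, inverse transform."""
    q = np.frombuffer(zlib.decompress(payload), dtype=np.int32)
    return basis.T @ (q * step)  # inverse of an orthonormal basis is its transpose


def fuse(side_descriptions):
    """Step 5: assumed fusion rule, the element-wise mean of the side descriptions."""
    return np.mean(side_descriptions, axis=0)


rng = np.random.default_rng(0)
x = rng.uniform(0.0, 255.0, size=8)            # signal to be encoded
bases = [dct_matrix(8), hadamard_matrix(8)]    # transformation 1 and 2

payloads = [encode(x, b, STEP) for b in bases]                 # one stream per path
sides = [decode(p, b, STEP) for p, b in zip(payloads, bases)]  # side descriptions
central = fuse(sides)                                          # central description

side_errs = [float(np.linalg.norm(x - s)) for s in sides]
central_err = float(np.linalg.norm(x - central))
print("side errors:", side_errs)
print("central error:", central_err)
```

Each side description can be reconstructed from its own stream alone, which is what makes the scheme tolerant to the loss of a path. With averaging as the fusion rule, the central error can never exceed the largest side error; whether it is substantially smaller depends on how different the quantization errors of the individual transformations are.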

[0031] Each description is a code stream conforming to the MPEG video standard, and the I frames occupy exactly the same positions, in the same order, in every side description, and multiple description coding based on transformation and data fusion is applied to ...
