Speech processing system based upon a representational state transfer (REST) architecture that uses web 2.0 concepts for speech resource interfaces

Embodiment Construction

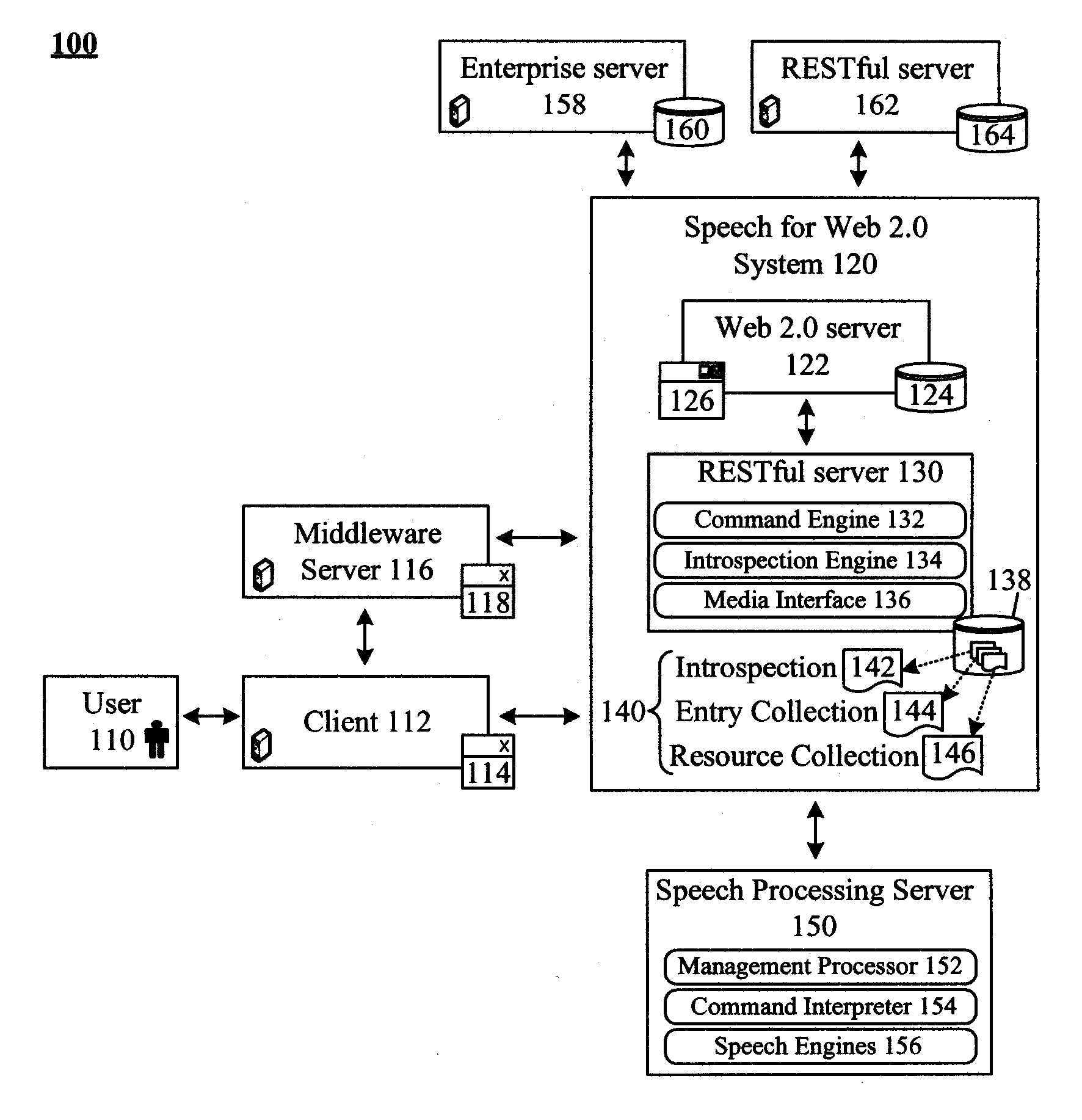

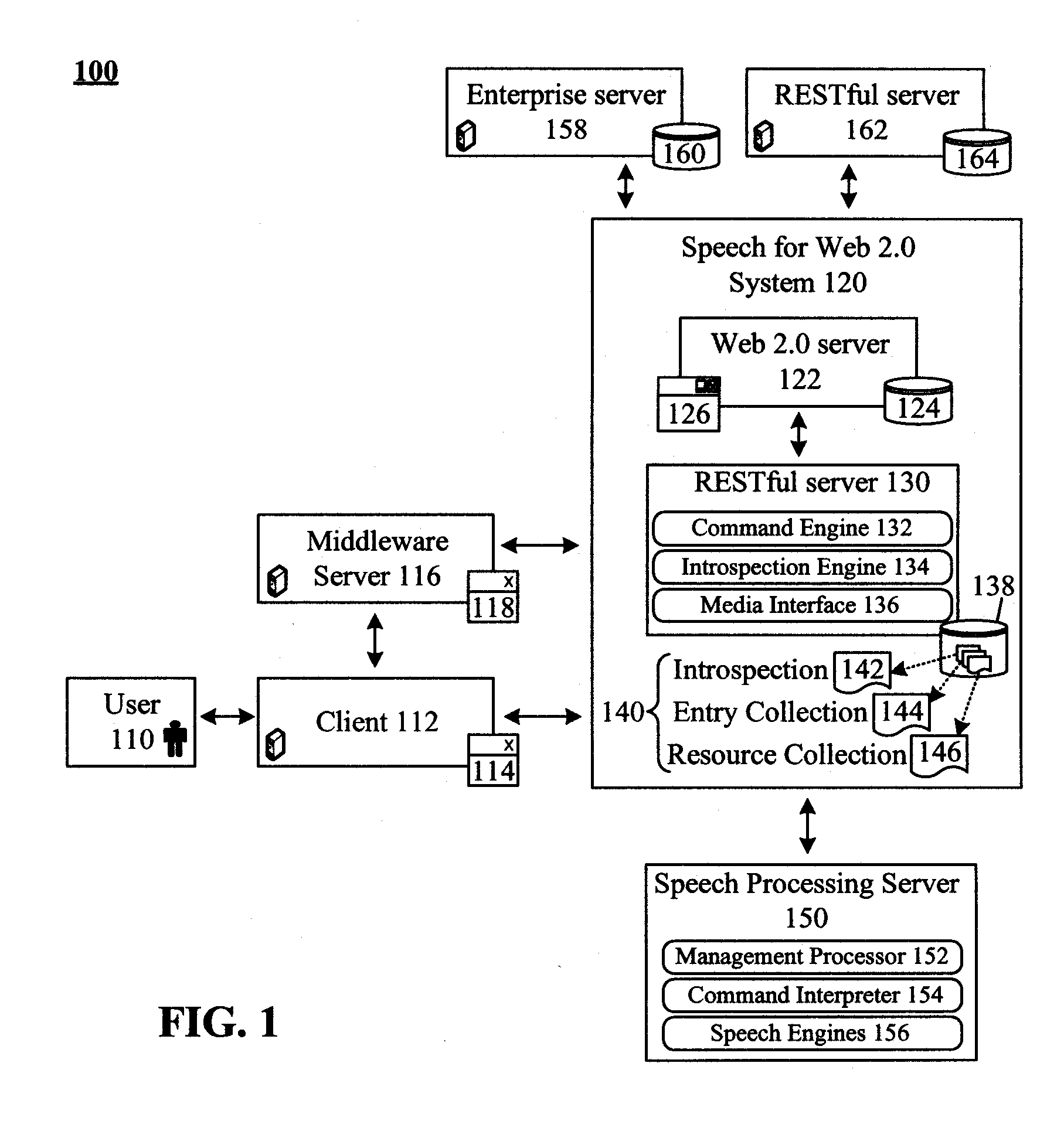

[0022] FIG. 1 is a schematic diagram of a system 100 that utilizes Web 2.0 concepts for speech processing operations in accordance with an embodiment of the inventive arrangements disclosed herein. In system 100, a user 110 can use an interface 114 of client 112 to communicate with the speech for Web 2.0 system 120, which can include a Web 2.0 server 122 and/or a RESTful server 130. When the client 112 is a basic computing device (e.g., a telephone), a middleware server 116 can provide an interface 118 to system 120. Interface 114 and/or 118 can be a Web or voice browser, which communicates directly with system 120 using Web 2.0 conventions. Applications 126, which the client 112 accesses, can be voice-enabled applications stored in data store 124. A type of browser (e.g., interface 114 and/or 118) used to access the applications 126 can be transparent to the system 120, or can be transparent at least to the RESTful server 130 of system 120.
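The routing described in paragraph [0022] can be illustrated with a minimal Python sketch. It is not taken from the patent: the `Client` class, the function names, the `http://speech.example/` base URL, and the `applications/` path are all hypothetical and chosen only for illustration. The sketch assumes that a basic device reaches system 120 through middleware server 116, that any other client uses its own interface 114, and that the request sent to the RESTful server has the same shape in both cases, which is one way to read the statement that the browser type can be transparent to the server.

```python
from dataclasses import dataclass
from typing import Tuple
from urllib.parse import quote, urljoin


@dataclass(frozen=True)
class Client:
    """Hypothetical model of client 112.

    `basic` marks a basic computing device (e.g., a telephone) that
    relies on a middleware server for its browser interface.
    """
    name: str
    basic: bool = False


def select_interface(client: Client) -> str:
    """Return which interface the client uses to reach system 120."""
    # Basic devices use interface 118, provided by middleware server 116;
    # other clients use their own interface 114.
    return "interface 118" if client.basic else "interface 114"


def build_request(base_url: str, app_id: str) -> Tuple[str, str]:
    """Build a (method, URL) pair addressing a voice-enabled application.

    The URL layout is an assumption for illustration only. Note that the
    function takes no interface argument: the request does not depend on
    which browser or interface issued it.
    """
    path = "applications/" + quote(app_id, safe="")
    return "GET", urljoin(base_url, path)


phone = Client("telephone", basic=True)
desktop = Client("desktop browser")

for client in (phone, desktop):
    method, url = build_request("http://speech.example/", "weather")
    print(client.name, "via", select_interface(client), "->", method, url)
```

Both clients produce the same method and URL; only the interface that carries the request differs.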

[0023] The RESTful server 130 can provide speech...
