System and method for displaying selected garments on a computer-simulated mannequin

A computer-simulation and clothing technology, applied in image data processing, 3D modelling, cathode-ray tube indicators, etc. It addresses the problem that three-dimensional modeling by itself is impractical in the most commonly available computing environments, and achieves efficient production of images.

Embodiment Construction

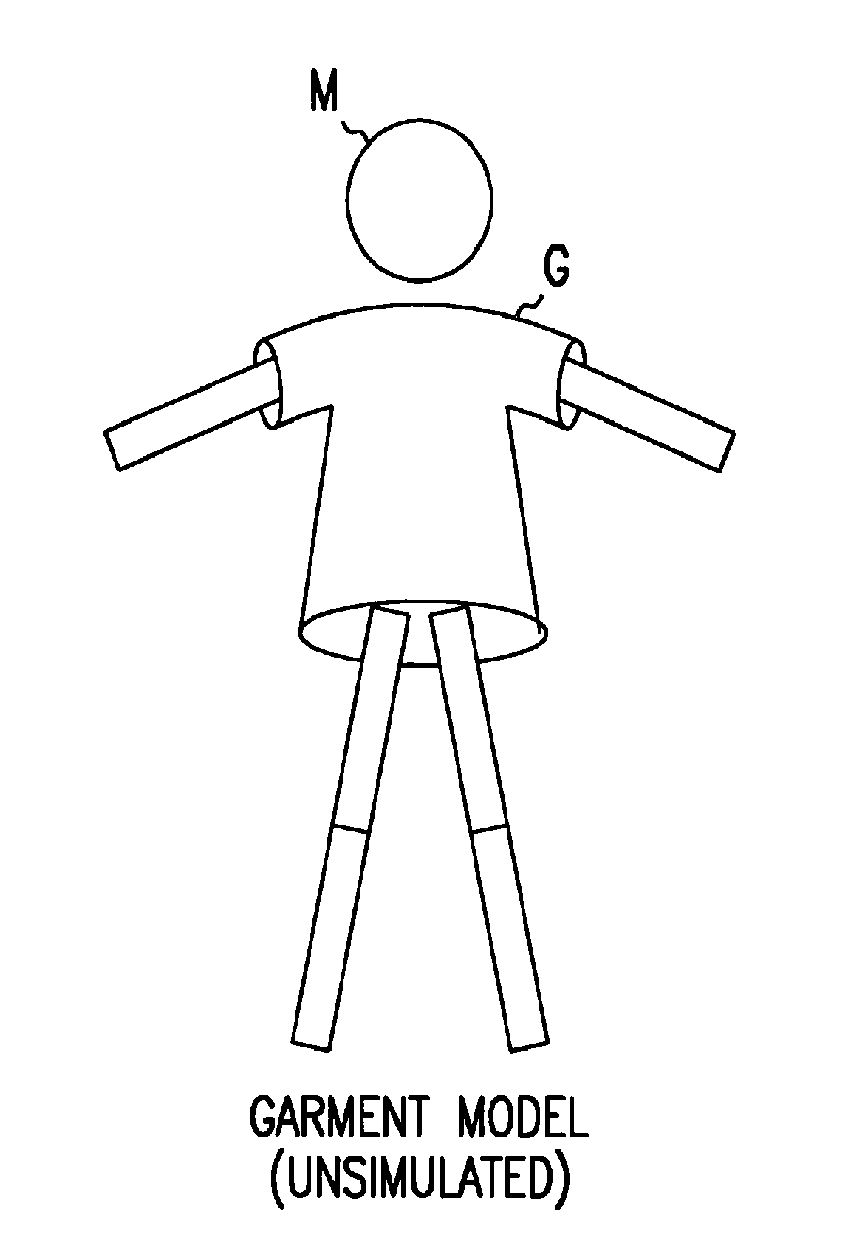

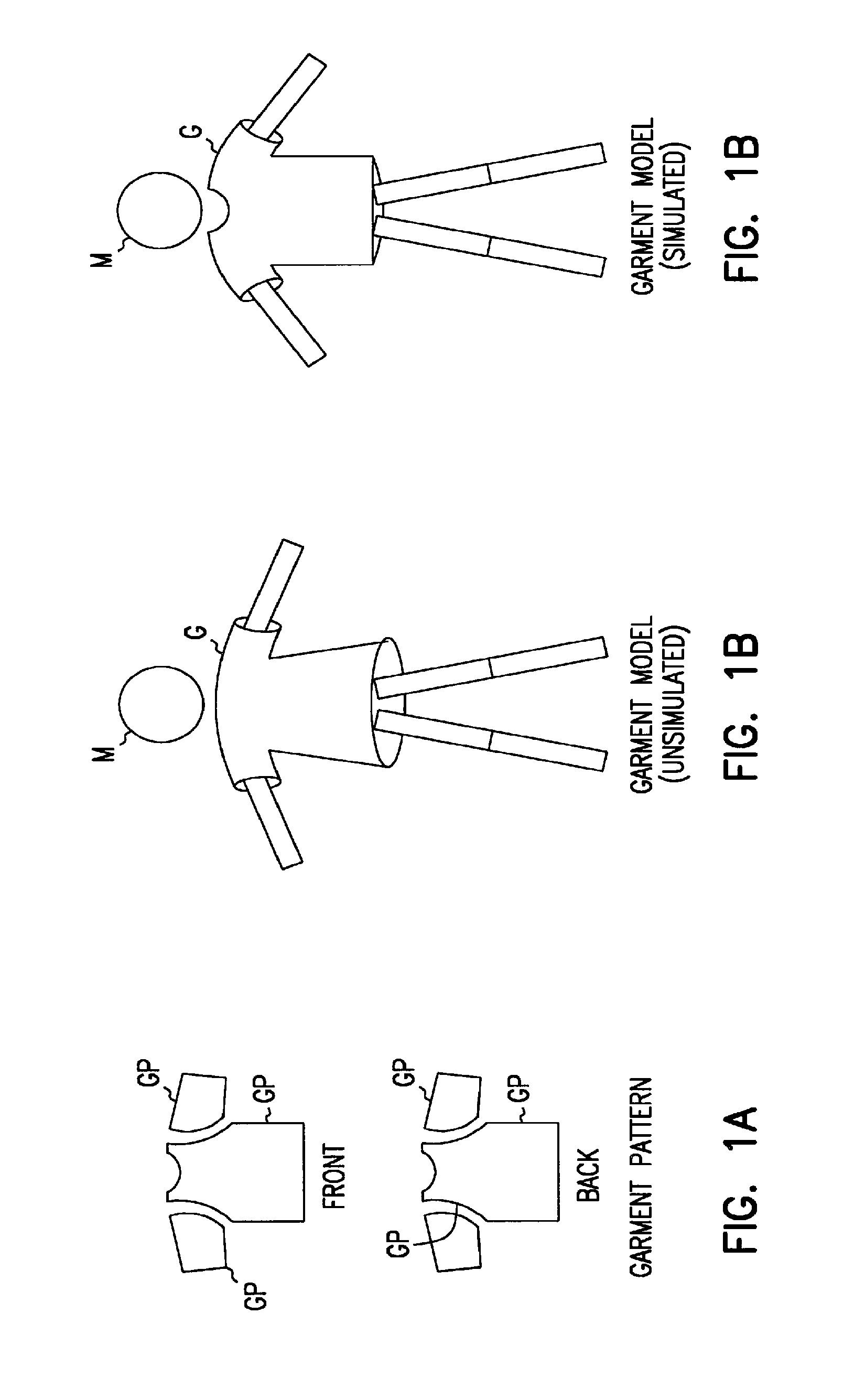

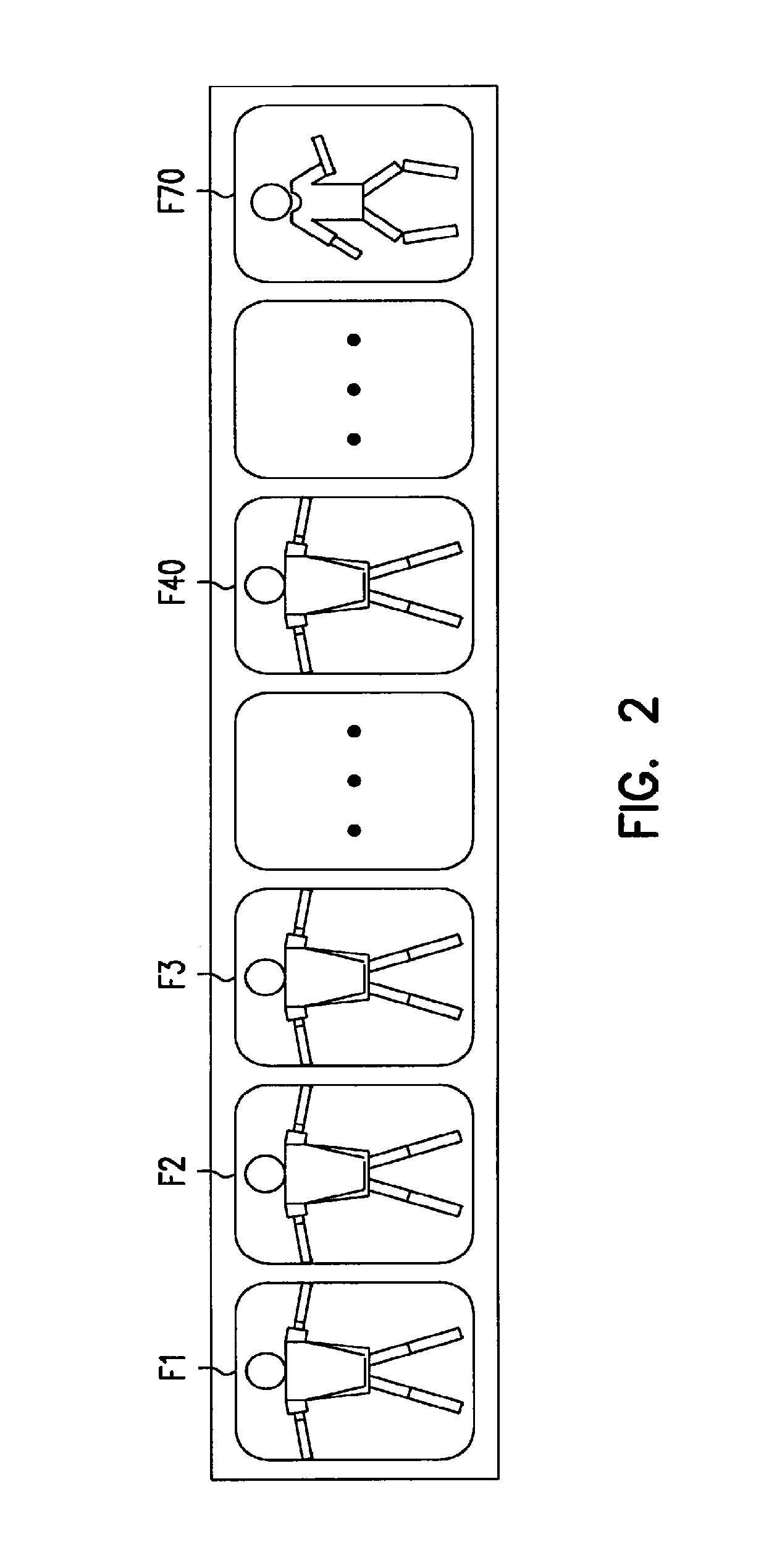

[0022] The present invention is a system and method for efficiently providing a computer-simulated dressing environment in which a user is presented with an image of a selected human figure wearing selected clothing. In such an environment, a user selects parameter values that define the form of the human figure, referred to herein as a virtual mannequin, that is to wear the selected clothing. Such parameters may be actual body measurements that define the form of the mannequin to varying degrees of precision, or may be the selection of a particular mannequin from a population of mannequins available for presentation to the user. One type of user may input parameter values that result in a virtual mannequin that is most representative of the user's own body, in order to more fully simulate the experience of actually trying on a selected garment. Other types of users may select mannequins on a different basis in order to obtain images such as for use in animated features or as an aid ...
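The two ways of specifying a virtual mannequin described above (supplying body measurements, or picking one mannequin from an available population) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the patent: the `Mannequin` class, the measurement names, the sample population, and in particular the nearest-match rule used to map measurements to a mannequin.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Mannequin:
    """Hypothetical record for one pre-built virtual mannequin."""
    mannequin_id: str
    measurements_cm: Dict[str, float]  # measurement names are illustrative


# Hypothetical population of mannequins available for presentation to the user.
POPULATION: List[Mannequin] = [
    Mannequin("m-small", {"height": 160.0, "chest": 84.0, "waist": 66.0, "hips": 90.0}),
    Mannequin("m-medium", {"height": 170.0, "chest": 92.0, "waist": 74.0, "hips": 98.0}),
    Mannequin("m-large", {"height": 180.0, "chest": 104.0, "waist": 88.0, "hips": 106.0}),
]


def select_by_id(mannequin_id: str, population: List[Mannequin] = POPULATION) -> Mannequin:
    """Direct selection of a particular mannequin from the population."""
    for mannequin in population:
        if mannequin.mannequin_id == mannequin_id:
            return mannequin
    raise KeyError(f"unknown mannequin: {mannequin_id}")


def select_by_measurements(
    measurements_cm: Dict[str, float],
    population: List[Mannequin] = POPULATION,
) -> Mannequin:
    """Pick the mannequin closest to the supplied body measurements.

    Assumption: closeness is the mean squared difference over the
    measurements the user actually supplied, so a user may give only a few
    values (lower precision) or many (higher precision).
    """
    if not measurements_cm:
        raise ValueError("at least one measurement is required")

    def distance(mannequin: Mannequin) -> float:
        shared = measurements_cm.keys() & mannequin.measurements_cm.keys()
        if not shared:
            return float("inf")
        total = sum(
            (measurements_cm[name] - mannequin.measurements_cm[name]) ** 2
            for name in shared
        )
        return total / len(shared)

    return min(population, key=distance)
```

For example, `select_by_measurements({"height": 172.0, "waist": 75.0})` resolves to the `m-medium` entry of this sample population, while `select_by_id("m-large")` bypasses measurements entirely, which is closer to what a user choosing a figure for animation might want.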
