Face detecting and tracking method and device and method and system for controlling rotation of robot head

Examples

embodiment 1

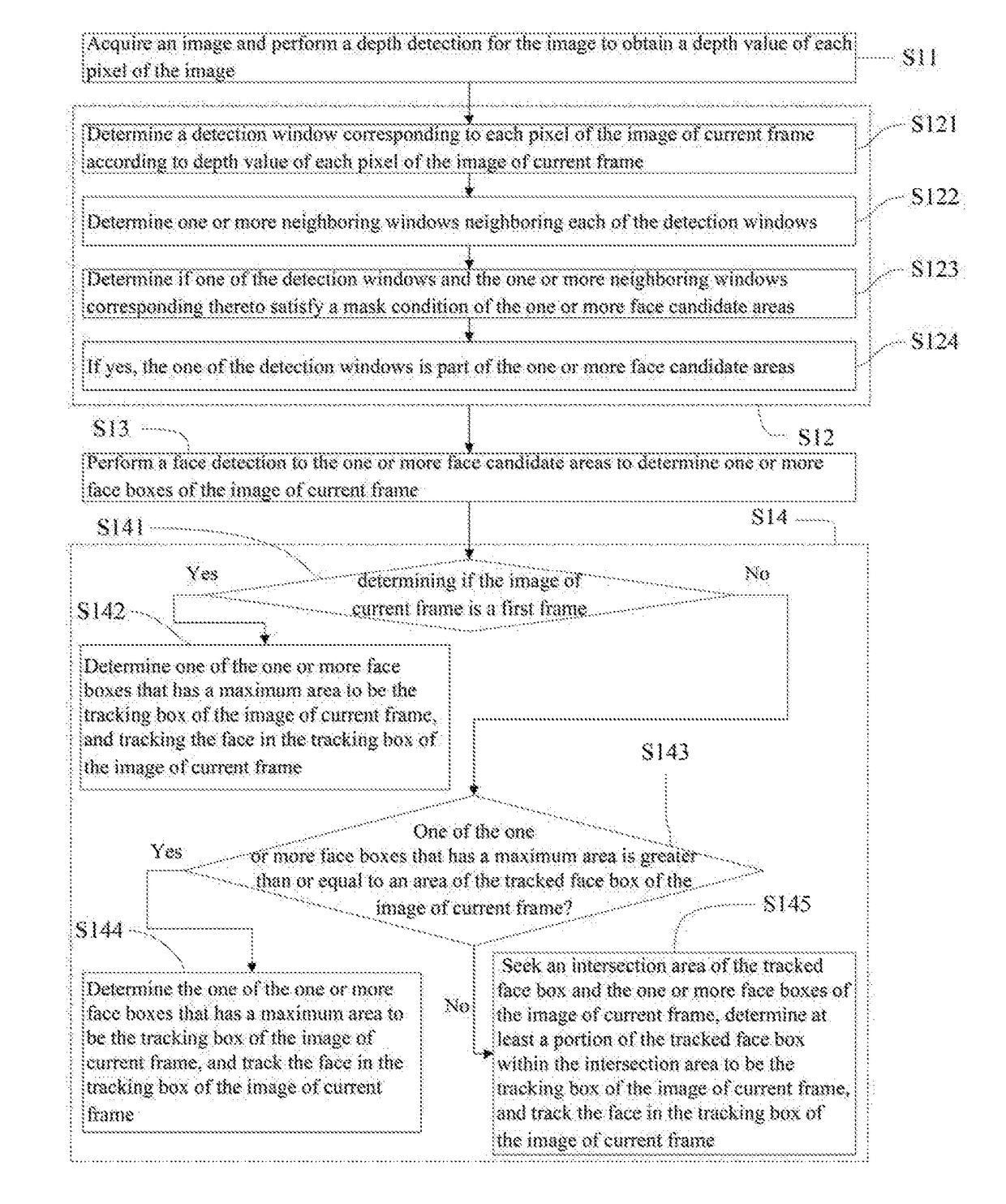

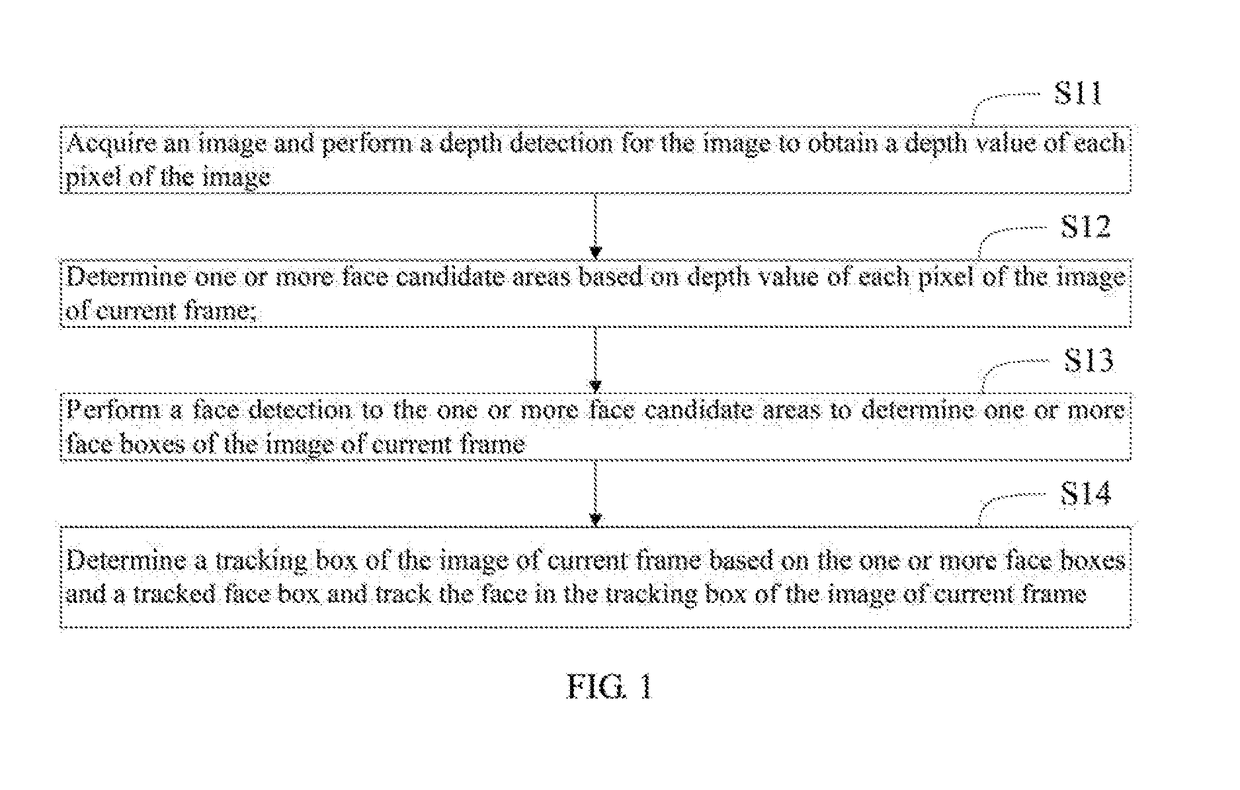

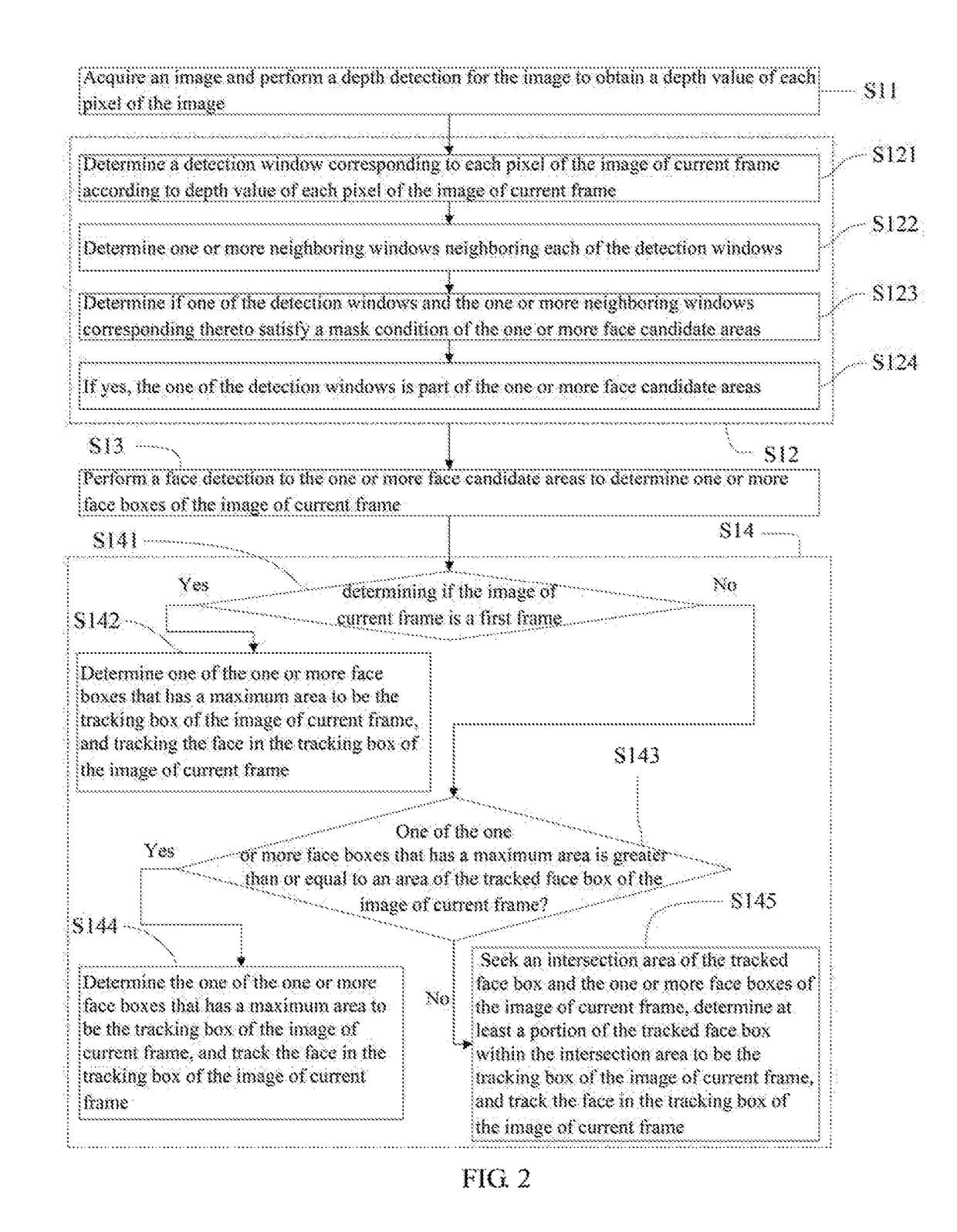

[0016] FIGS. 1 and 2 show flow charts of a human face detecting and tracking method according to one embodiment. As shown in FIG. 1, the method includes the following steps:

[0017] Step S11: acquiring an image and performing depth detection on the image to obtain a depth value for each pixel of the image. In the embodiment, each image includes I×J pixels, i.e., I rows and J columns of pixels. A pixel (i, j) refers to the pixel in column i, row j of the image, and the depth value of the pixel (i, j) is represented as d(i, j). One or more depth sensors are used to perform depth detection and processing on the captured images to acquire the depth value d(i, j) of each pixel (i, j).
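As a minimal sketch of the data layout this step produces, the depth values can be held in a two-dimensional array with one entry per pixel. The array size, the millimeter unit, the synthetic values, and the helper name `depth_at` are assumptions for illustration only; the source does not specify a sensor interface or a unit.

```python
import numpy as np

I, J = 4, 6  # assumed tiny image: I rows and J columns

# Synthetic depth map standing in for the sensor output (assumed unit: millimeters).
depth = np.full((I, J), 2000.0)
depth[1:3, 2:4] = 800.0  # a nearer object occupying rows 1-2, columns 2-3


def depth_at(depth_map: np.ndarray, i: int, j: int) -> float:
    """Return d(i, j), reading i as the column and j as the row, as in the text."""
    return float(depth_map[j, i])


print(depth.shape)            # (4, 6)
print(depth_at(depth, 2, 1))  # 800.0
print(depth_at(depth, 0, 0))  # 2000.0
```

Because NumPy indexes arrays as `[row, column]`, the helper swaps the order of its arguments so that calls read the same way as d(i, j) in the description.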

[0018] Step S12: determining one or more face candidate areas based on the depth value d(i, j) of each pixel (i, j) of the image of the current frame. A face candidate area is an area that may include a human face. A human face detecting method is used to detect a human face in the face candidate area, which increases t...
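The two-stage idea in Step S12 (narrow the search using depth, then run a face detector only inside the candidate area) can be sketched as follows. The depth-band threshold used here is a hypothetical stand-in and is not the candidate-selection rule of this embodiment, which is not reproduced in this excerpt. The function names, the `near`/`far` parameters, and the placeholder detector are likewise assumptions.

```python
import numpy as np


def candidate_box(depth_map, near, far):
    """Bounding box (top, left, bottom, right) of pixels with near <= depth <= far.

    Hypothetical stand-in for candidate-area selection; returns None if no pixel qualifies.
    """
    rows, cols = np.nonzero((depth_map >= near) & (depth_map <= far))
    if rows.size == 0:
        return None
    return int(rows.min()), int(cols.min()), int(rows.max()) + 1, int(cols.max()) + 1


def detect_in_candidate(image, box, detector):
    """Run the supplied detector only on the cropped candidate area."""
    top, left, bottom, right = box
    return detector(image[top:bottom, left:right])


# Synthetic 6x8 frame: background at 3000, one nearer region at 900 (assumed units).
depth = np.full((6, 8), 3000.0)
depth[1:4, 2:5] = 900.0
image = np.arange(48).reshape(6, 8)

box = candidate_box(depth, near=500.0, far=1500.0)
print(box)  # (1, 2, 4, 5)

# Placeholder detector: reports the size of the region it was asked to search.
print(detect_in_candidate(image, box, detector=lambda crop: crop.shape))  # (3, 3)
```

Passing the detector in as a callable keeps the sketch independent of any particular face detection library.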

embodiment 2

[0037] FIG. 4 shows a flow chart of a method for controlling rotation of a robot head. The robot is equipped with a camera device for capturing images, and a depth sensor and a processor for detecting depth of the captured images. The method for controlling rotation of a robot head is performed by the processor and includes the following steps:

[0038] Step S21: determining a tracking box of a first image, and a center of the tracking box, using the method described in embodiment 1. As shown in FIG. 5, the coordinates (x0, y0) of the center B of the tracking box of the first image are read in real time. It can be understood that, when tracking a face using the face detecting and tracking method of embodiment 1, first determining one or more face candidate areas according to the depth value of each pixel, and then performing face detection in the one or more face candidate areas, can reduce detection error and increase face detection accuracy. The face detecting and trackin...
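For the center B read in Step S21, the coordinates (x0, y0) follow directly from the geometry of the tracking box. The following is a minimal sketch, assuming the box is stored as a top-left corner plus a width and height in pixel coordinates. The `TrackingBox` type, its field names, and the sample numbers are hypothetical; the source states only that the center has coordinates (x0, y0).

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrackingBox:
    """Axis-aligned box: top-left corner (x, y), width w, height h, in pixels (assumed layout)."""
    x: float
    y: float
    w: float
    h: float

    def center(self) -> Tuple[float, float]:
        """Return (x0, y0), the midpoint of the box."""
        return (self.x + self.w / 2, self.y + self.h / 2)


box = TrackingBox(x=100, y=60, w=80, h=120)
x0, y0 = box.center()
print(x0, y0)  # 140.0 120.0
```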

embodiment 3

[0047] FIG. 6 shows a block diagram of a face detecting and tracking device 1. The face detecting and tracking device 1 includes a depth detecting and processing module 10 that is used to acquire an image and perform depth detection on the image to obtain a depth value for each pixel of the image. In the embodiment, each image includes I×J pixels, i.e., I rows and J columns of pixels. A pixel (i, j) refers to the pixel in column i, row j of the image, and the depth value of the pixel (i, j) is represented as d(i, j). One or more depth sensors are used to perform depth detection and processing on the captured images to acquire the depth value d(i, j) of each pixel (i, j).

[0048] The face detecting and tracking device 1 also includes a face candidate area determining module 20 that is used to determine one or more face candidate areas based on the depth value d(i, j) of each pixel (i, j) of the image of the current frame. A face candidate area is an area that may include a human face. A human ...
