Methods and systems for image and voice processing

Voice processing technology, applied in the field of systems and techniques for digital image and voice processing, addresses the inordinate time and large amount of computer resources that conventional techniques require to process computer-generated videos, and achieves the effect of reducing the number of images and minimizing or reducing errors.

Embodiment Construction

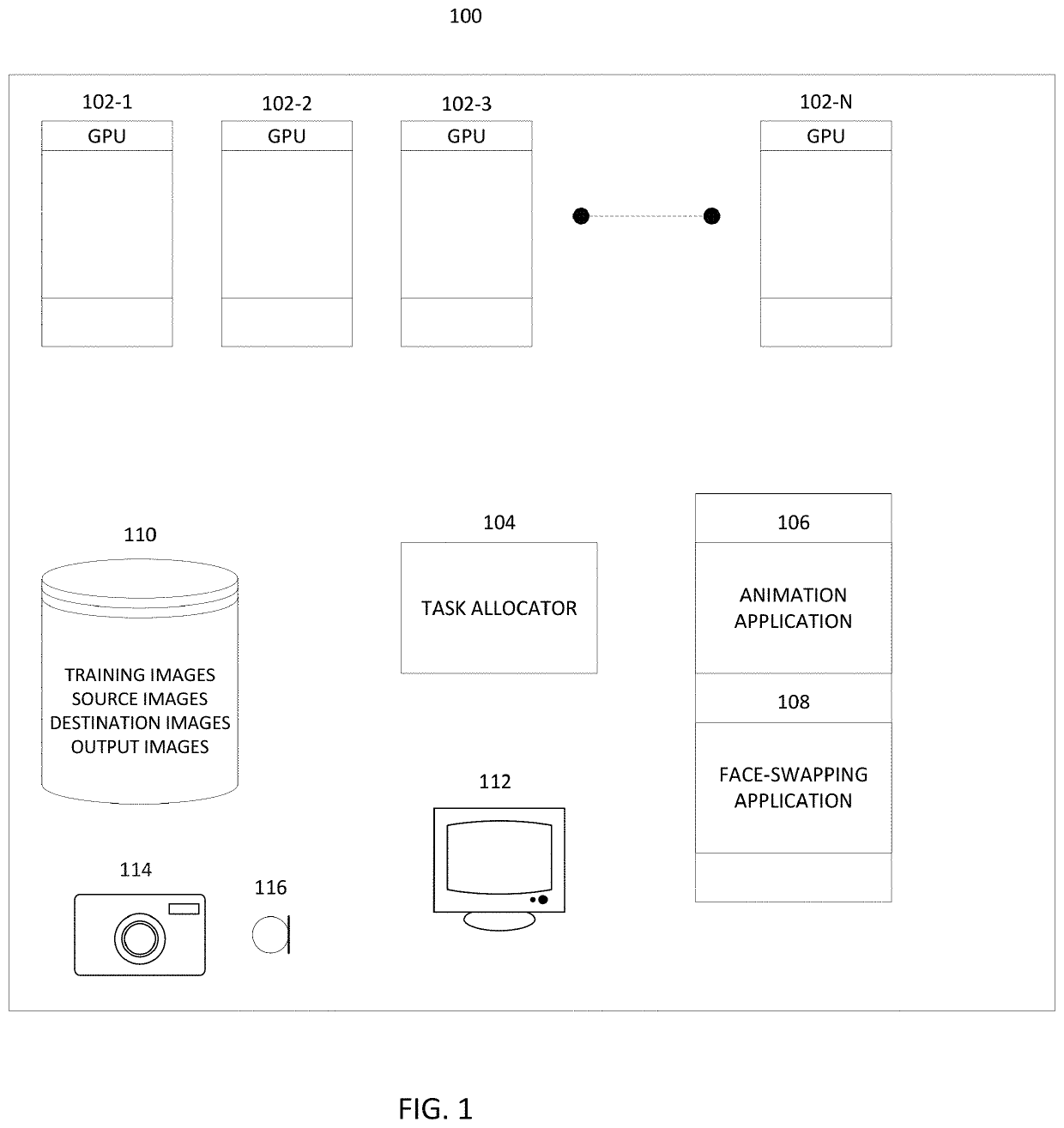

[0035] As discussed above, conventional techniques for processing computer-generated videos require large amounts of computer resources and take an inordinate amount of time. Further, certain relatively new applications for digital image processing, such as face-swapping, are becoming ever more popular, creating further demand for computer resources.

[0036] Conventionally, face-swapping is performed by capturing an image or a video of a person (sometimes referred to as the source) whose face is to be used to replace the face of another person in a destination video. For example, a face region may be recognized in both the source image and the destination image, the face region from the source may be used to replace the face region in the destination, and an output image / video is generated. The source face in the output preserves the expressions of the face in the original destination image / video (e.g., lip motions, eye motions, eyelid motions, eyebrow motions, nostril flaring, etc.). If insuff...
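The region-replacement step described in paragraph [0036] can be illustrated with a minimal sketch. This is not the method of the patent. It assumes the face regions have already been recognized and are supplied as bounding boxes, it uses small grayscale images stored as nested lists, and the names `crop`, `resize_nearest`, and `swap_face` are hypothetical. It performs only a naive paste with nearest-neighbor resizing, so it does not preserve the destination's expressions as a real face-swapping system would.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (top, left, height, width); assumed to come from a face detector


def crop(image: Image, box: Box) -> Image:
    """Return a copy of the pixels inside the bounding box."""
    top, left, height, width = box
    return [row[left:left + width] for row in image[top:top + height]]


def resize_nearest(patch: Image, height: int, width: int) -> Image:
    """Resize a patch to (height, width) using nearest-neighbor sampling."""
    src_h, src_w = len(patch), len(patch[0])
    return [
        [patch[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]


def swap_face(source: Image, source_box: Box,
              destination: Image, destination_box: Box) -> Image:
    """Paste the source face region over the destination face region.

    Hypothetical helper: a naive replacement with no blending and no
    expression transfer. The destination image is left unmodified.
    """
    top, left, height, width = destination_box
    face = resize_nearest(crop(source, source_box), height, width)
    output = [row[:] for row in destination]  # copy so the input is not mutated
    for r in range(height):
        output[top + r][left:left + width] = face[r]
    return output


if __name__ == "__main__":
    source = [[r * 10 + c for c in range(4)] for r in range(4)]
    destination = [[0] * 4 for _ in range(4)]
    for row in swap_face(source, (1, 1, 2, 2), destination, (0, 0, 2, 2)):
        print(row)
```

For video, the same replacement would be repeated per frame, which hints at why the conventional approach is costly in computer resources.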
