Reconstruction of a humanoid robot working scene based on multi-information fusion

A technology for reconstructing operation scenes through multi-information fusion, applied to manipulators, manufacturing tools, and related fields. It addresses large delays, a limited viewing angle, and the inability to truly reflect the robot's operating situation, and achieves the effect of maintaining continuity.


Embodiment Construction

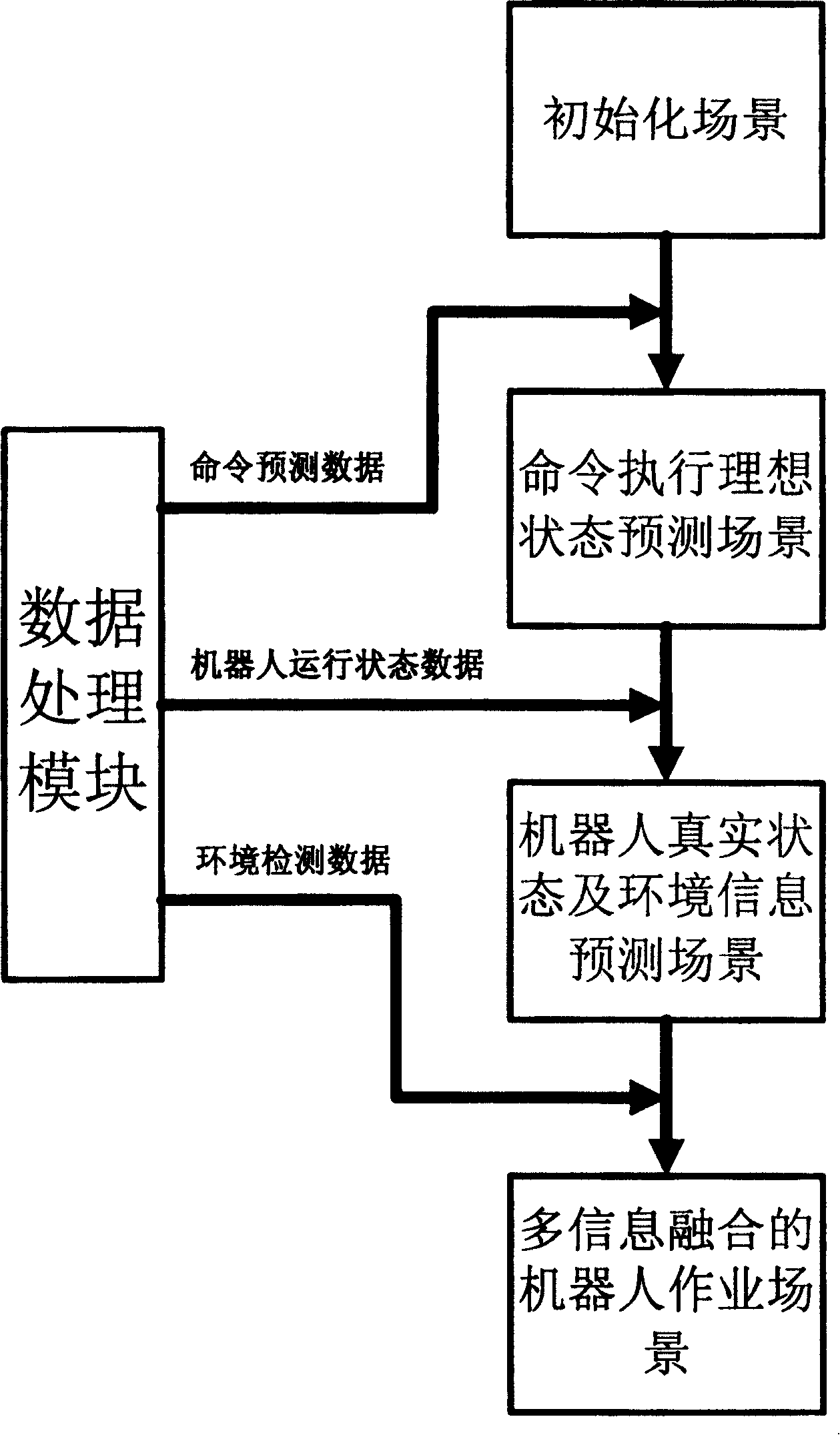

[0026] The working process of the humanoid robot working scene as a whole is as follows:

[0027] In the first step, the robot starts running and teleoperation control begins. The computer program is started to display the established scene model. The initialization data are used to determine the initial positions of the robot model and its operation target model, and the initial angles between the links of the robot model. This step produces the initialization interface of the scene.
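The initialization in this step can be illustrated with a minimal Python sketch. The patent text does not specify any data layout, so the `SceneState` class, the `initialize_scene` function, the dictionary keys, and the joint names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SceneState:
    """Hypothetical container for the state of the virtual scene."""
    robot_position: Vec3    # initial position of the robot model
    target_position: Vec3   # initial position of the operation target model
    joint_angles: Dict[str, float] = field(default_factory=dict)  # link angles, radians


def initialize_scene(init_data: dict) -> SceneState:
    """Build the initial scene state from initialization data (assumed format)."""
    return SceneState(
        robot_position=tuple(init_data["robot_position"]),
        target_position=tuple(init_data["target_position"]),
        # Copy the angles so later scene updates do not alter the input data.
        joint_angles=dict(init_data["joint_angles"]),
    )


# Example initialization data; all values are made up for illustration.
init_data = {
    "robot_position": [0.0, 0.0, 0.0],
    "target_position": [1.0, 0.5, 0.0],
    "joint_angles": {"shoulder": 0.0, "elbow": 0.5},
}
scene = initialize_scene(init_data)
```

A renderer would then draw the robot and target models from `scene` to produce the initialization interface.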

[0028] In the second step, the scene data processing module receives the operation commands issued by the teleoperator in real time, interprets them, and generates predicted trajectory data. The prediction data drive each model in the virtual scene to form a three-dimensional virtual scene. The scene generated in this step shows the ideal motion of the robot executing the operator's commands.
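As a rough sketch of how a command might be turned into predicted trajectory data, the Python example below linearly interpolates joint angles from the current pose to a commanded pose. The patent does not state which prediction method is used, so linear interpolation is an assumption, and the names `predict_trajectory` and `drive_model` are hypothetical.

```python
from typing import Dict, Iterator, List

Pose = Dict[str, float]  # joint name -> angle in radians


def predict_trajectory(current: Pose, command: Pose, steps: int) -> List[Pose]:
    """Interpolate linearly from the current pose to the commanded pose.

    Joints that the command does not mention keep their current angle.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    trajectory = []
    for k in range(1, steps + 1):
        t = k / steps  # fraction of the motion completed at this frame
        frame = {
            name: angle + t * (command.get(name, angle) - angle)
            for name, angle in current.items()
        }
        trajectory.append(frame)
    return trajectory


def drive_model(model_angles: Pose, trajectory: List[Pose]) -> Iterator[Pose]:
    """Apply each predicted frame to the virtual model and yield a snapshot."""
    for frame in trajectory:
        model_angles.update(frame)
        yield dict(model_angles)


# Example: the operator commands the elbow to 1.0 rad over four frames.
current_pose = {"shoulder": 1.0, "elbow": 0.0}
trajectory = predict_trajectory(current_pose, {"elbow": 1.0}, steps=4)
snapshots = list(drive_model(dict(current_pose), trajectory))
```

Each snapshot corresponds to one frame of the ideal motion that the virtual scene would display before any feedback from the real robot is available.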

[0029] In the third step, the sensor of the robot i...
