Human eye positioning and human eye state recognition method

A human eye positioning and eye state recognition technique, applied in fields such as character and pattern recognition, instruments, and computer components. It addresses problems such as low accuracy, strong external interference, and low gray values, and achieves improved accuracy and speed, a narrower detection range, and reduced complexity.

Detailed Description of the Embodiments

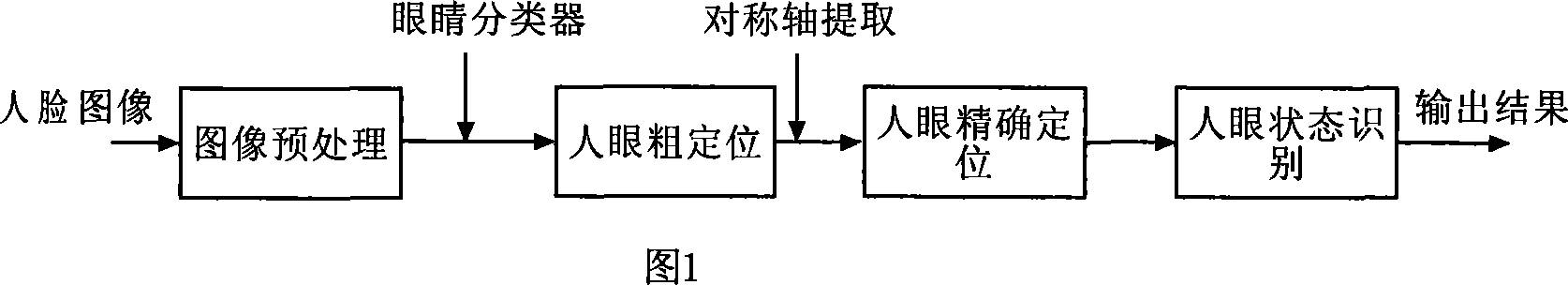

[0035] With reference to the accompanying drawings, Figure 1 shows the block diagram of the human eye positioning and human eye state recognition method proposed by the present invention. The specific implementation steps are as follows:

[0036] Step 1: Face image preprocessing;

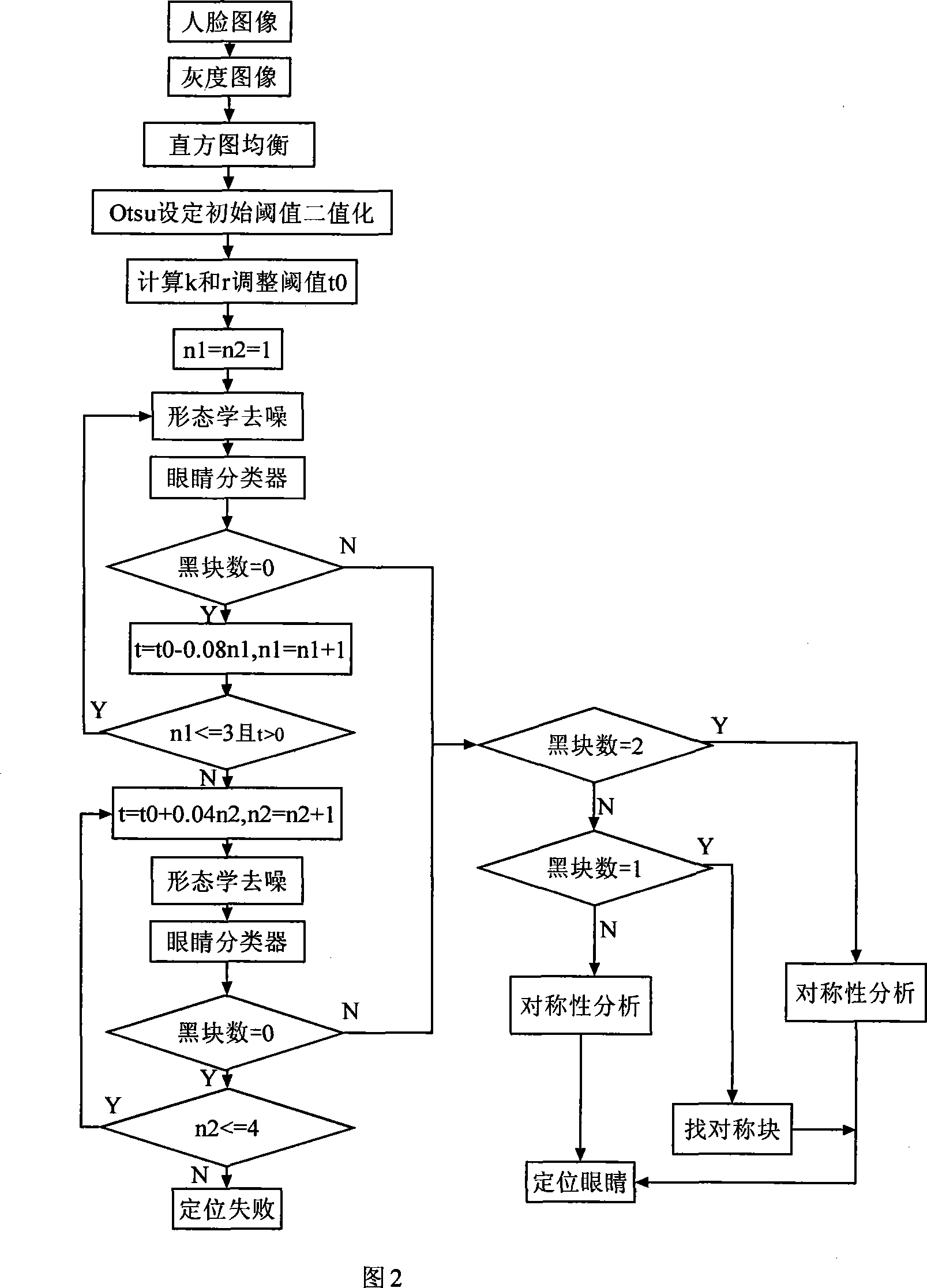

[0037] Step 2: Coarse positioning of the human eye;

[0038] Step 3: Precise positioning of the human eye;

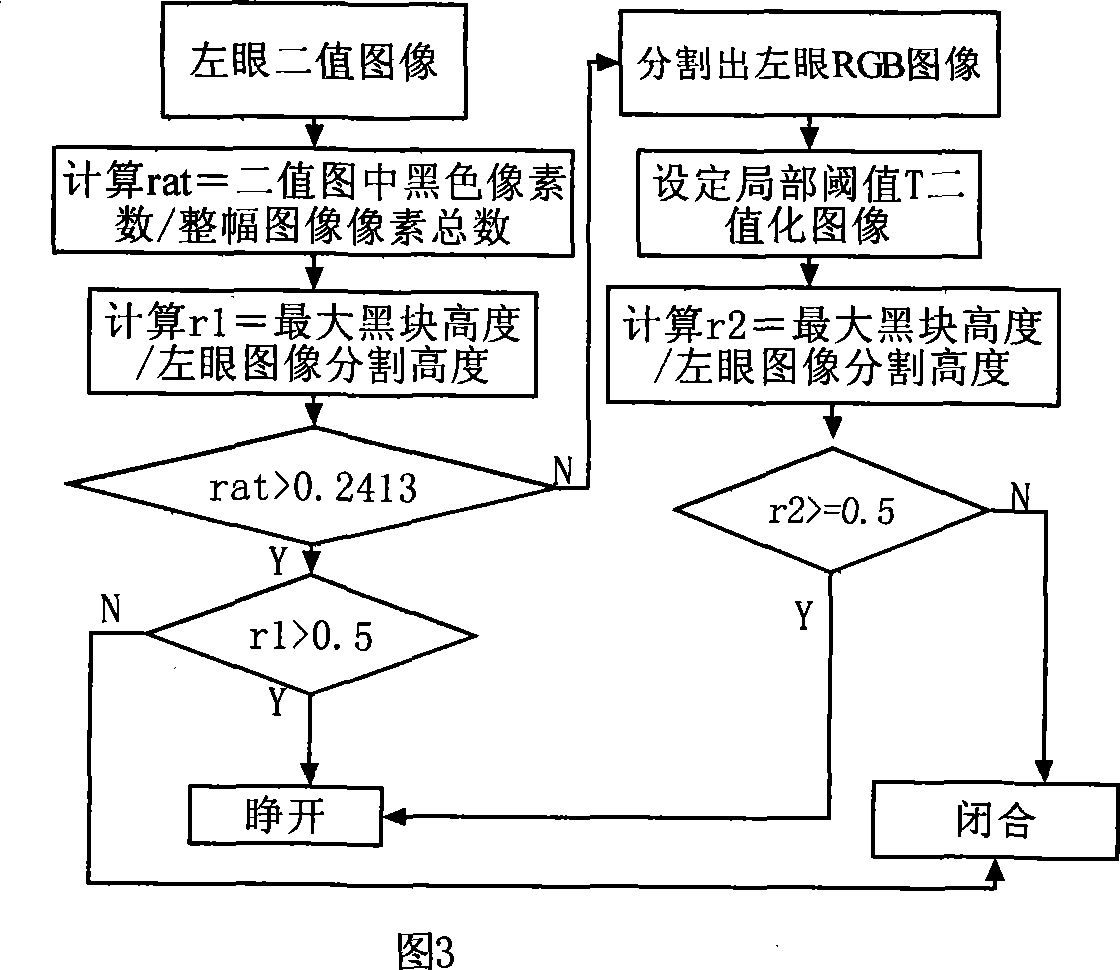

[0039] Step 4: Human eye state recognition.

[0040] The specific implementation steps of Step 1 are as follows:

[0041] The first step is to convert the located color face image to grayscale;

[0042] The camera captures color images with R, G, and B components. To meet the needs of subsequent image processing, the color image must be converted to a grayscale image, in which each pixel takes one of 256 gray levels from black to white and carries no color information. The conversion formula is: v = 0.259R + 0.587G + 0.144B, where v represents the conver...
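The weighted-sum conversion in paragraph [0042] can be illustrated with a minimal Python sketch. The function name `to_gray`, the nested-list image layout, and the rounding and clamping to 0..255 are assumptions made for illustration and are not taken from the patent text. The coefficients are used as quoted; they sum to 0.990, whereas the widely used ITU-R BT.601 luma weights are 0.299, 0.587, and 0.114, so the sketch exposes the weights as a parameter rather than fixing either set.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each component in 0..255

# Weights as quoted in paragraph [0042]; they sum to 0.990.
SOURCE_WEIGHTS = (0.259, 0.587, 0.144)
# Widely used ITU-R BT.601 luma weights, given for comparison; they sum to 1.0.
BT601_WEIGHTS = (0.299, 0.587, 0.114)


def to_gray(
    image: Sequence[Sequence[Pixel]],
    weights: Tuple[float, float, float] = SOURCE_WEIGHTS,
) -> List[List[int]]:
    """Convert a row-major RGB image to gray levels via v = wr*R + wg*G + wb*B."""
    wr, wg, wb = weights
    gray: List[List[int]] = []
    for row in image:
        gray_row: List[int] = []
        for r, g, b in row:
            v = wr * r + wg * g + wb * b
            # Round to the nearest integer and clamp to the 256-level range.
            gray_row.append(max(0, min(255, int(round(v)))))
        gray.append(gray_row)
    return gray


if __name__ == "__main__":
    sample = [[(0, 0, 0), (255, 255, 255)], [(255, 0, 0), (0, 0, 255)]]
    print(to_gray(sample))
    print(to_gray(sample, BT601_WEIGHTS))
```

With the quoted weights a pure white pixel maps slightly below 255 because the coefficients do not sum to 1; passing `BT601_WEIGHTS` maps white to 255.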
