A 3D face animation production method based on region segmentation and segmented learning

A 3D face animation technology based on region segmentation, applicable to animation production, 3D image processing, image data processing, and related fields, which addresses the problem that facial animation is difficult to transfer.

Embodiment

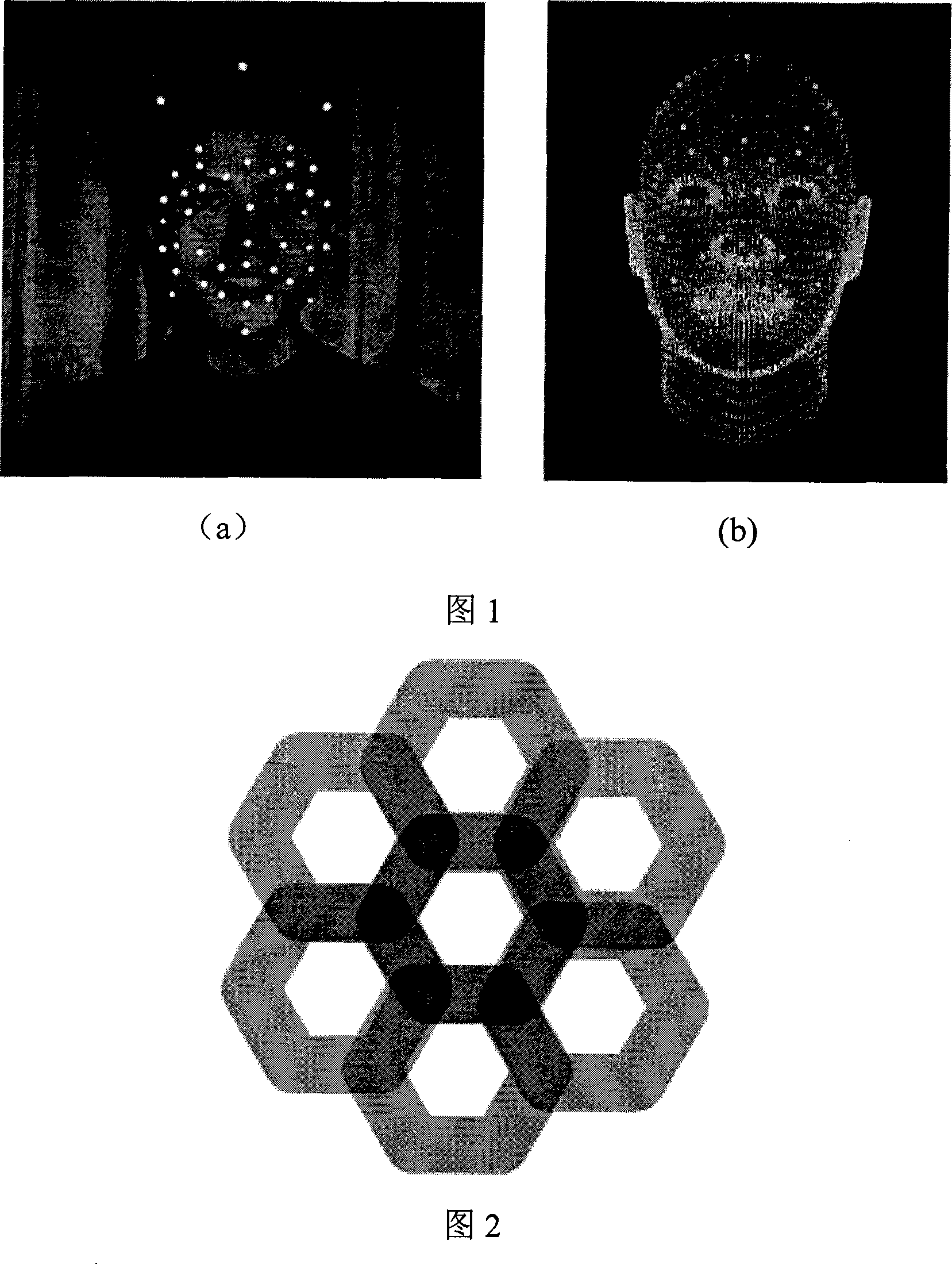

[0043] First, facial motion data is captured with the Hawk three-dimensional motion capture system from the American company Motion Analysis, with the main feature points of the performer's face marked with reflective material, as shown in Figure 1 (left); alternatively, previously captured 3D motion data is read from a computer storage device. In addition, a 3D face mesh model is built with a 3DCamega 3D scanner, or an existing 3D face mesh model is read from a computer storage device, and a 3D software package is used to render the mesh model on the display. The control points corresponding to the motion capture data are then calibrated on the 3D face mesh through a computer input device: all or some of the marker points in the motion capture data are marked at their corresponding positions on the 3D face surface mesh, which establishes the correspondence between the motion capture data and the 3D surface mesh, as shown in Figure 1 (right).
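The correspondence described above can be thought of as a table from marker points to mesh vertices. The text describes marking these control points by hand through an input device; the sketch below instead uses a nearest-vertex lookup, a swapped-in technique that might serve only as a starting guess for an operator to correct. It assumes the marker positions have already been aligned to the mesh coordinate frame. The function names, marker names, and toy coordinates are all hypothetical and do not come from the patent.

```python
import math


def nearest_vertex(point, vertices):
    """Return the index of the mesh vertex closest to a 3D point."""
    return min(range(len(vertices)), key=lambda i: math.dist(point, vertices[i]))


def build_correspondence(markers, vertices):
    """Map each marker name to the index of its nearest mesh vertex.

    markers:  dict of marker name -> (x, y, z), assumed to be in the mesh frame
    vertices: list of (x, y, z) mesh vertex positions
    """
    return {name: nearest_vertex(pos, vertices) for name, pos in markers.items()}


# Hypothetical toy data: four mesh vertices and two capture markers.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.2)]
markers = {"brow_left": (0.1, 0.9, 0.0), "mouth_right": (0.9, 0.1, 0.05)}

correspondence = build_correspondence(markers, vertices)
print(correspondence)  # {'brow_left': 2, 'mouth_right': 1}
```

Storing vertex indices rather than positions keeps the mapping valid when the mesh later deforms, since each marker's per-frame motion can then be applied to the same control vertex.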

[0044] After buil...
