System and method for mutual translation between sign language and speech

A sign language and speech technology, applied in the field of image pattern recognition, that addresses problems such as weak time-series processing ability and achieves low cost, a high recognition rate, and convenient use.

Embodiment Construction

[0031] The present invention is described in further detail below with reference to the accompanying drawings:

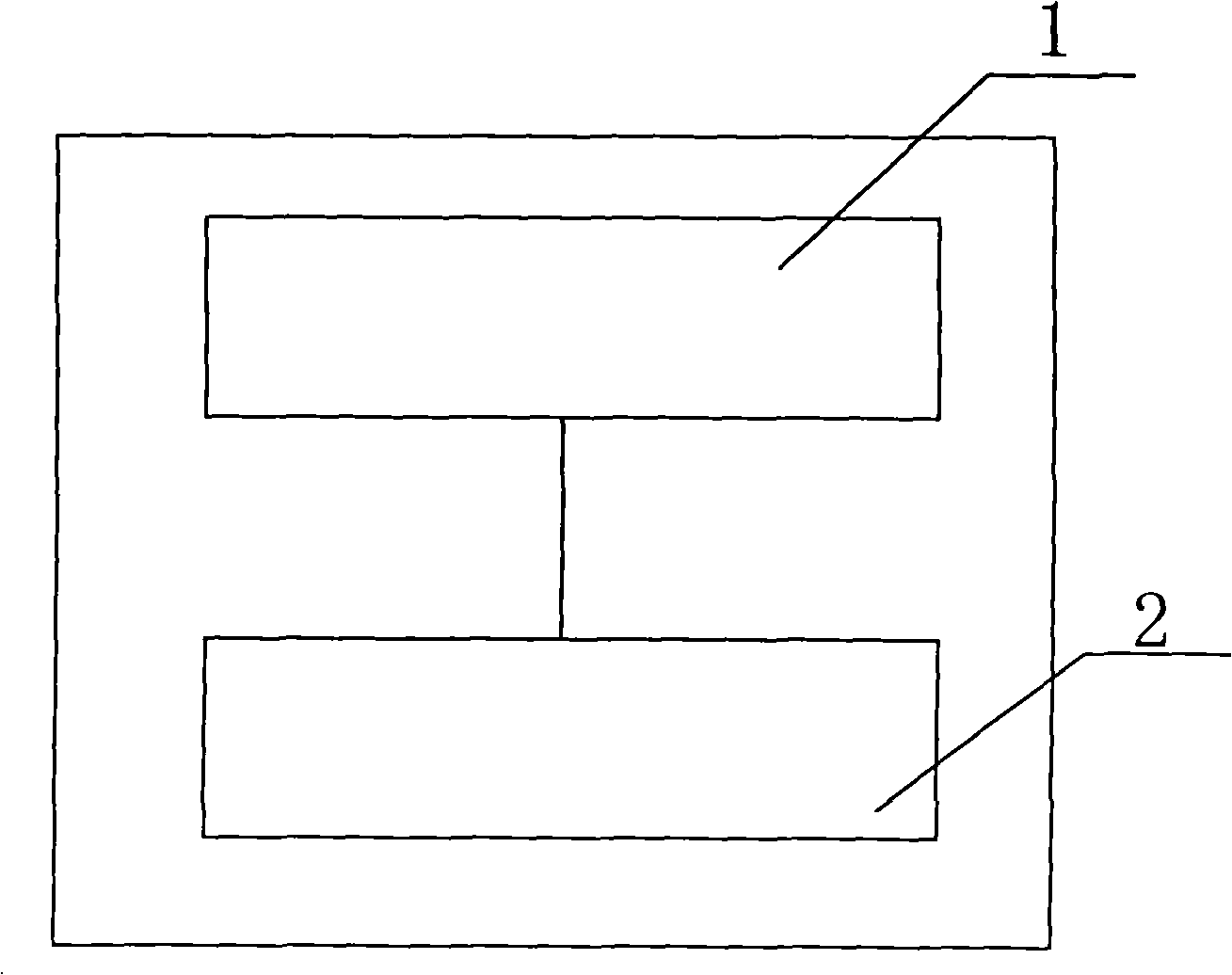

[0032] Referring to Figures 1 to 6, to meet the requirement of two-way interaction between hearing people and deaf-mute people, the present invention divides the whole system into two subsystems: a vision-based sign language recognition subsystem and a speech translation subsystem.

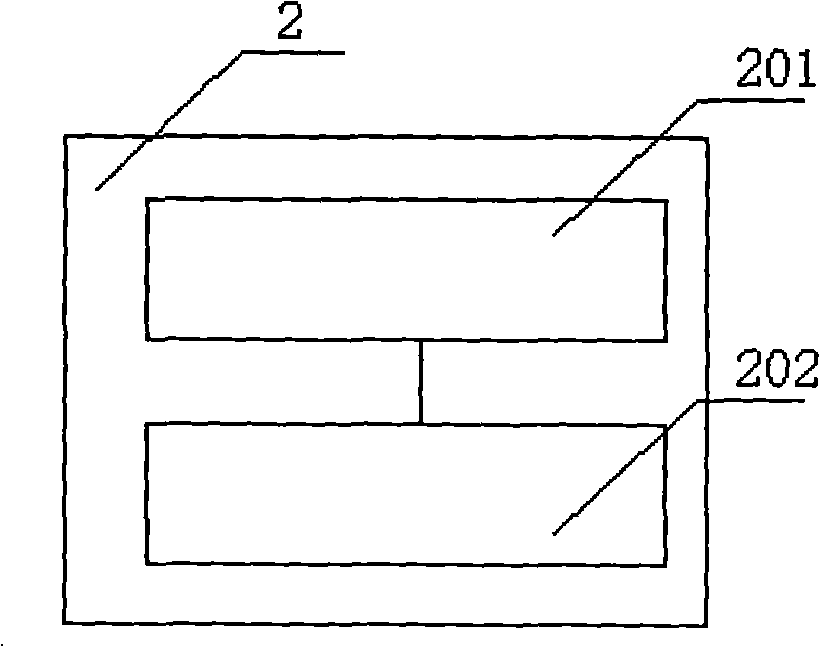

[0033] The system for mutual translation between sign language and speech consists of a vision-based sign language recognition subsystem 1 and a speech translation subsystem 2.

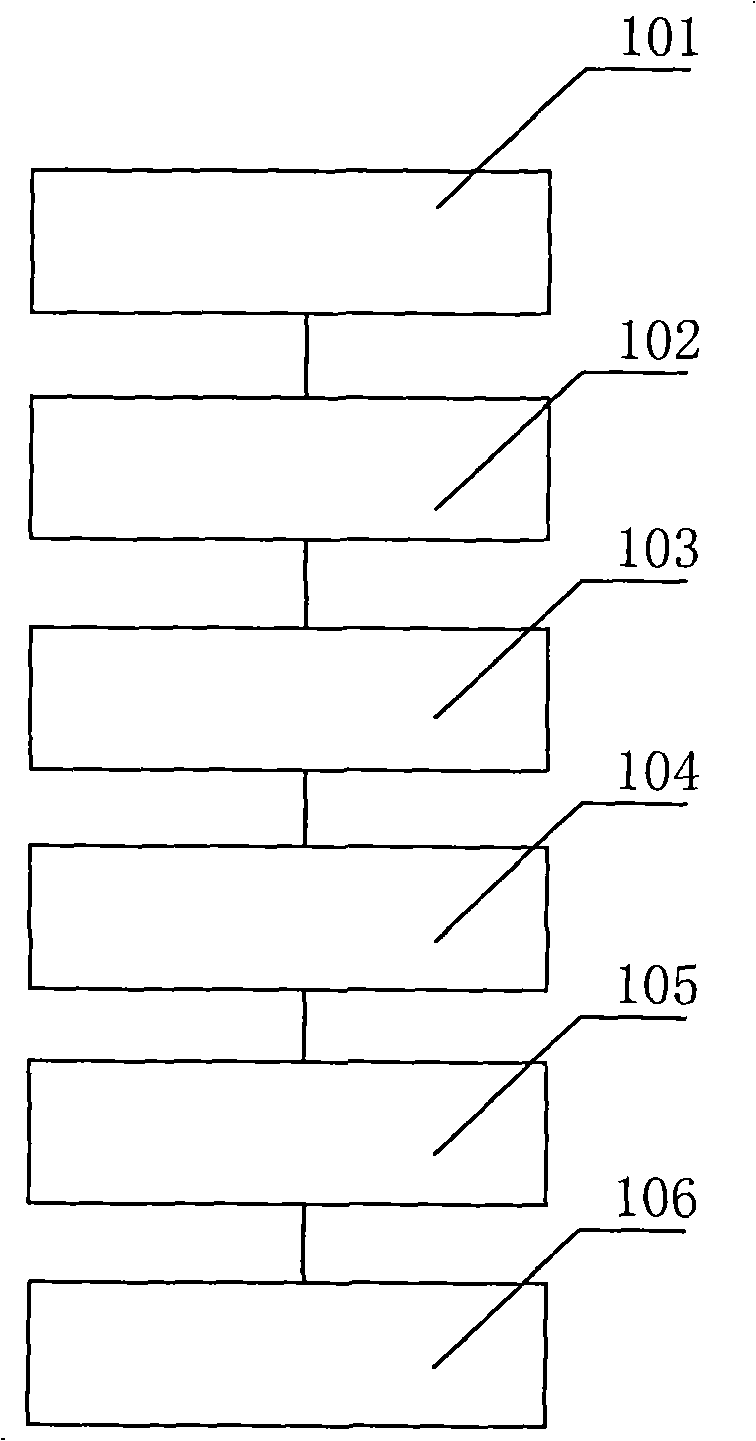

[0034] The vision-based sign language recognition subsystem 1 consists of a gesture image acquisition module 101, an image preprocessing module 102, an image feature extraction module 103, a sign language model 104, a continuous dynamic sign language recognition module 105, and a Chinese sounding module 106. The gesture image acquisition module 101 collects gesture video data and inputs it into image preprocessi...
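The module chain listed in [0034] can be pictured as a simple staged pipeline. The following is only a minimal sketch, not the patented implementation: the source text is truncated after the hand-off from module 101 to module 102, so the order of the remaining stages is an assumption based on the order in which the modules are listed. Every function name, the `Frame` type, the thresholding, the foreground-fraction feature, and the nearest-template matching are hypothetical stand-ins chosen for illustration.

```python
from typing import Dict, List

Frame = List[List[int]]  # hypothetical grayscale frame, pixel values 0-255


def acquire_frames() -> List[Frame]:
    """Stand-in for module 101: returns a tiny hard-coded 'video'."""
    dark = [[0, 0], [0, 0]]
    half = [[255, 255], [0, 0]]
    full = [[255, 255], [255, 255]]
    return [dark, dark, half, full, full]


def preprocess(frames: List[Frame], threshold: int = 128) -> List[Frame]:
    """Stand-in for module 102: binarize each frame (assumed step)."""
    return [[[1 if p >= threshold else 0 for p in row] for row in f]
            for f in frames]


def extract_features(frames: List[Frame]) -> List[float]:
    """Stand-in for module 103: fraction of foreground pixels per frame."""
    return [sum(map(sum, f)) / (len(f) * len(f[0])) for f in frames]


def recognize(features: List[float], sign_model: Dict[str, float]) -> List[str]:
    """Stand-in for module 105 using model 104: pick the nearest template
    for each frame and merge consecutive repeats into one word."""
    words: List[str] = []
    for x in features:
        word = min(sign_model, key=lambda w: abs(sign_model[w] - x))
        if not words or words[-1] != word:
            words.append(word)
    return words


def to_speech_text(words: List[str]) -> str:
    """Stand-in for module 106: returns the text that would be voiced."""
    return " ".join(words)


def run_recognition_subsystem(sign_model: Dict[str, float]) -> str:
    """Chain the stages in the assumed order 101 -> 102 -> 103 -> 105 -> 106."""
    frames = acquire_frames()
    binary = preprocess(frames)
    features = extract_features(binary)
    words = recognize(features, sign_model)
    return to_speech_text(words)


# Hypothetical toy model (module 104): one template feature value per word.
SIGN_MODEL = {"hello": 0.0, "thank": 0.5, "you": 1.0}
```

Calling `run_recognition_subsystem(SIGN_MODEL)` passes the toy frames through each stage in turn. In a real system each stand-in would be replaced by the corresponding camera capture, image processing, trained model, and speech output component.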
