Vehicle speed measurement method, supervisory computer and vehicle speed measurement system

A measuring method, technology of monitoring machine

Examples

Embodiment 1

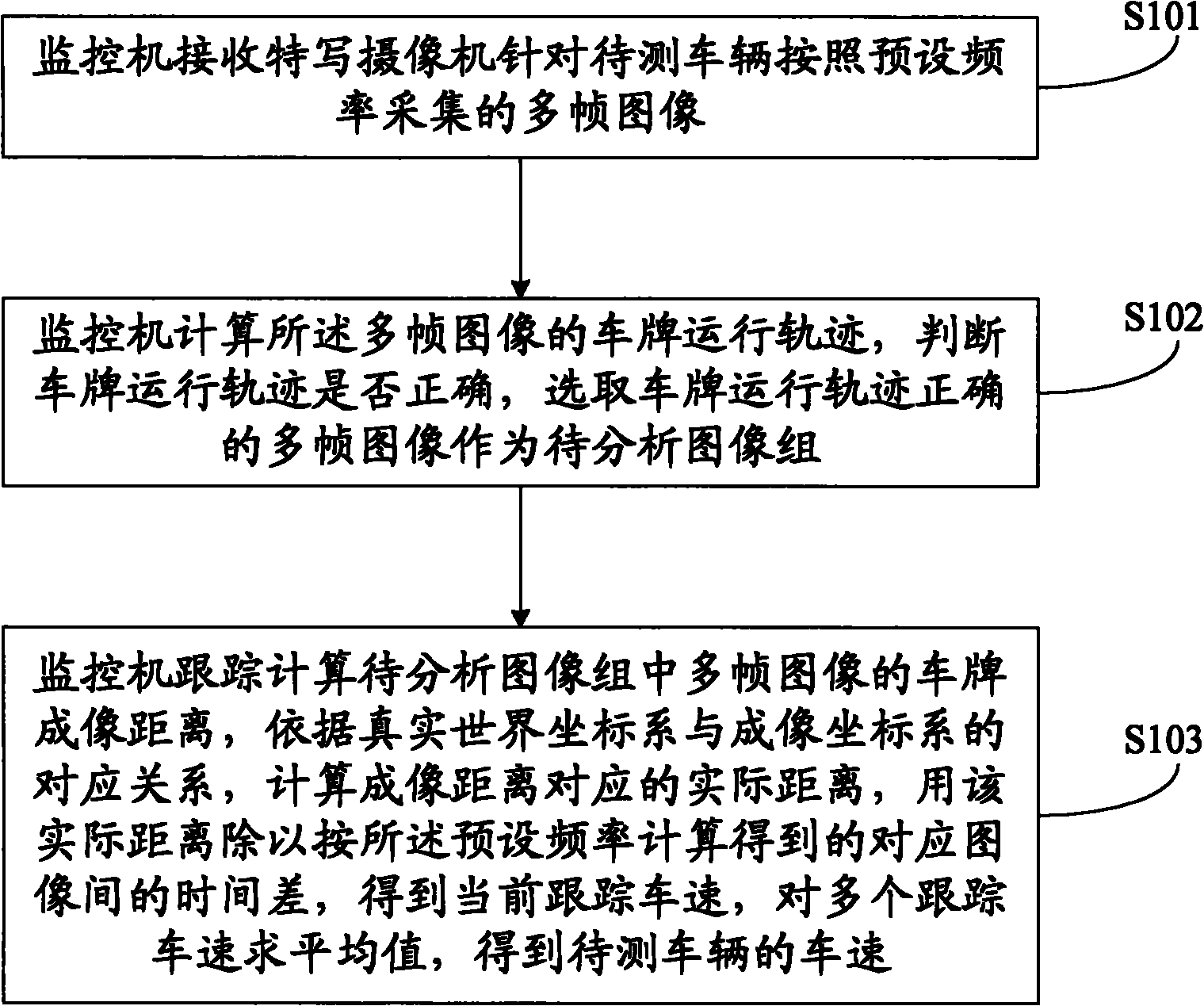

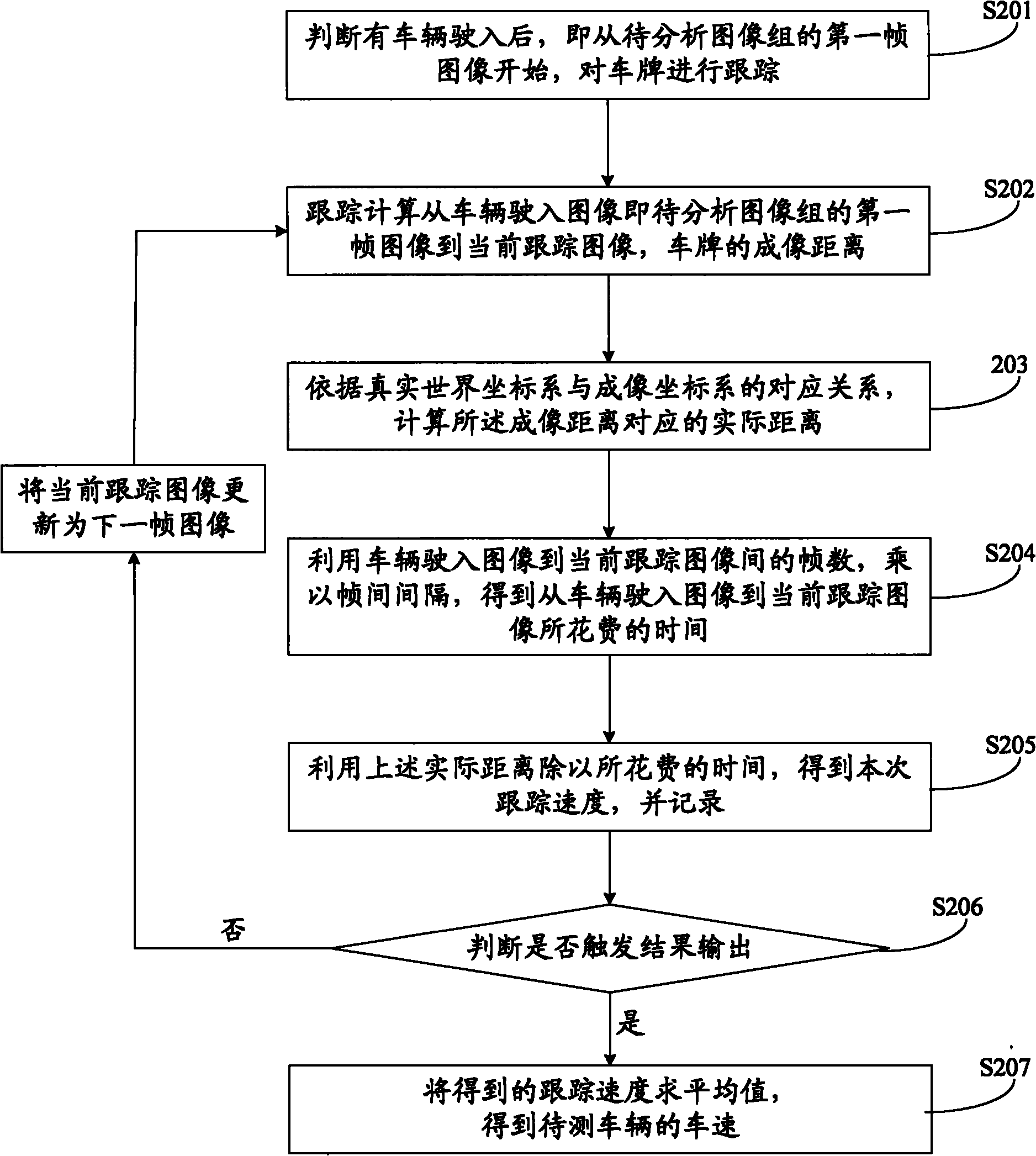

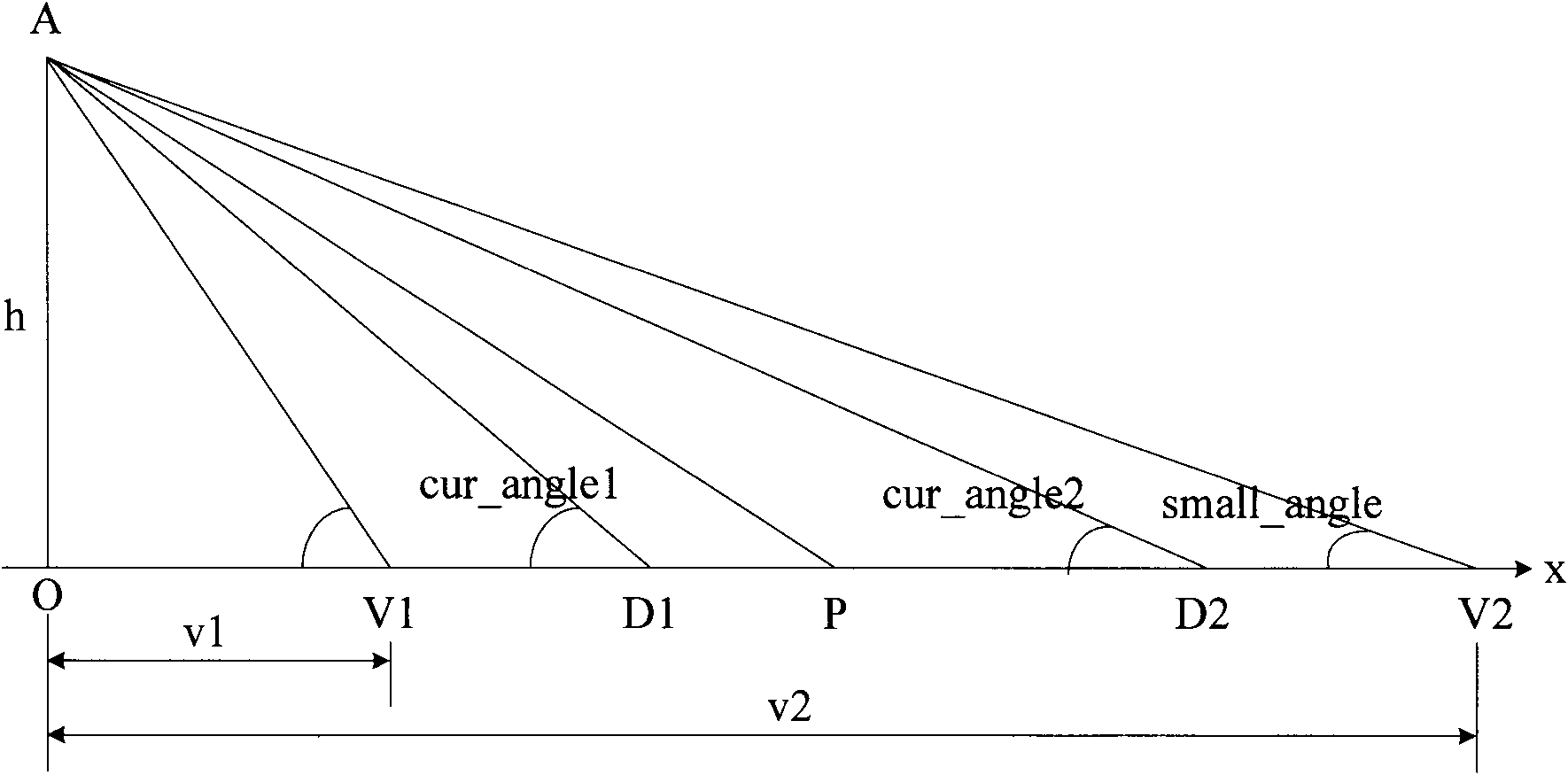

[0065] Referring to Figure 1, a method for measuring vehicle speed provided by an embodiment of the present invention comprises the following steps:

[0066] Step S101: The monitoring machine receives multiple frames of images of the vehicle under test, collected by the close-up camera at a preset frequency.

[0067] Because the vehicle passes through the field of view very quickly, generally in less than 1 second, the close-up camera preferably collects 25 frames of images per second to meet real-time requirements. The intervals between frames should be as equal as possible, so that the elapsed time can be estimated from the number of frames over which the vehicle passes.

[0068] Step S102: The monitoring machine calculates the license plate trajectory across the multi-frame images, judges whether the trajectory is correct, and selects the multi-frame images with the correct r...
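The visible text does not state how a license plate trajectory is judged "correct". Purely as an illustration, the sketch below assumes one plausible criterion: the plate center moves in a single vertical direction across frames and never jumps more than a threshold between consecutive frames. The function name `trajectory_is_plausible`, the `max_jump_px` threshold, and the criterion itself are hypothetical and are not taken from the patent.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) plate center in pixels; hypothetical representation


def trajectory_is_plausible(centers: List[Point], max_jump_px: float = 80.0) -> bool:
    """Assumed criterion, not the patent's: one-directional motion with bounded steps."""
    if len(centers) < 3:
        return False  # too few points to judge a trajectory

    pairs = list(zip(centers, centers[1:]))

    # Vertical displacement between consecutive frames.
    dys = [b[1] - a[1] for a, b in pairs]
    same_direction = all(dy > 0 for dy in dys) or all(dy < 0 for dy in dys)

    # Euclidean step length between consecutive frames must stay under the threshold.
    bounded = all(
        ((b[0] - a[0]) ** 2 + (b[1] - a[1]) ** 2) ** 0.5 <= max_jump_px
        for a, b in pairs
    )
    return same_direction and bounded
```

A frame sequence that fails such a check would simply be excluded from the image group to be analyzed; the threshold would need tuning for the actual camera geometry.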

Embodiment 2

[0132] Referring to Figure 4, a monitoring machine provided by an embodiment of the present invention is used for vehicle speed measurement and includes:

[0133] Receiving module 401: receives multi-frame images of the vehicle under test, collected by the close-up camera at a preset frequency;

[0134] Image selection module 402: calculates the license plate trajectory across the multi-frame images, determines whether the trajectory is correct, and selects the multi-frame images with a correct license plate trajectory as the image group to be analyzed;

[0135] Vehicle speed calculation module 403: tracks the license plate across the frames in the image group to be analyzed and calculates its imaging distance, calculates the actual distance corresponding to the imaging distance according to the correspondence between the real-world coordinate system and the imaging coordinate system, and uses the actual dist...
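The following is a minimal sketch of how a speed calculation of this kind might look, not the patent's actual procedure. It assumes that the correspondence between the imaging and real-world coordinate systems can be expressed as a 3x3 planar homography `h` mapping pixels to meters, that the frames are equally spaced at 25 frames per second as Embodiment 1 prefers, and that speed is the actual distance divided by the elapsed time. The names `image_to_road` and `speed_kmh` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]  # assumed 3x3 homography, pixels -> meters


def image_to_road(h: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Map pixel (u, v) to plane coordinates in meters using homography h."""
    x = h[0][0] * u + h[0][1] * v + h[0][2]
    y = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    if w == 0:
        raise ValueError("pixel maps to a point at infinity")
    return x / w, y / w


def speed_kmh(h: Matrix3, plate_px: List[Tuple[float, float]], fps: float = 25.0) -> float:
    """Estimate speed from plate positions in consecutive, equally spaced frames."""
    if len(plate_px) < 2:
        raise ValueError("need at least two frames")
    if fps <= 0:
        raise ValueError("fps must be positive")

    # Convert each imaged plate position to real-world coordinates.
    road = [image_to_road(h, u, v) for u, v in plate_px]

    # Actual distance travelled: sum of frame-to-frame displacements in meters.
    meters = sum(
        math.hypot(b[0] - a[0], b[1] - a[1]) for a, b in zip(road, road[1:])
    )

    # N frames span N - 1 frame intervals of 1 / fps seconds each.
    seconds = (len(plate_px) - 1) / fps
    return meters / seconds * 3.6  # m/s -> km/h
```

For example, with a pure scaling of 0.01 m per pixel and a plate that moves 50 pixels per frame over three frames, the plate covers 1 m in 0.08 s, which corresponds to 45 km/h. A real deployment would need a calibrated mapping that accounts for the plate's height above the road.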

Embodiment 3

[0146] Referring to Figure 5, a vehicle speed measurement system provided in an embodiment of the present invention comprises a close-up camera 501, a supplementary light source 502, and the monitoring machine 503 described in the above embodiment.

[0147] The optical axis of the close-up camera 501 faces the travel route of the vehicle under test; the camera collects images at a preset frequency and sends them to the monitoring machine 503;

[0148] The supplementary light source 502 provides additional illumination for the close-up camera 501 when ambient light is insufficient;

[0149] The monitoring machine 503 calculates the license plate trajectory across the multi-frame images sent by the close-up camera 501, judges whether the trajectory is correct, and selects the multi-frame images with a correct license plate trajectory as the image group to be analyzed; it then tracks and calculates the license plate imaging distance of multipl...
