Bidirectional and parallel decoding method of convolutional Turbo code

A bidirectional, parallel decoding method for convolutional Turbo codes, applied in the field of channel coding and Turbo code decoding. It addresses the long decoding delay of component decoders and reduces the required storage space.

Embodiment Construction

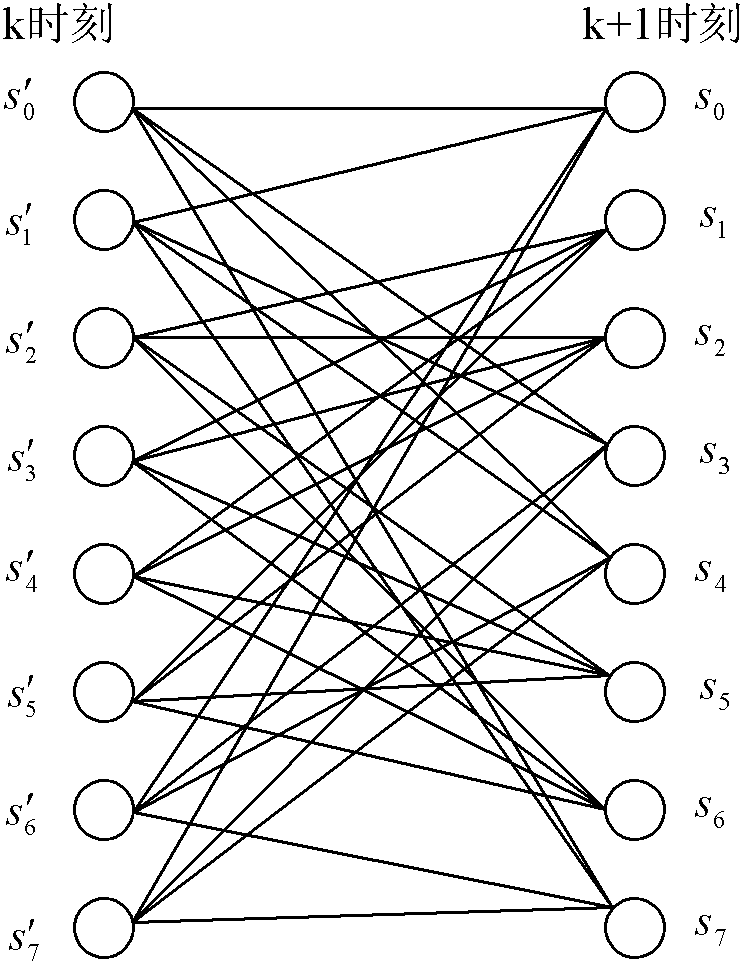

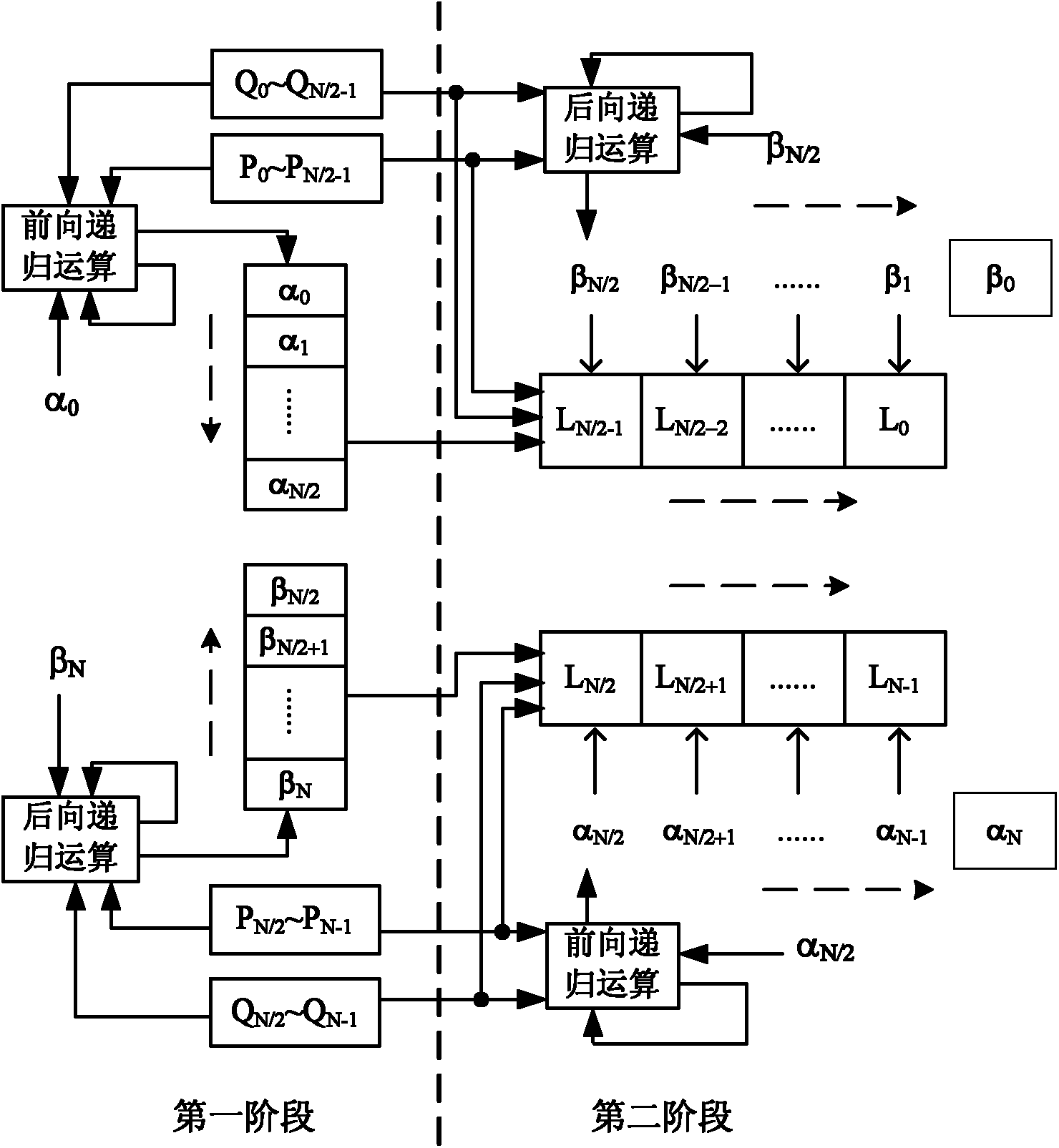

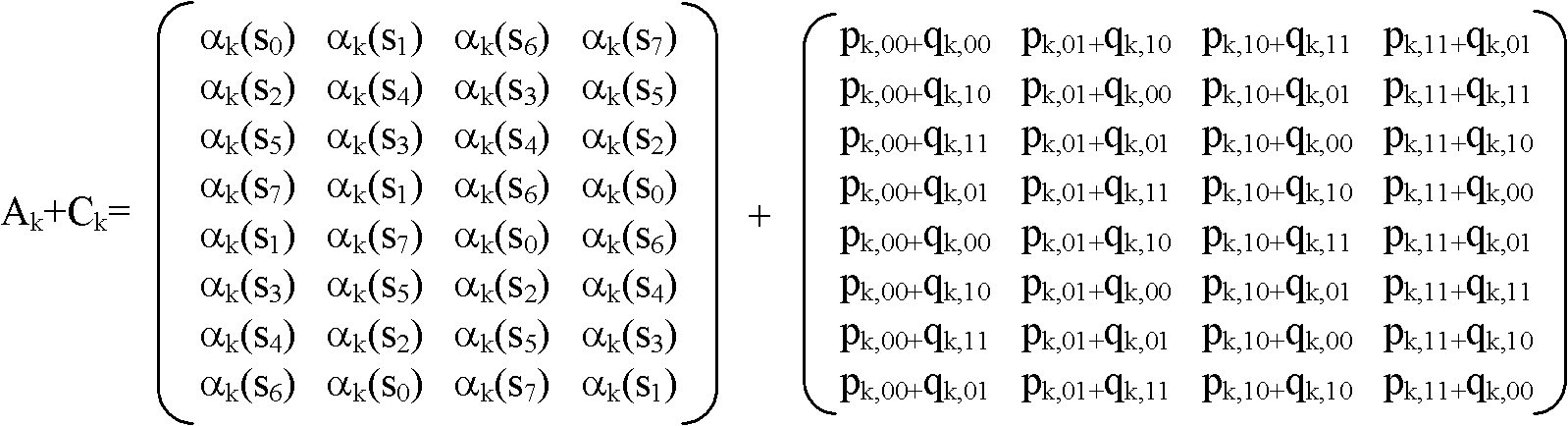

[0027] The present invention improves the decoding process inside the component decoder used in convolutional Turbo decoding; all other processing steps remain unchanged. The convolutional Turbo decoding process includes:

[0028] The data to be decoded and the a priori likelihood ratio information are input into an iterative decoder made up of two component decoders connected in parallel;

[0029] While the preset maximum number of iterations has not been reached, a component decoder outputs a posteriori likelihood ratio information after decoding. This information is converted into extrinsic information and, after interleaving or deinterleaving, is input to the other component decoder as its a priori likelihood ratio information;

[0030] When the preset maximum number of iterations is reached, the last working component decoder decodes and outputs the a posteriori likelihood ratio information; the decoding result is obtained after deinterleaving and hard decision.
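The iteration described in [0028] to [0030] can be sketched as follows. This is only a minimal illustration of the outer loop, not the component decoder of the invention. Several things here are assumptions that do not come from the text: the names `turbo_decode`, `extrinsic`, `interleave` and `deinterleave`, the component decoder signature, binary (per-bit) log-likelihood ratios, an all-zero initial a priori input, the usual rule that extrinsic information equals the a posteriori value minus the a priori value minus the systematic channel value, and the convention that a positive value decides bit 1.

```python
from typing import Callable, List, Sequence

# Hypothetical component decoder signature (an assumption for illustration):
# (systematic LLRs, parity LLRs, a priori LLRs) -> a posteriori LLRs
ComponentDecoder = Callable[
    [Sequence[float], Sequence[float], Sequence[float]], List[float]
]


def interleave(values: Sequence[float], perm: Sequence[int]) -> List[float]:
    """Reorder values so that output position i holds values[perm[i]]."""
    return [values[p] for p in perm]


def deinterleave(values: Sequence[float], perm: Sequence[int]) -> List[float]:
    """Inverse of interleave for the same permutation."""
    out = [0.0] * len(values)
    for i, p in enumerate(perm):
        out[p] = values[i]
    return out


def extrinsic(post: Sequence[float], apriori: Sequence[float],
              sys_llr: Sequence[float]) -> List[float]:
    """Assumed conversion: a posteriori minus a priori minus systematic."""
    return [l - a - s for l, a, s in zip(post, apriori, sys_llr)]


def turbo_decode(sys_llr: Sequence[float], par1_llr: Sequence[float],
                 par2_llr: Sequence[float], perm: Sequence[int],
                 dec1: ComponentDecoder, dec2: ComponentDecoder,
                 max_iter: int) -> List[int]:
    if max_iter < 1:
        raise ValueError("max_iter must be at least 1")
    sys_int = interleave(sys_llr, perm)   # decoder 2 works in interleaved order
    apriori1 = [0.0] * len(sys_llr)       # assumed: no prior knowledge at start
    post2: List[float] = []
    for _ in range(max_iter):
        # Decoder 1: a posteriori -> extrinsic -> interleave -> prior of decoder 2
        post1 = dec1(sys_llr, par1_llr, apriori1)
        apriori2 = interleave(extrinsic(post1, apriori1, sys_llr), perm)
        # Decoder 2: a posteriori -> extrinsic -> deinterleave -> prior of decoder 1
        post2 = dec2(sys_int, par2_llr, apriori2)
        apriori1 = deinterleave(extrinsic(post2, apriori2, sys_int), perm)
    # Maximum iterations reached: deinterleave the last output, then hard decision
    return [1 if v > 0 else 0 for v in deinterleave(post2, perm)]
```

In this sketch the second decoder is always the last one to run, so its output is in interleaved order and must be deinterleaved before the hard decision. Any real component decoder (for example a MAP or Max-Log-MAP implementation) would be passed in as `dec1` and `dec2`.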

[0031] This embodiment improv...
