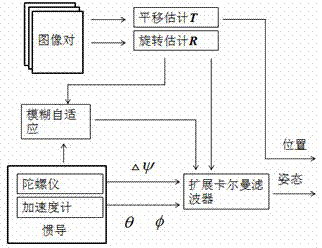

Machine vision and inertial navigation fusion-based mobile robot motion attitude estimation method

A technology in the field of robot motion and mobile robots, applied in instrumentation, navigation, surveying, etc.

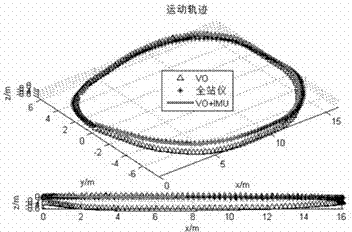

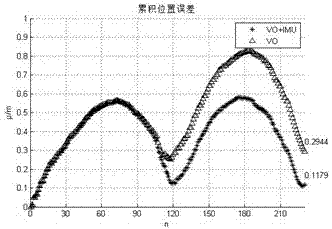

Examples

Embodiment

[0072] 1. Synchronously collect binocular camera images and three-axis inertial navigation data from the mobile robot

[0073] The setup uses a Pioneer 3 mobile robot, a Naiwei NV100 strapdown inertial navigator, and a Bumblebee2 binocular stereo camera. The inertial navigator samples at 100 Hz and is placed at the geometric center of the robot. Its Z axis is perpendicular to the ground, its X axis points directly ahead of the robot, and its Y axis points to the right side of the robot, perpendicular to both the X and Z axes. The binocular stereo camera is placed at the front of the robot with a pitch angle of 45 degrees, and the camera sampling frequency is 1 Hz.
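Because the inertial navigator runs at 100 Hz and the camera at 1 Hz, roughly 100 inertial samples fall between two consecutive stereo frames. The following minimal sketch shows one way such samples could be grouped per frame interval. It assumes both sensors are timestamped in seconds on a shared clock; the function name `group_imu_by_frame` and the synthetic timestamps are hypothetical and are not taken from the patent.

```python
from bisect import bisect_left

IMU_RATE_HZ = 100    # inertial sampling frequency stated in [0073]
CAMERA_RATE_HZ = 1   # stereo camera sampling frequency stated in [0073]


def group_imu_by_frame(imu_times, frame_times):
    """For each pair of consecutive frames, return the indices of the IMU
    samples with frame_times[k] <= t < frame_times[k + 1].

    Assumption: both inputs are sorted timestamps in seconds on one clock.
    """
    groups = []
    for start, end in zip(frame_times, frame_times[1:]):
        lo = bisect_left(imu_times, start)  # first sample at or after start
        hi = bisect_left(imu_times, end)    # first sample at or after end
        groups.append(list(range(lo, hi)))
    return groups


# Synthetic data: 3 seconds of IMU timestamps and 4 camera frames.
imu_times = [i / IMU_RATE_HZ for i in range(3 * IMU_RATE_HZ)]
frame_times = [k / CAMERA_RATE_HZ for k in range(4)]
groups = group_imu_by_frame(imu_times, frame_times)
```

Each entry of `groups` lists the inertial samples that would be integrated between one camera frame and the next. Real hardware would also need handling for clock offset and dropped samples, which this sketch omits.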

[0074] 2. Extract and match features between the image pairs of consecutive frames and estimate the motion pose

[0075] For the left and right images collected by the binocular camera, extract scale-invariant feature transform (SIFT) features, including calculating the extreme...
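The available text breaks off before the matching procedure is described. As an illustration only, the sketch below matches descriptor vectors by nearest Euclidean distance and filters them with a ratio test, a strategy commonly paired with SIFT. Whether the patent uses this criterion is not confirmed by the text shown here; the function name `match_descriptors`, the 0.8 threshold, and the toy 4-D vectors (standing in for 128-D SIFT descriptors) are all assumptions.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a to its nearest neighbour in desc_b.

    A match is kept only if the nearest distance is below `ratio` times the
    second-nearest distance (ratio test; the 0.8 threshold is an assumption).
    Returns a list of (index_in_a, index_in_b) pairs.
    """
    matches = []
    for i, da in enumerate(desc_a):
        # Euclidean distance from descriptor i to every descriptor in desc_b.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue  # the ratio test needs at least two candidates
        (best, j_best), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j_best))
    return matches


# Toy descriptors: the third left descriptor is ambiguous and gets rejected.
left = [(1.0, 0.0, 0.0, 0.0), (0.0, 1.0, 0.0, 0.0), (0.5, 0.5, 0.5, 0.5)]
right = [(0.0, 0.9, 0.1, 0.0), (0.9, 0.1, 0.0, 0.0), (0.0, 0.0, 1.0, 0.0)]
matches = match_descriptors(left, right)
```

The same routine could be applied both between the left and right images of one stereo pair and between consecutive frames; the brute-force search is quadratic in the number of descriptors and is meant for clarity rather than speed.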
