Three-dimensional video color calibration method based on scale-invariant feature transform (SIFT) features and generalized regression neural networks (GRNN)

A color correction technique for stereoscopic video, applicable to biological neural network models, color signal processing circuits, and related fields. It addresses shortcomings such as not considering the influence of correction information, a complex calculation process, and a limited application range, and it offers a simple process and broad applicability.

Examples

Embodiment 1

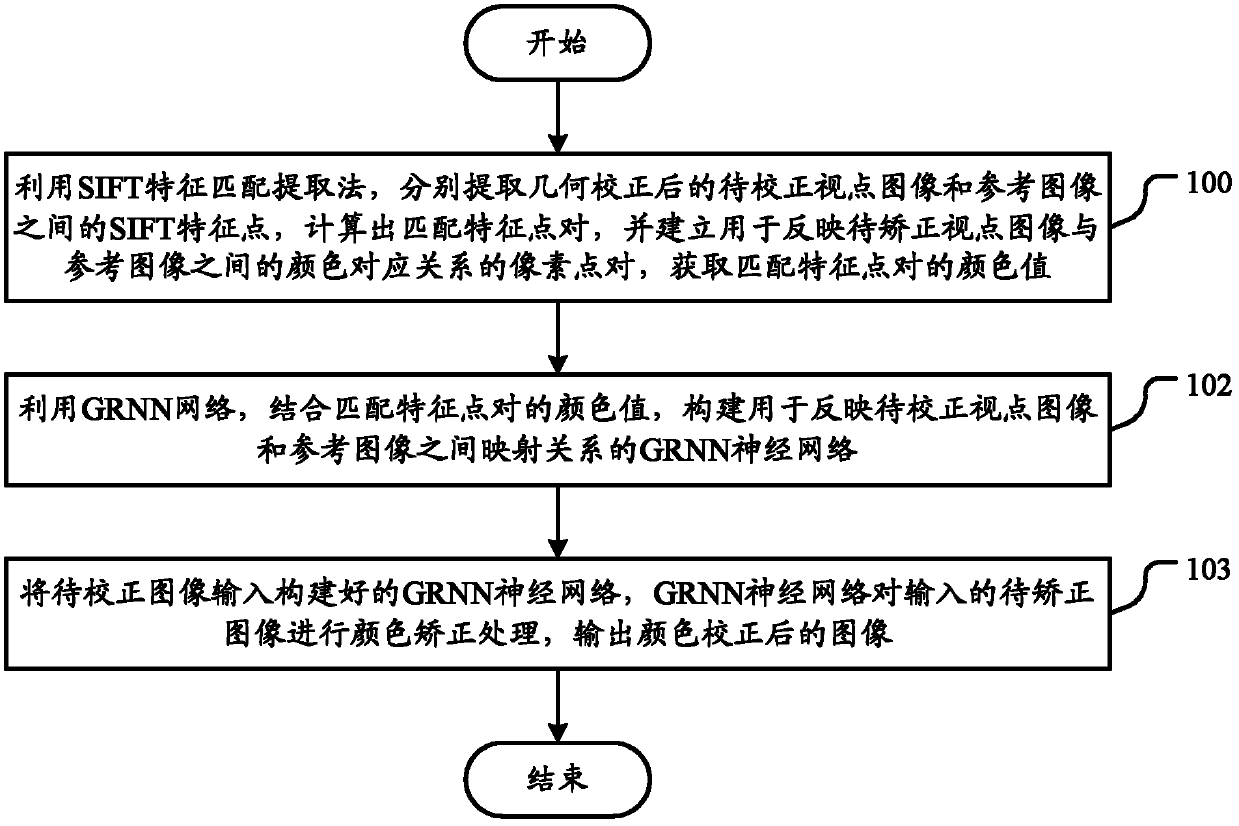

[0041] According to an embodiment of the present invention, a stereoscopic video color correction method based on SIFT features and a GRNN network is provided. As shown in Figure 1, the method of this embodiment includes:

[0042] Step 100: Using SIFT feature matching, extract the SIFT feature points of the viewpoint image to be corrected and of the reference image, compute the matching feature point pairs, establish the pixel point pairs that reflect the color correspondence between the viewpoint image to be corrected and the reference image, and obtain the color values of the matching feature point pairs;

[0043] In step 100, SIFT is a computer vision algorithm used to detect and describe local features in an image. It searches for extreme points in scale space and extracts their position, scale, and rotation invariants. The algorithm w...
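The matching and color-sampling part of Step 100 can be illustrated with a minimal sketch. It assumes that SIFT keypoint positions and descriptors have already been extracted by some SIFT implementation, and it uses a nearest-neighbor ratio test to accept matches. The ratio test, the threshold `ratio=0.75`, and the function names `match_descriptors` and `color_pairs` are assumptions of this sketch; the source does not state how matches are selected.

```python
import math

def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Return (i, j) pairs where desc_a[i] is matched to its nearest desc_b[j].

    A match is kept only when the nearest distance is clearly smaller than
    the second-nearest distance (ratio test, an assumption of this sketch).
    """
    pairs = []
    for i, da in enumerate(desc_a):
        # Euclidean distance from this descriptor to every candidate.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) >= 2 and dists[0][0] < ratio * dists[1][0]:
            pairs.append((i, dists[0][1]))
    return pairs

def color_pairs(img_src, kp_src, img_ref, kp_ref, pairs):
    """Collect (source RGB, reference RGB) at the matched keypoint positions.

    Images are row-major nested lists of RGB tuples; keypoints are (x, y).
    """
    out = []
    for i, j in pairs:
        xs, ys = kp_src[i]
        xr, yr = kp_ref[j]
        out.append((img_src[ys][xs], img_ref[yr][xr]))
    return out

# Toy data standing in for real SIFT output (hypothetical values).
desc_src = [(0.0, 0.0), (10.0, 10.0)]
desc_ref = [(10.0, 9.0), (0.0, 1.0), (50.0, 50.0)]
kp_src = [(0, 0), (1, 1)]
kp_ref = [(1, 0), (0, 1)]
img_src = [[(100, 50, 20), (0, 0, 0)], [(0, 0, 0), (30, 60, 90)]]
img_ref = [[(0, 0, 0), (200, 100, 40)], [(110, 55, 22), (0, 0, 0)]]

pairs = match_descriptors(desc_src, desc_ref)
samples = color_pairs(img_src, kp_src, img_ref, kp_ref, pairs)
```

Each entry of `samples` pairs the color in the image to be corrected with the color at the matched position in the reference image, which is the kind of data the later GRNN step is described as using.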

Embodiment 2

[0049] As shown in Figure 2, the stereoscopic video color correction method based on SIFT features and a GRNN network of this embodiment includes:

[0050] Step 201: Select the RGB color space, and perform color conversion on the viewpoint image to be corrected;

[0051] Step 202: Using SIFT feature matching, extract the SIFT feature points of the viewpoint image to be corrected and of the reference image after geometric correction, compute the matching feature point pairs, establish the pixel point pairs that reflect the color correspondence between the viewpoint image to be corrected and the reference image, and obtain the color values of the matching feature point pairs;

[0052] Step 203: Using the color values of the matching feature point pairs, construct a GRNN that reflects the mapping relationship between the viewpoint image to be corrected and...
