Method and device for scheduling memory pool in multi-core central processing unit system

The invention relates to central processing unit and memory pool technology in the computer field. It addresses the reduced timeliness of data processing and the reduced processing performance of multi-core CPU systems, with the effect of shortening memory allocation time and improving both performance and timeliness.

Examples

Embodiment 1

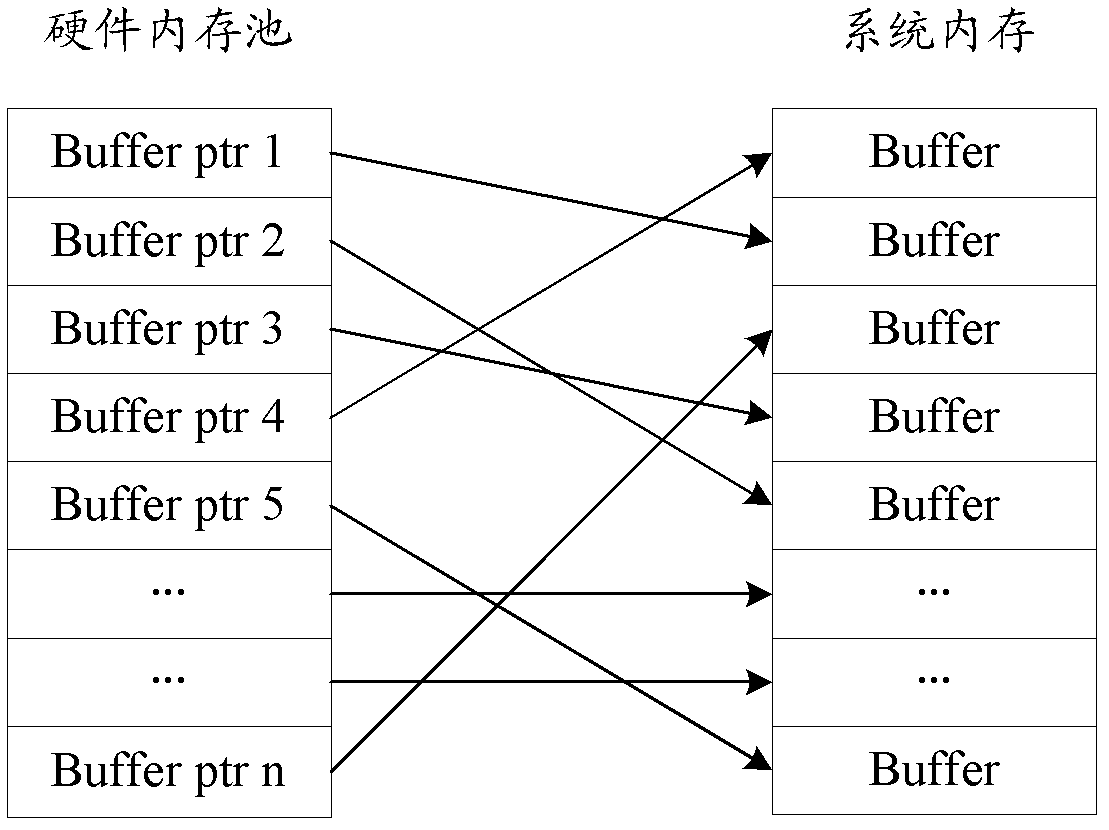

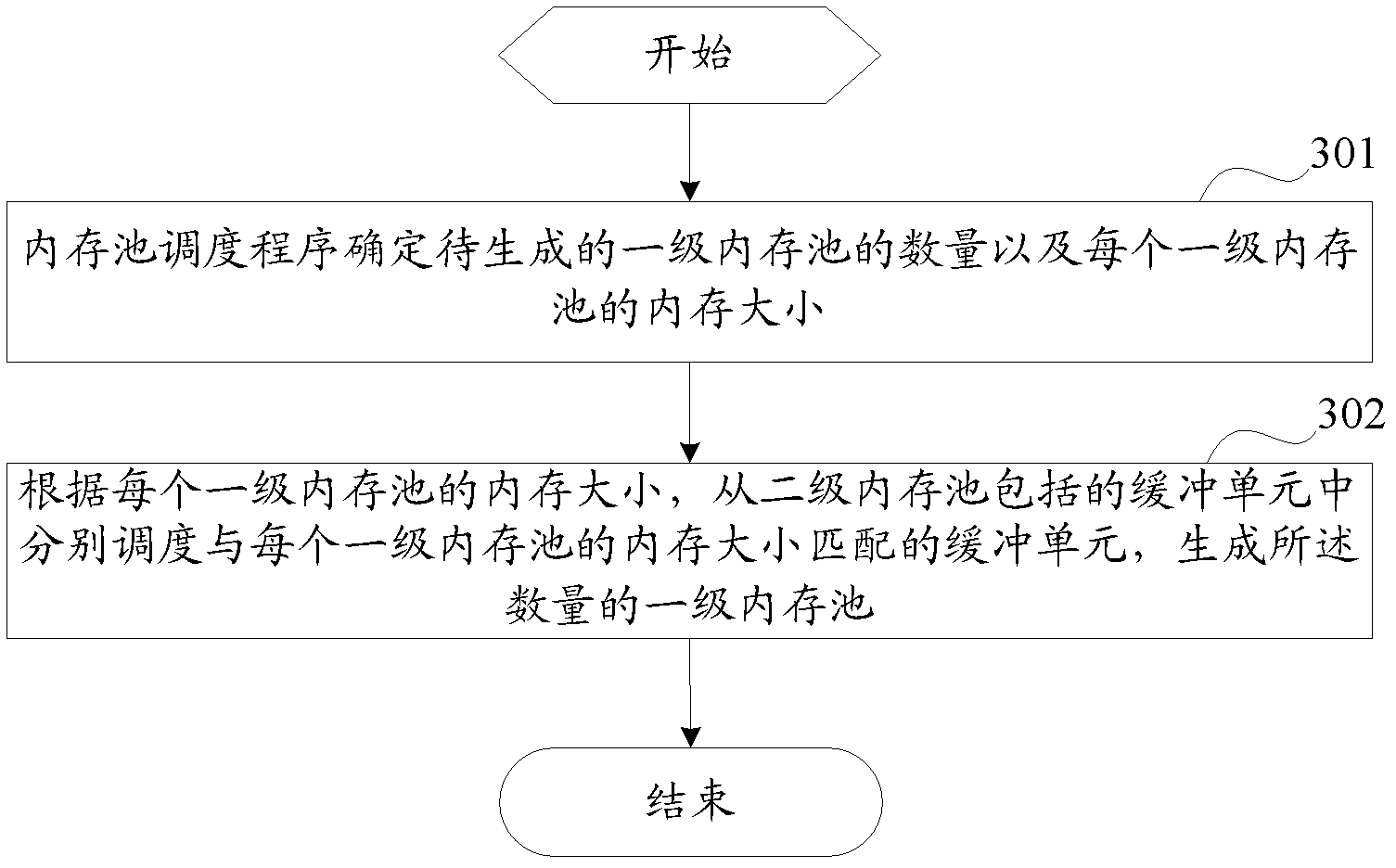

[0036] Embodiment 1 of the present invention provides the generation process of the first-level (primary) memory pool. This process is generally completed in the system initialization stage. As the system runs, first-level memory pools can be added or removed according to the actual situation.

[0037] Figure 3 shows a schematic flow chart of generating a primary memory pool. Specifically, generating a primary memory pool mainly includes the following steps 301 and 302:

[0038] Step 301, the memory pool scheduler determines the number of primary memory pools to be generated and the memory size of each primary memory pool.

[0039] The number of primary memory pools to be generated may be determined according to the number of concurrent pipeline threads in the multi-core CPU system. Preferably, the number of primary memory pools to be generated may be equal to that of concurr...
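The sizing decision in step 301 can be illustrated with a minimal Python sketch. It assumes one primary pool per concurrent pipeline thread and that every pool holds the same number of equally sized buffer units. The names `PrimaryPool`, `plan_primary_pools`, and `build_primary_pools`, and the parameters `units_per_pool` and `unit_size`, are hypothetical and do not come from the patent text. The second function is only one possible way to materialise the plan.

```python
from dataclasses import dataclass, field


@dataclass
class PrimaryPool:
    """A per-thread pool holding a fixed number of equally sized buffer units."""
    pool_id: int
    unit_size: int
    free_units: list = field(default_factory=list)


def plan_primary_pools(num_pipeline_threads, units_per_pool, unit_size):
    """Sketch of step 301: decide how many primary pools to create and their size.

    Assumption: one pool per concurrent pipeline thread, all pools the same size.
    """
    if num_pipeline_threads < 1:
        raise ValueError("at least one pipeline thread is required")
    return [(pool_id, units_per_pool, unit_size)
            for pool_id in range(num_pipeline_threads)]


def build_primary_pools(plan):
    """Hypothetical follow-on: pre-allocate the buffer units of each planned pool."""
    pools = []
    for pool_id, unit_count, unit_size in plan:
        units = [bytearray(unit_size) for _ in range(unit_count)]
        pools.append(PrimaryPool(pool_id, unit_size, units))
    return pools
```

For example, `build_primary_pools(plan_primary_pools(4, 8, 256))` would yield four pools, each with eight 256-byte buffer units.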

Embodiment 2

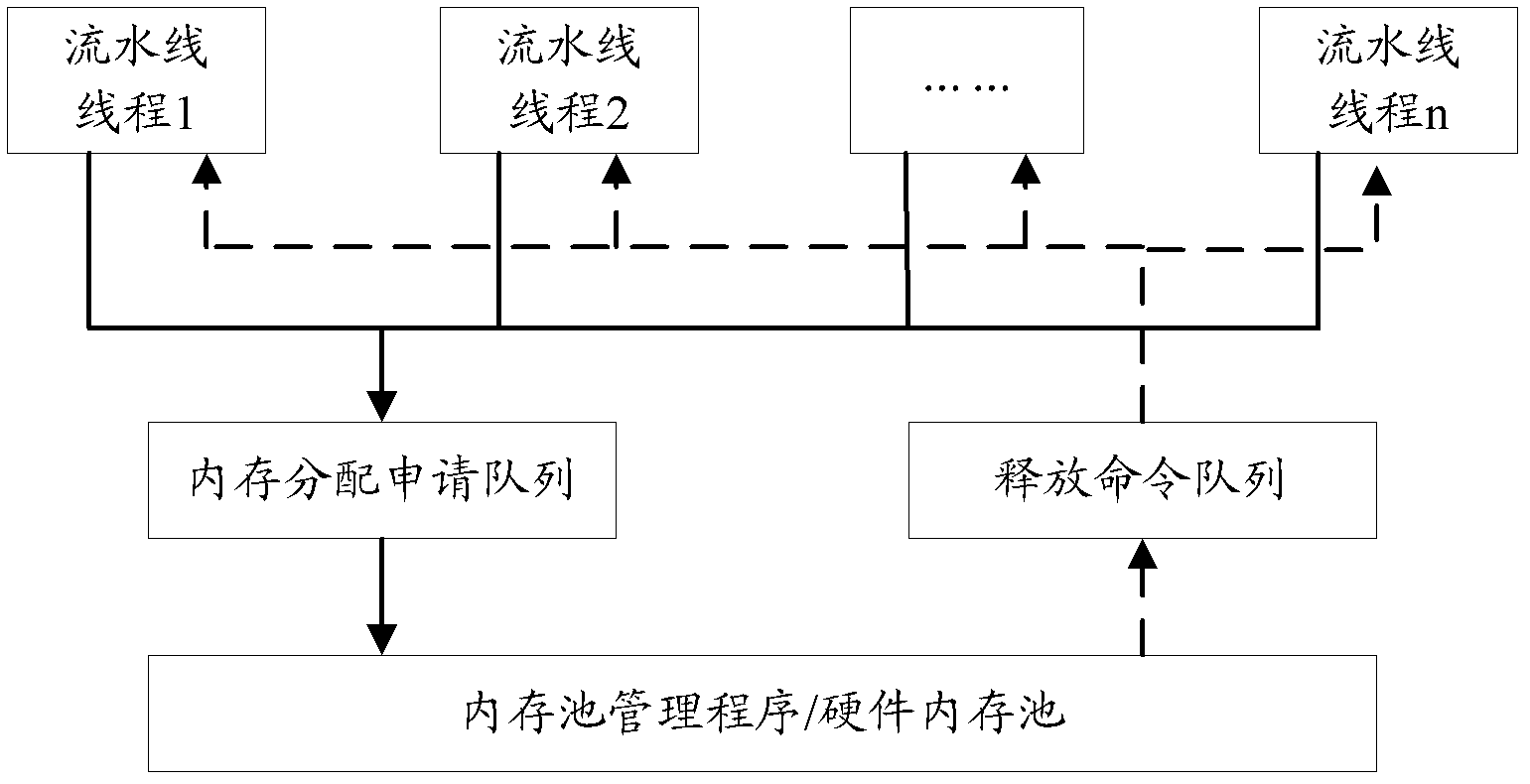

[0077] Embodiment 2 of the present invention provides a method for scheduling memory pools in a multi-core CPU system. The method mainly utilizes at least two primary memory pools generated in Embodiment 1 to implement memory pool scheduling for concurrent pipeline threads.

[0078] Figure 7 shows a schematic diagram of the relationship between the primary memory pool, the secondary memory pool, and the pipeline threads when the memory pool scheduler function is integrated into the secondary memory pool. Figure 7 takes the case in which each pipeline thread corresponds to one primary memory pool as an example. As Figure 7 shows, each pipeline thread sends a memory allocation application to the secondary memory pool through arrow 1. After the secondary memory pool determines the primary memory pool corresponding to the pipeline thread, it allocates that primary memory pool to the corresponding pipeline thread through arrows 2 and 3, subsequ...
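The request flow described for Figure 7 might be modelled roughly as follows. This is a sketch under stated assumptions, not the patented implementation: the class `SecondaryPool`, its `request` method, and the helper `take_buffer` are hypothetical names, a primary pool is represented as a plain list of `bytearray` buffer units, and the assumption that a thread can then take buffers from its own pool without locking is an interpretation rather than something the visible text states.

```python
import threading


class SecondaryPool:
    """Secondary pool with the scheduler function integrated (hypothetical model)."""

    def __init__(self, primary_pools):
        self._unassigned = list(primary_pools)  # primary pools not yet bound to a thread
        self._by_thread = {}                    # thread id -> its primary pool
        self._lock = threading.Lock()           # guards the thread-to-pool mapping only

    def request(self, thread_id):
        """Receive an application (arrow 1) and hand back the thread's
        primary pool (arrows 2 and 3)."""
        with self._lock:
            pool = self._by_thread.get(thread_id)
            if pool is None:
                if not self._unassigned:
                    raise MemoryError("no primary pool left to assign")
                pool = self._unassigned.pop(0)
                self._by_thread[thread_id] = pool
            return pool


def take_buffer(pool):
    """Take one buffer unit from a thread's own primary pool.

    Assumption: only the owning thread touches this pool, so no lock is taken.
    Returns None when the pool is exhausted.
    """
    return pool.pop() if pool else None
```

In this model the shared lock is held only while the mapping is looked up or created. Later allocations by the same thread go straight to its own pool, which is one plausible way such a design could reduce allocation time.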

Embodiment 3

[0096] Corresponding to the method for scheduling a memory pool in a multi-core CPU provided in Embodiment 1 and Embodiment 2 above, Embodiment 3 provides a device for scheduling a memory pool in a multi-core CPU. Figure 10 is a schematic diagram of the structure of this scheduling device. As shown in Figure 10, the device mainly includes:

[0097] A memory allocation application receiving unit 1001, a memory pool generating unit 1002, and a memory pool scheduling unit 1003;

[0098] Wherein:

[0099] A memory allocation application receiving unit 1001, configured to receive memory allocation applications sent by at least two pipeline threads respectively;

[0100] The memory pool generation unit 1002 is configured to generate at least two first-level memory pools, and distribute each generated first-level memory pool to a pipeline thread, wherein, the buffer units included in the first-level memory pool are obtained from the buffer uni...
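One way to picture how the three units of Figure 10 could fit together is the Python sketch below. The class names mirror units 1001 to 1003, but all method names and parameters are hypothetical. The behaviour of the scheduling unit is inferred by analogy with Embodiment 2, since its description is not shown here, and buffer units are simply pre-allocated `bytearray` objects rather than drawn from another pool.

```python
from collections import deque


class ReceivingUnit:
    """Unit 1001 (sketch): collects memory allocation applications from threads."""

    def __init__(self):
        self.applications = deque()

    def receive(self, thread_id, size):
        self.applications.append((thread_id, size))


class GeneratingUnit:
    """Unit 1002 (sketch): generates primary pools and gives one to each thread."""

    def generate(self, thread_ids, units_per_pool, unit_size):
        if len(thread_ids) < 2:
            raise ValueError("at least two primary pools are expected")
        return {tid: [bytearray(unit_size) for _ in range(units_per_pool)]
                for tid in thread_ids}


class SchedulingUnit:
    """Unit 1003 (assumed behaviour): serves an application from the
    requesting thread's own primary pool."""

    def __init__(self, pools_by_thread):
        self.pools_by_thread = pools_by_thread

    def serve(self, application):
        thread_id, size = application
        pool = self.pools_by_thread[thread_id]
        # Refuse when the pool is empty or the request exceeds one buffer unit.
        if not pool or size > len(pool[-1]):
            return None
        return pool.pop()
```

A caller would queue applications through `ReceivingUnit.receive`, build the pools once with `GeneratingUnit.generate`, and then pass each queued application to `SchedulingUnit.serve`.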
