Memory management method and device capable of realizing memory level parallelism

A memory management method and device that exploit memory-level parallelism, addressing problems such as the limited performance of large-capacity main memory.

Embodiment Construction

[0027] The present invention will now be described in further detail with reference to the accompanying drawings and preferred embodiments. The drawings are simplified schematic diagrams that illustrate only the basic structure of the invention, so they show only the configurations relevant to it.

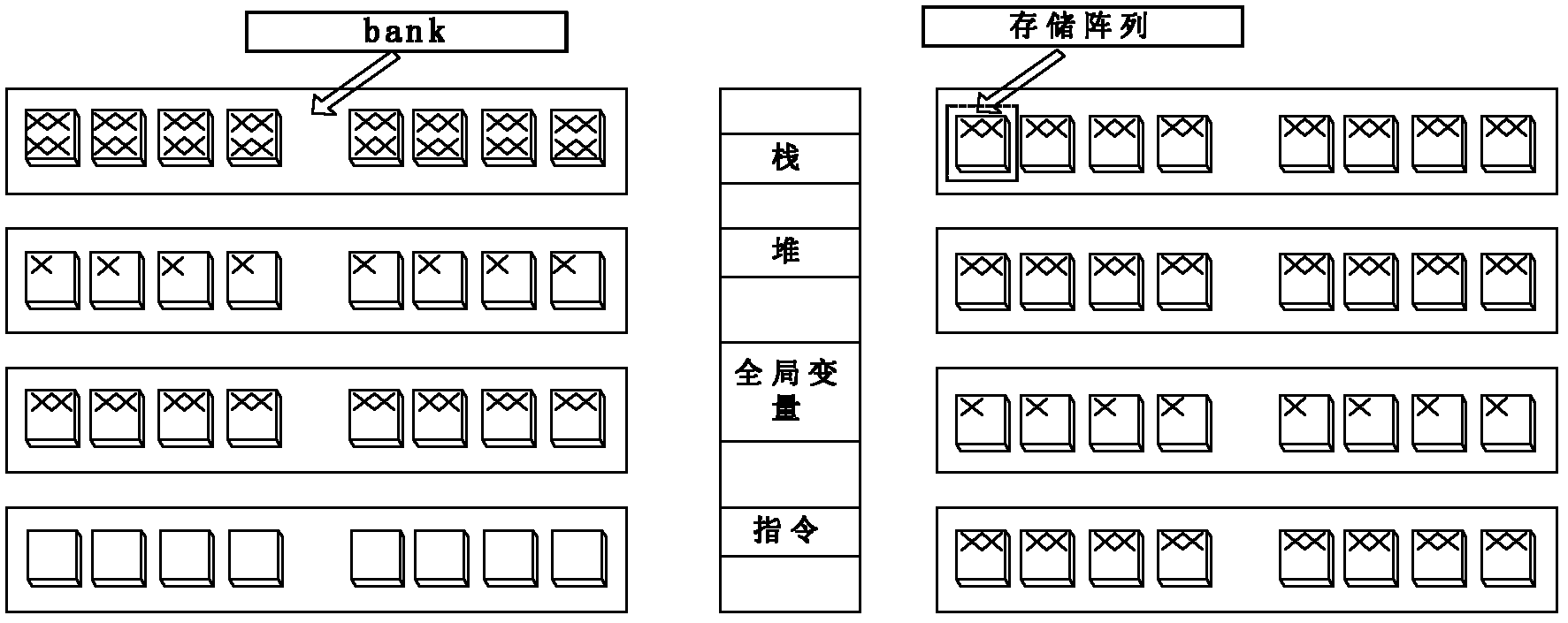

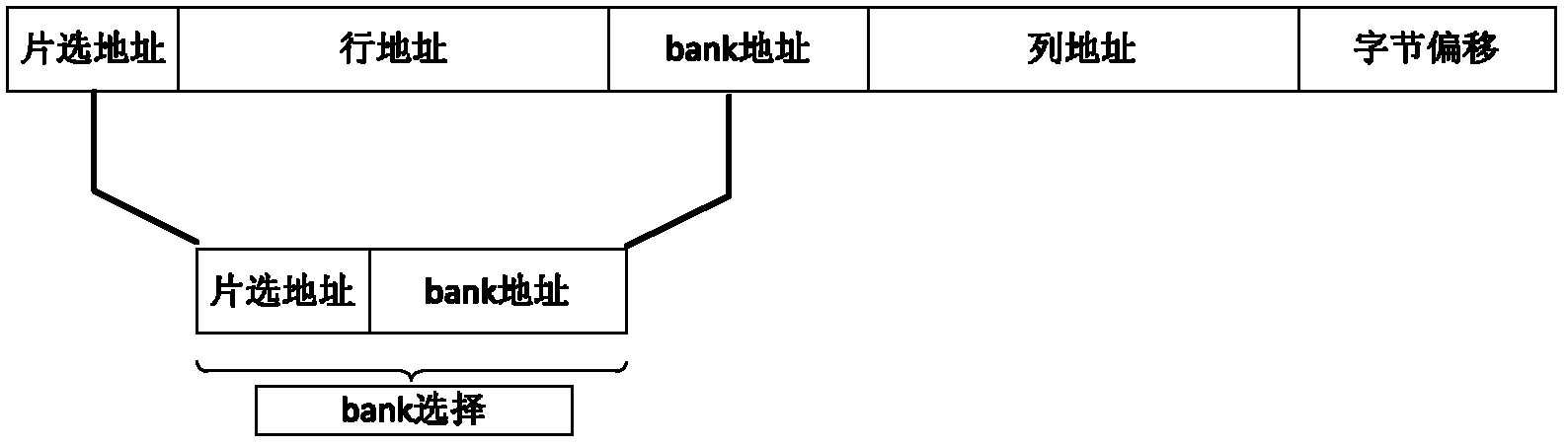

[0028] The invention exploits the structural feature that a large-capacity main memory contains multiple banks, and divides the main memory into groups according to the high-order chip-select bits of the address. At the same time, data with high conflict overhead is mapped to different bank groups, which reduces memory access conflicts during application execution.
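The grouping idea in [0028] can be illustrated with a minimal sketch. The 32-bit address width, the use of the top 2 bits as chip-select bits, and the names `bank_group` and `assign_groups` are assumptions made for illustration only. They are not taken from the patent text, and the round-robin placement is one simple way to separate high-conflict data, not necessarily the claimed method.

```python
# Hypothetical address layout (assumption, not from the source):
# a 32-bit physical address whose top 2 bits serve as chip-select bits,
# giving 4 bank groups.
ADDRESS_BITS = 32
GROUP_BITS = 2
NUM_GROUPS = 1 << GROUP_BITS


def bank_group(addr):
    """Return the bank-group index held in the high-order address bits."""
    return (addr >> (ADDRESS_BITS - GROUP_BITS)) & (NUM_GROUPS - 1)


def assign_groups(conflict_cost):
    """Spread data objects across bank groups, highest conflict cost first.

    conflict_cost maps a data-object name to an estimated conflict overhead.
    Objects are ranked by overhead and placed round-robin, so the most
    conflict-prone objects land in different groups.
    """
    ranked = sorted(conflict_cost, key=conflict_cost.get, reverse=True)
    return {name: i % NUM_GROUPS for i, name in enumerate(ranked)}


if __name__ == "__main__":
    print(bank_group(0xC0000000))
    print(assign_groups({"a": 10, "b": 50, "c": 30}))
```

With this layout, two addresses that differ in their top bits fall into different groups and can be served by different banks, which is the effect the paragraph describes.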

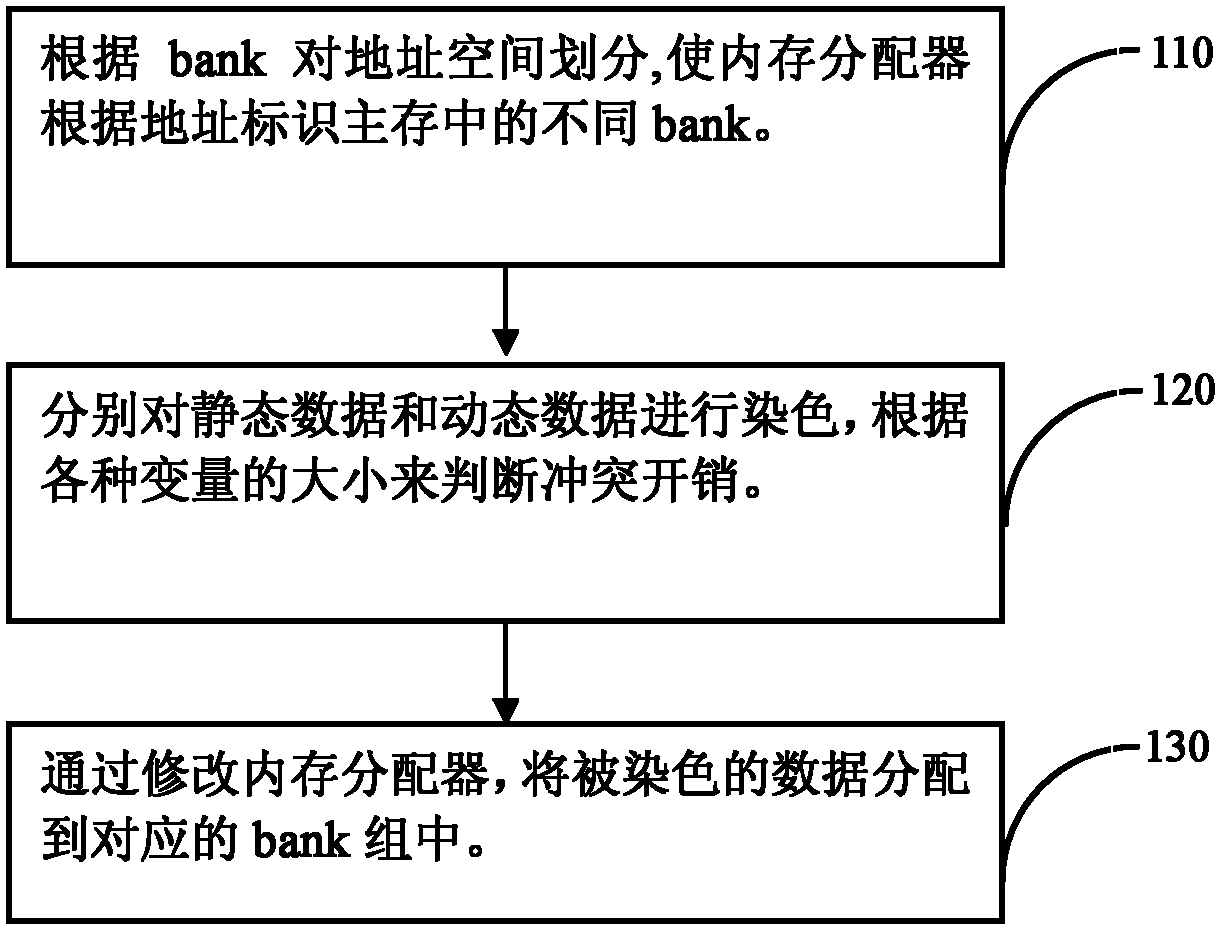

[0029] The process of the memory management method using the large-capacity high-speed cache provided by the present invention is shown in Figure 2 and includes the following steps:

[0030] 110: Divide the address space according to t...
