Real-scene navigation method and real-scene navigation device

A real-scene navigation technology, applicable to road-network navigators and other fields. It addresses two problems: the large data volume of street-view images, and the difficulty users have in choosing which direction to move (the action direction). It also reduces the amount of data transmitted.

Examples

Embodiment 1

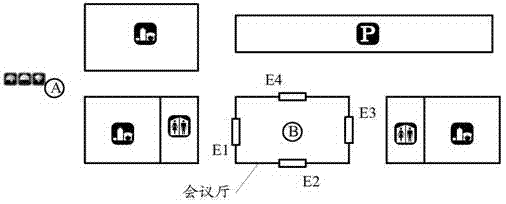

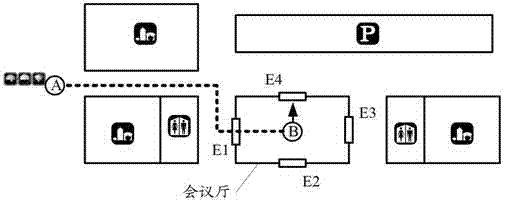

[0059] Figure 2 is the flow chart of the real-scene navigation method provided by Embodiment 1 of the present invention. As shown in Figure 2, the method mainly includes the following steps:

[0060] Step 201: The system control center receives a real-scene navigation request from the user interface control system. The request includes information about destination A.

[0061] Through the user interface control system, the user can request a navigation route from the current location to destination A. In other words, the user interface control system sends information including destination A to the system control center.
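Step 201 amounts to a simple message exchange between two components. The sketch below models it under stated assumptions: `NavigationRequest` and `SystemControlCenter` are hypothetical names, and the optional coordinate fields are illustrative only. The text says only that the request carries destination A.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class NavigationRequest:
    """Hypothetical real-scene navigation request from the UI control system.

    Per Step 201 it must identify destination A; the coordinate fields are
    an illustrative assumption, not taken from the patent.
    """
    destination_name: str
    destination_lat: Optional[float] = None
    destination_lon: Optional[float] = None


@dataclass
class SystemControlCenter:
    """Hypothetical stand-in for the system control center."""
    pending: List[NavigationRequest] = field(default_factory=list)

    def receive_request(self, request: NavigationRequest) -> NavigationRequest:
        # Step 201: accept the request only if it identifies destination A.
        if not request.destination_name.strip():
            raise ValueError("navigation request must include destination A")
        # Coordinates, if supplied, must come as a complete pair.
        if (request.destination_lat is None) != (request.destination_lon is None):
            raise ValueError("destination coordinates must be given as a pair")
        self.pending.append(request)
        return request
```

Keeping the request immutable (`frozen=True`) means the control center can queue it safely while later steps, such as obtaining location B in Step 202, run.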

[0062] Step 202: The system control center obtains the user's current geographic location B from the user positioning system.

[0063] The user positioning system determines the current geographic location of the user based on satellite positioning systems (GPS signals, etc.), wireless communication networks, WiFi networ...
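The excerpt lists several location sources (satellite, wireless network, WiFi) but is cut off before explaining how they are combined. One common option, shown here purely as an assumption and not as the patent's method, is an inverse-variance weighted mean of the available fixes. `PositionFix`, `fuse_fixes`, and the treatment of `accuracy_m` as a 1-sigma error are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PositionFix:
    source: str        # e.g. "gps", "cellular", "wifi" (illustrative labels)
    lat: float         # degrees
    lon: float         # degrees
    accuracy_m: float  # assumed 1-sigma horizontal error, in metres


def fuse_fixes(fixes: List[PositionFix]) -> Tuple[float, float, float]:
    """Inverse-variance weighted mean of nearby fixes -> (lat, lon, sigma_m).

    Averaging degrees directly is only reasonable when all fixes lie close
    together (hundreds of metres) and away from the antimeridian.
    """
    usable = [f for f in fixes if f.accuracy_m > 0]
    if not usable:
        raise ValueError("no usable position fix")
    # Weight each fix by 1 / sigma^2 so that more precise sources dominate.
    weights = [1.0 / (f.accuracy_m ** 2) for f in usable]
    total = sum(weights)
    lat = sum(w * f.lat for w, f in zip(weights, usable)) / total
    lon = sum(w * f.lon for w, f in zip(weights, usable)) / total
    # For independent errors the combined variance is 1 / sum(1 / sigma_i^2).
    sigma = (1.0 / total) ** 0.5
    return lat, lon, sigma
```

The fused `(lat, lon)` would then serve as location B. A production system would more likely use a filter that also tracks motion over time.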

Embodiment 2

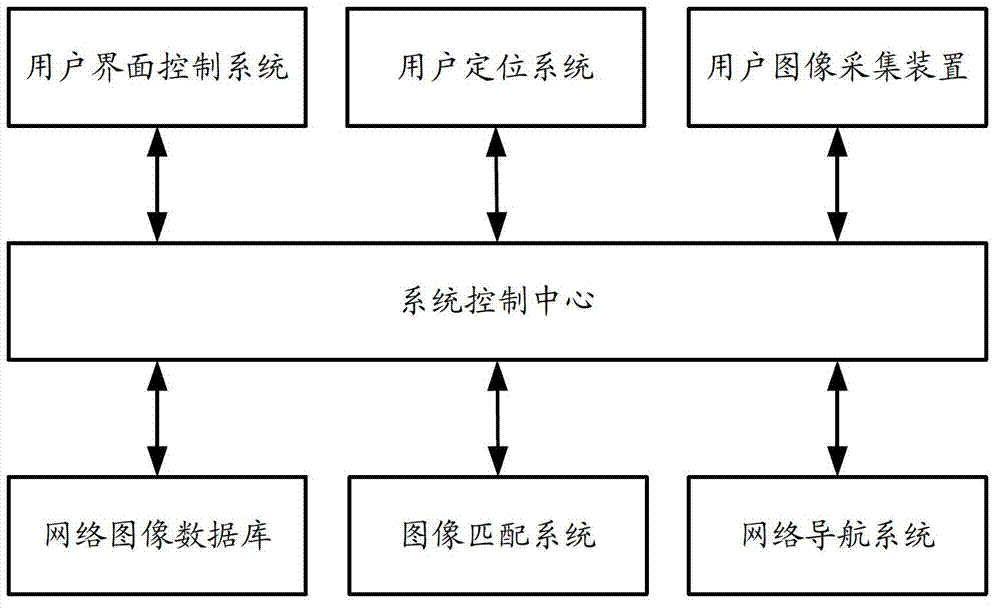

[0092] Figure 11 is the structural diagram of the real-scene navigation device provided by Embodiment 2 of the present invention. As shown in Figure 11, the device may include a real-scene image acquisition unit 1101, a network image acquisition unit 1102, a matching image acquisition unit 1103, and an action direction determination unit 1104.
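The four units of Figure 11 form a pipeline. The sketch below wires them together as injected callables. The excerpt does not describe the internals of units 1103 and 1104, so they are left as plug-in functions. The class name, the field names, and the inputs assumed for unit 1104 (matched image plus destination A) are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence, Tuple

Location = Tuple[float, float]  # (latitude, longitude) in degrees


@dataclass
class RealSceneNavigationDevice:
    """Hypothetical wiring of units 1101-1104 from Figure 11.

    Each unit is injected as a callable, so concrete implementations
    (camera access, database query, image matching, direction logic)
    can be swapped without changing the pipeline.
    """
    acquire_real_scene_image: Callable[[], Any]                  # unit 1101 -> image I
    acquire_network_images: Callable[[Location], List[Any]]      # unit 1102 -> data set U
    acquire_matching_image: Callable[[Any, Sequence[Any]], Any]  # unit 1103 -> match in U
    determine_action_direction: Callable[[Any, Location], str]   # unit 1104 (assumed inputs)

    def navigate(self, location_b: Location, destination_a: Location) -> str:
        image_i = self.acquire_real_scene_image()
        data_set_u = self.acquire_network_images(location_b)
        matched = self.acquire_matching_image(image_i, data_set_u)
        # Assumption: the action direction is derived from the matched image
        # and destination A; the excerpt does not specify unit 1104's inputs.
        return self.determine_action_direction(matched, destination_a)
```

For example, with stub units, `navigate((0.0, 0.0), (1.0, 1.0))` runs the four stages in order and returns whatever direction string unit 1104 produces.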

[0093] The real-scene image acquisition unit 1101 is configured to acquire, from the user's image acquisition device, a real-scene image I of the scene where the user is currently located. Image I is the image information of the real scene around the user. The captured image may be a single frame or a video.
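Because image I may be either a single frame or a video, the matching stage needs a uniform input. One possible normalisation, sketched under assumptions not stated in the patent, treats a single frame as a one-element sequence and subsamples a video. The function name `frames_for_matching` and the `step` and `max_frames` parameters are hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def frames_for_matching(frames: Sequence[T], step: int = 10, max_frames: int = 5) -> List[T]:
    """Return the frame(s) of real-scene image I to use for matching.

    A single-frame capture is passed as a one-element sequence and comes
    back unchanged; for a video, every `step`-th frame is kept, up to
    `max_frames`, so fewer frames are compared against data set U.
    """
    if step < 1 or max_frames < 1:
        raise ValueError("step and max_frames must be positive")
    if not frames:
        raise ValueError("empty capture")
    # Slicing works for any list or tuple of decoded frames.
    return list(frames[::step][:max_frames])
```

Subsampling trades some robustness for speed. Picking frames by sharpness or scene change would be an alternative design choice.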

[0094] The network image acquisition unit 1102 is configured to acquire, from the network image database, the images corresponding to geographical locations within a preset range of the user's current geographic location B, forming an image data set U. The network image database cont...
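Forming data set U requires a distance test between each database image's location and location B. The sketch below assumes each image carries a latitude/longitude tag and uses the haversine great-circle distance; the patent excerpt does not say which distance measure is used. `GeoImage`, `haversine_m`, and `build_data_set_u` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass(frozen=True)
class GeoImage:
    image_id: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_m(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance in metres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    # Clamp to guard against rounding pushing sqrt(h) slightly above 1.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(h)))


def build_data_set_u(database: Iterable[GeoImage],
                     location_b: Tuple[float, float],
                     radius_m: float) -> List[GeoImage]:
    """Keep only images whose location lies within radius_m of location B."""
    return [img for img in database
            if haversine_m((img.lat, img.lon), location_b) <= radius_m]
```

Transmitting only the images inside the preset range, rather than a whole street-view collection, is presumably how the method limits data transfer. A real database would normally answer this query through a spatial index instead of a linear scan.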
