Method for implementing cloud computing resource pre-allocation

A method for implementing resource pre-allocation, applied in the field of cloud computing resource management. It addresses problems such as reduced user satisfaction, failure to meet users' resource requirements, and large processing delays.

Embodiment Construction

[0033] The content of the present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments.

[0034] Example:

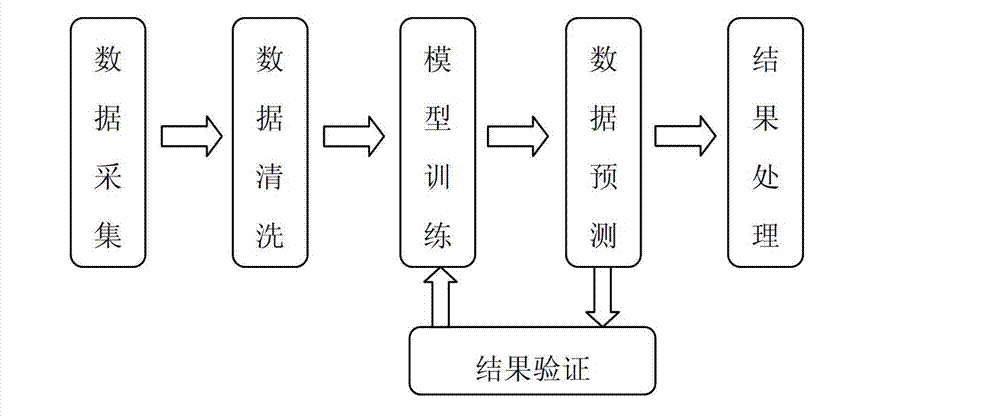

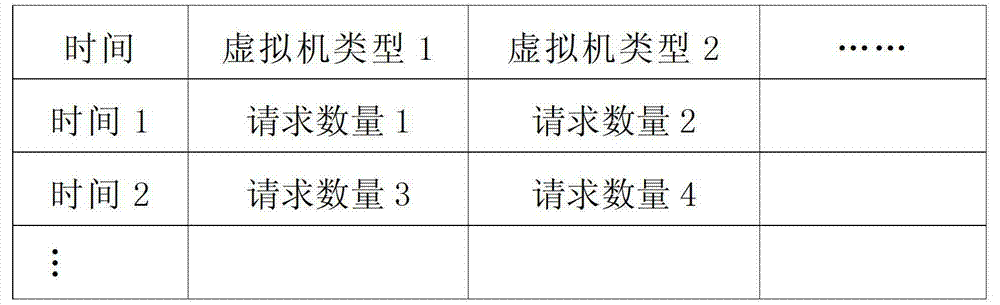

[0035] The present invention proposes a combined resource prediction method based on time series. After the user request history is obtained, the number of user requests per day is counted for each virtual machine type, yielding one time series per virtual machine type. A combined forecasting model is then used to predict the requests of the next stage. The implementation steps of the present invention are described in detail below with reference to the accompanying drawings.
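The text does not say which models make up the combined forecasting model, so the following is only a minimal sketch of the general idea, not the patented method. It assumes two simple forecasters (a moving average and simple exponential smoothing) blended with fixed weights. The function names, the window size, the smoothing factor `alpha`, and the weights are all hypothetical choices made for illustration.

```python
from typing import Sequence, Tuple


def moving_average_forecast(series: Sequence[float], window: int = 3) -> float:
    """Forecast the next value as the mean of the last `window` observations."""
    tail = list(series)[-window:]
    return sum(tail) / len(tail)


def exp_smoothing_forecast(series: Sequence[float], alpha: float = 0.5) -> float:
    """Forecast the next value with simple exponential smoothing."""
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


def combined_forecast(
    series: Sequence[float],
    weights: Tuple[float, float] = (0.5, 0.5),  # assumed weights, not from the source
) -> float:
    """Blend the two forecasts with fixed weights (an illustrative combination rule)."""
    w_ma, w_es = weights
    return (w_ma * moving_average_forecast(series)
            + w_es * exp_smoothing_forecast(series))


# Daily request counts for one virtual machine type (made-up numbers).
daily_requests = [10, 12, 14, 16]
print(combined_forecast(daily_requests))
```

In practice the same call would be repeated for the time series of each virtual machine type, and the weights would normally be tuned against historical forecast error rather than fixed.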

[0036] Based on the analysis of the problem, establishing the matrix of time and virtual machine type requires preprocessing the historical data before model training. This processing flow can be divided into several steps: data acquisition, d...
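As a rough illustration of the matrix of time and virtual machine type mentioned above, the sketch below counts requests per day and per type and fills days without requests with zero. The record format (a `(date, vm_type)` pair per request) and the function name `build_request_matrix` are assumptions for this example; the source does not describe the actual log layout or the remaining preprocessing steps.

```python
from collections import Counter
from datetime import date, timedelta


def build_request_matrix(records):
    """Build a day-by-type count matrix from (date, vm_type) request records.

    Returns (days, vm_types, matrix), where matrix[i][j] is the number of
    requests for vm_types[j] on days[i]. Days with no requests get zeros.
    """
    records = list(records)
    if not records:
        return [], [], []
    counts = Counter(records)  # (day, vm_type) -> number of requests
    vm_types = sorted({vm for _, vm in records})
    first = min(day for day, _ in records)
    last = max(day for day, _ in records)
    days = [first + timedelta(days=i) for i in range((last - first).days + 1)]
    matrix = [[counts[(day, vm)] for vm in vm_types] for day in days]
    return days, vm_types, matrix


# Hypothetical request history: two "small" requests on day 1, one "large" on day 3.
history = [
    (date(2024, 1, 1), "small"),
    (date(2024, 1, 1), "small"),
    (date(2024, 1, 3), "large"),
]
days, vm_types, matrix = build_request_matrix(history)
```

Each column of `matrix` is then the daily time series for one virtual machine type, which is the input a forecasting model would be trained on.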
