Method for acquiring dynamic scene depth based on fringe structured light

A dynamic scene depth acquisition method, applied in the field of image processing, that addresses problems such as inaccurate stripe boundary positioning, with the effect of reducing computational complexity and improving spatial resolution.


Embodiment Construction

[0034] The present invention improves on existing algorithms for acquiring dynamic scene depth with spatially coded structured light. It does not increase equipment complexity, and it improves both the spatial resolution and the accuracy of the acquired depth.

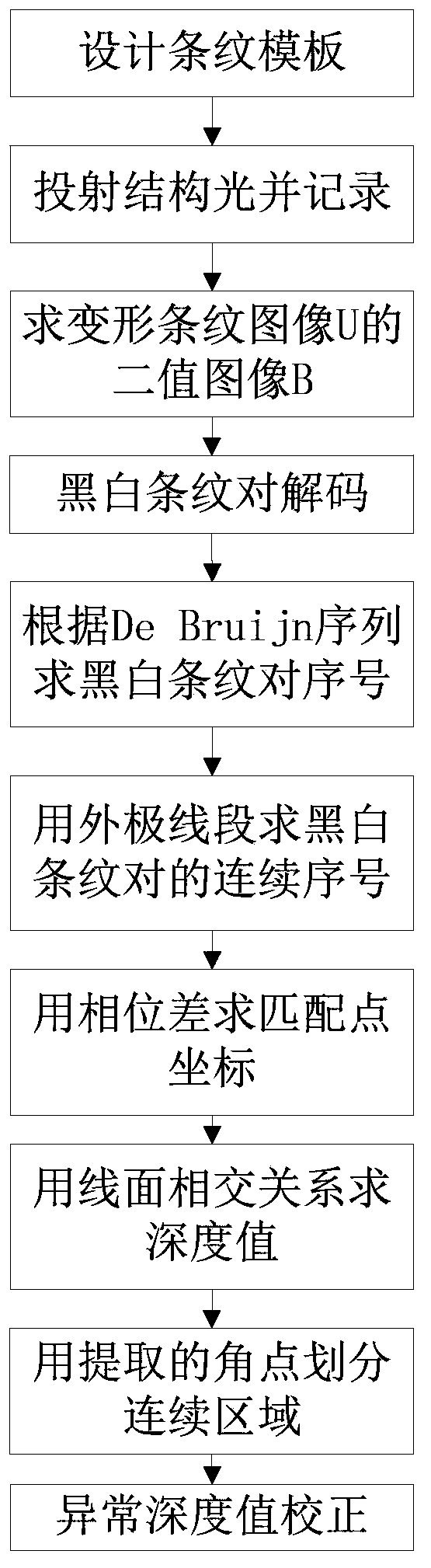

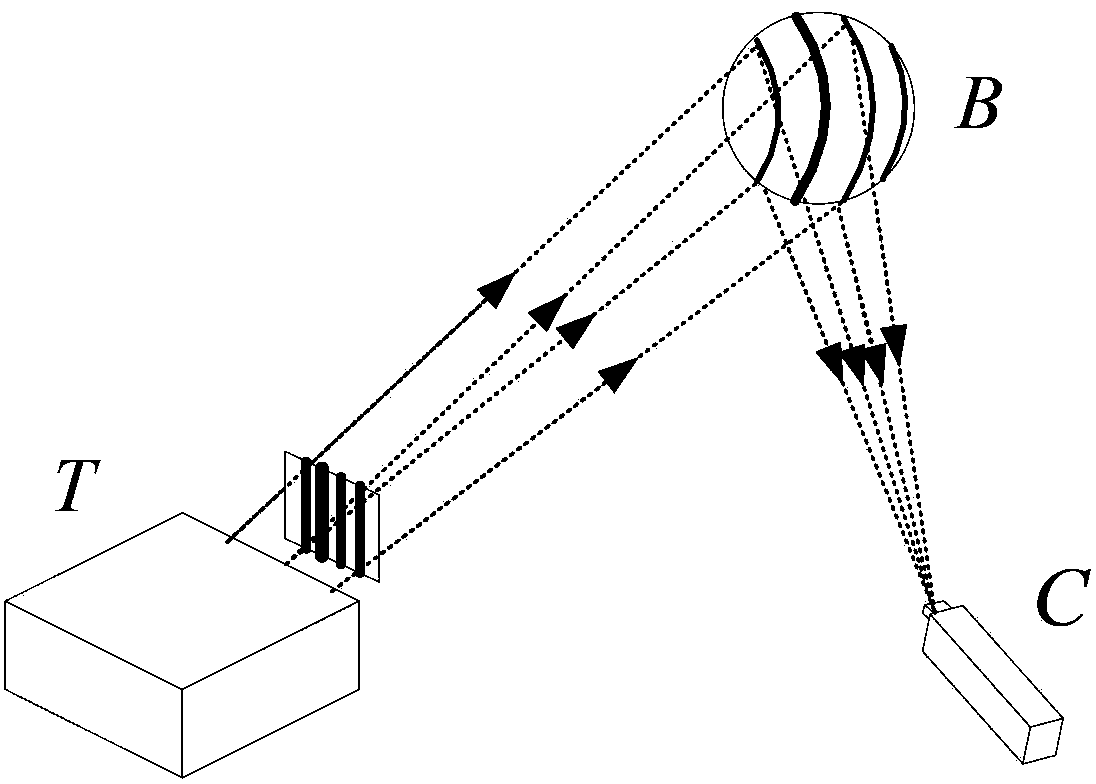

[0035] Referring to Figure 1, the present invention is a dynamic scene depth acquisition method based on stripe structured light, comprising the following steps:

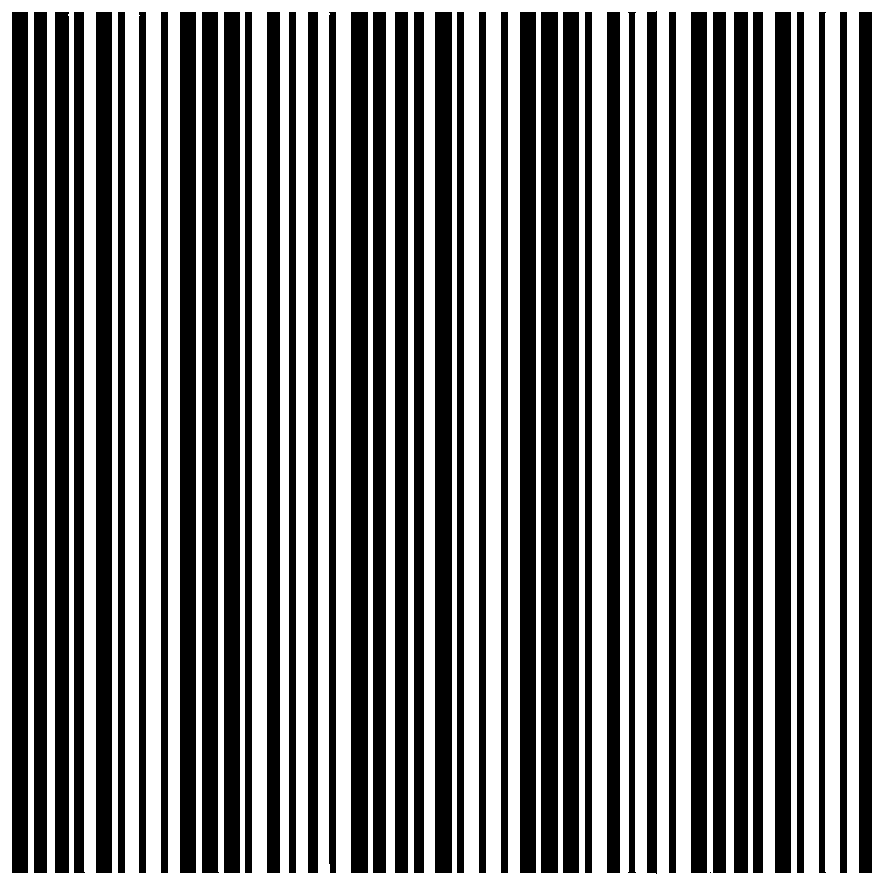

[0036] Step 1: design a template P of black and white stripes of different widths, encoded with a binary (2-symbol) De Bruijn sequence of order 3.
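A binary De Bruijn sequence of order 3 has length 2^3 = 8, and every window of 3 consecutive symbols (read cyclically) occurs exactly once. That uniqueness is what lets a group of three observed stripe codes identify its position in the template. The sketch below generates one such sequence with the standard recursive Fredricksen-Kessler-Maiorana construction and builds a window-to-position lookup. It is illustrative only: the patent text shown here does not say which B(2,3) sequence, generation method, or decoding procedure it uses, and the helper names `de_bruijn` and `window_positions` are hypothetical.

```python
def de_bruijn(k: int, n: int) -> list[int]:
    """Return a k-ary De Bruijn sequence of order n (cyclic, length k**n).

    Standard recursive (Fredricksen-Kessler-Maiorana) construction,
    used here only as an illustration.
    """
    a = [0] * (k * n)
    sequence: list[int] = []

    def db(t: int, p: int) -> None:
        if t > n:
            if n % p == 0:
                sequence.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return sequence


def window_positions(seq: list[int], n: int) -> dict[tuple[int, ...], int]:
    """Hypothetical helper: map each cyclic window of n codes to its start index."""
    positions: dict[tuple[int, ...], int] = {}
    for i in range(len(seq)):
        window = tuple(seq[(i + j) % len(seq)] for j in range(n))
        if window in positions:
            raise ValueError(f"window {window} is not unique")
        positions[window] = i
    return positions


codes = de_bruijn(2, 3)              # [0, 0, 0, 1, 0, 1, 1, 1]
lookup = window_positions(codes, 3)  # 8 distinct windows, one per start position
# A projected stripe pattern is not cyclic: appending the first n - 1 codes
# gives a linear sequence of 10 codes in which each 3-code window occurs once.
linear_codes = codes + codes[:2]
```

An observed triple such as (1, 0, 1) then maps back to `lookup[(1, 0, 1)] == 3`, i.e. the fourth stripe pair of the sequence.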

[0037] (1a) Take a pair of black and white stripes as the basic unit, with the black stripe on the left and the white stripe on the right. The sum of the widths of the black and white stripes is set to a constant L, and the ratio of the white stripe's width to L is defined as the duty cycle. When the duty cycle of the white stripe is 2/6, the code of the pair of black and white stripes ...
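Step (1a) fixes the total width of each black/white pair at L and distinguishes codes by the white stripe's duty cycle. The sketch below renders one row of such a template as 0 (black) / 1 (white) pixel values. The visible text is cut off right after the 2/6 duty cycle, so the code assigned to each duty cycle is not known here: the mapping `HYPOTHETICAL_DUTY` (2/6 for code 0, 4/6 for code 1), the value L = 6 pixels, and the function name `render_stripes` are all assumptions for illustration. The code sequence used is the binary De Bruijn sequence 00010111.

```python
from fractions import Fraction


def render_stripes(codes, period, duty_of_code):
    """Render one row of a stripe template as 0 (black) / 1 (white) pixels.

    Each code becomes a black-then-white stripe pair whose total width is
    `period` (the constant L); the white stripe is duty * L pixels wide.
    Duty cycles must be Fractions so the integer-width check is exact.
    """
    row = []
    for code in codes:
        white = duty_of_code[code] * period
        if white.denominator != 1 or not 0 < white < period:
            raise ValueError(
                f"duty cycle {duty_of_code[code]} gives no valid white width for L={period}"
            )
        white = int(white)
        row.extend([0] * (period - white))  # black stripe on the left
        row.extend([1] * white)             # white stripe on the right
    return row


# HYPOTHETICAL mapping: the source is truncated before stating which code
# each duty cycle represents; 2/6 -> 0 and 4/6 -> 1 are placeholders.
HYPOTHETICAL_DUTY = {0: Fraction(2, 6), 1: Fraction(4, 6)}
L_PIXELS = 6  # assumed value of the constant L, in projector pixels

row = render_stripes([0, 0, 0, 1, 0, 1, 1, 1], L_PIXELS, HYPOTHETICAL_DUTY)
```

Repeating this row vertically would give a vertical-stripe image; keeping L constant means stripe-pair boundaries fall on a regular grid while the code is carried by where the black-to-white edge sits inside each pair.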
