High performance server architecture system and data processing method thereof

A data processing and server technology, applied in the field of server architecture, that addresses low data processing efficiency and limited processing capacity. It improves IO throughput, increases processing capacity, and reduces processing delay.

Examples

Embodiment 1

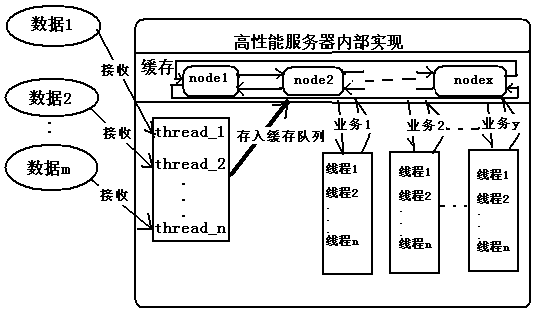

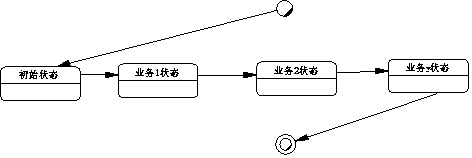

[0030] This embodiment provides a high-performance server architecture system comprising a high-speed cache unit, a multi-task concurrent processing unit, a thread pool unit, and a data batch sending unit; its internal implementation flow is shown in Figure 1. The cache unit allocates a cache queue in memory and pre-allocates the memory required by the cache nodes in that queue; node1, node2...nodex in Figure 1 are the cache nodes in the cache queue. When business data arrives, it is stored directly in these cache nodes. The cache nodes are unrelated to one another, and each cache node can be operated on independently by the business process. The multi-task concurrent processing unit divides the data processing of each cache node into multiple business states according to the business type of the business information. As shown in Figure 2, the life cycle of a business data node starts from the initial state,...
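The pre-allocated cache queue described above can be illustrated with a minimal sketch. It is not the patent's implementation: the names `CacheNode`, `CacheQueue`, `put`, and `release`, the fixed per-node capacity, the free list, and the `"initial"` state label are all assumptions made for illustration. The sketch shows only that node memory is reserved once, up front, and that each node holds its record independently of the others.

```python
from collections import deque


class CacheNode:
    """One pre-allocated slot holding a single business record (hypothetical)."""

    def __init__(self, capacity: int) -> None:
        self.buf = bytearray(capacity)  # memory reserved once, at start-up
        self.length = 0                 # number of valid bytes in buf
        self.state = "initial"          # assumed label for the initial state

    def payload(self) -> bytes:
        """Return a copy of the record currently stored in this node."""
        return bytes(self.buf[:self.length])


class CacheQueue:
    """Fixed set of cache nodes; nodes hold no references to each other."""

    def __init__(self, node_count: int, node_capacity: int) -> None:
        self.nodes = [CacheNode(node_capacity) for _ in range(node_count)]
        self.free = deque(self.nodes)   # nodes not currently holding data

    def put(self, data: bytes) -> CacheNode:
        """Copy incoming data straight into a free pre-allocated node."""
        if not self.free:
            raise BufferError("no free cache node")
        if len(data) > len(self.free[0].buf):
            raise ValueError("record larger than node capacity")
        node = self.free.popleft()
        node.buf[:len(data)] = data     # same-length slice write, no resize
        node.length = len(data)
        return node

    def release(self, node: CacheNode) -> None:
        """Return a node to the free list once its processing is finished."""
        node.length = 0
        node.state = "initial"
        self.free.append(node)
```

Because `put` writes into memory that already exists, the arrival path in this sketch allocates no new buffer per record, which is the property the pre-allocation step is meant to provide.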

Embodiment 2

[0037] This embodiment provides a high-performance server architecture system comprising a high-speed cache unit, a multi-task concurrent processing unit, a thread pool unit, and a data batch sending unit; its internal implementation flow is shown in Figure 1. The cache unit allocates a cache queue in memory and pre-allocates the memory required by the cache nodes in that queue; node1, node2...nodex in Figure 1 are the cache nodes in the cache queue. When business data arrives, it is stored directly in these cache nodes, and the cache nodes are linked in series as a doubly linked circular list. The multi-task concurrent processing unit divides the data processing of each cache node into multiple business states according to the business type of the business information. As shown in Figure 2, the life cycle of a business data node starts from the initial state, and passes through four business states: business 1...
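The doubly linked circular list that joins the cache nodes in this embodiment can be sketched as follows. This is an illustrative assumption rather than the patent's code: `RingNode`, `build_ring`, and `walk` are hypothetical names, and the sketch covers only the linking and traversal of pre-created nodes, not the business states.

```python
class RingNode:
    """A cache node with links to both neighbours (hypothetical layout)."""

    def __init__(self, index: int) -> None:
        self.index = index
        self.data = None
        self.prev = self   # a single node is its own neighbour
        self.next = self


def build_ring(count: int) -> RingNode:
    """Create `count` nodes up front and link them into a closed ring."""
    if count < 1:
        raise ValueError("ring needs at least one node")
    head = RingNode(0)
    tail = head
    for i in range(1, count):
        node = RingNode(i)
        node.prev = tail   # link back to the current last node
        node.next = head   # close the ring forwards
        tail.next = node
        head.prev = node   # close the ring backwards
        tail = node
    return head


def walk(head: RingNode, forward: bool = True) -> list:
    """Visit every node exactly once, in either direction."""
    order = []
    node = head
    while True:
        order.append(node.index)
        node = node.next if forward else node.prev
        if node is head:
            break
    return order
```

With this layout a worker can start from any node and reach every other node in either direction without a separate index structure, and the last node always leads back to the first.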
