Motion estimation method based on image features and three-dimensional information of three-dimensional visual system

A motion estimation technology for three-dimensional vision systems, applied in the field of motion estimation. It addresses problems such as the sensitivity of three-dimensional estimation to its initial value, the inability to obtain a relative three-dimensional estimate, and algorithm failure.

Embodiment Construction

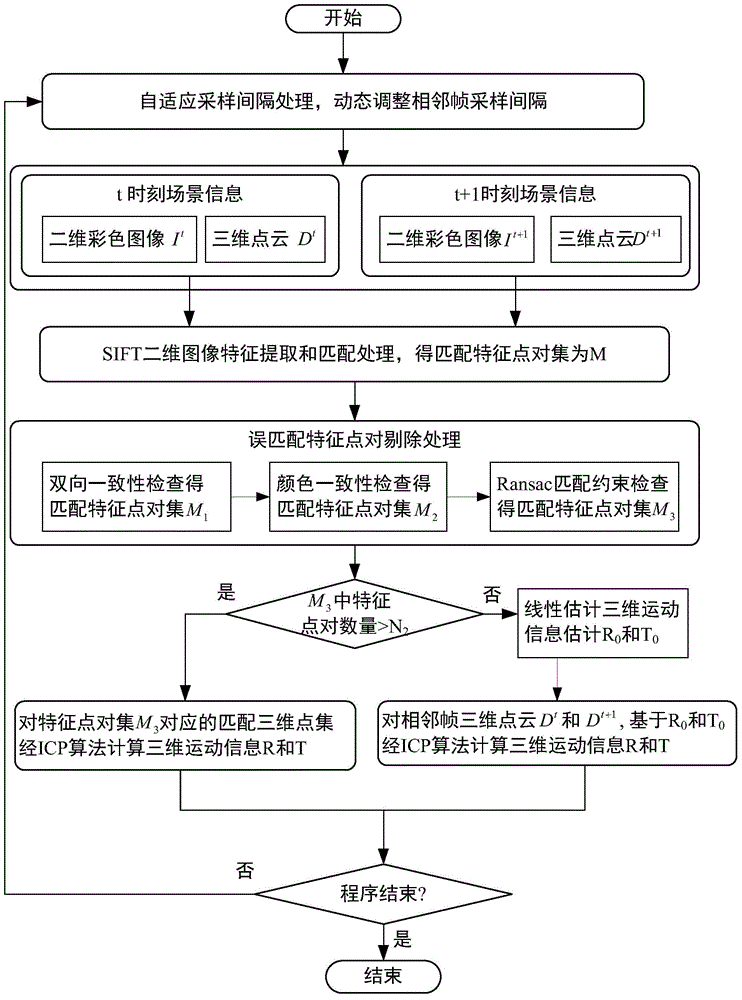

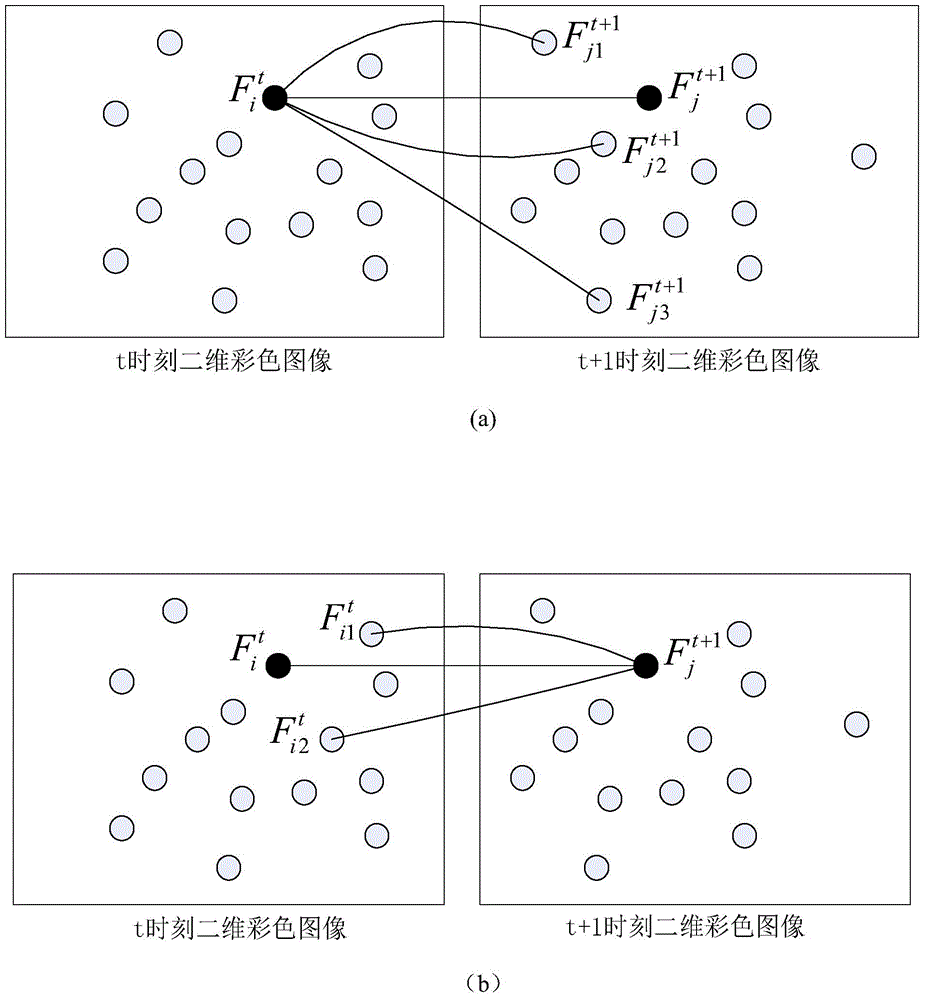

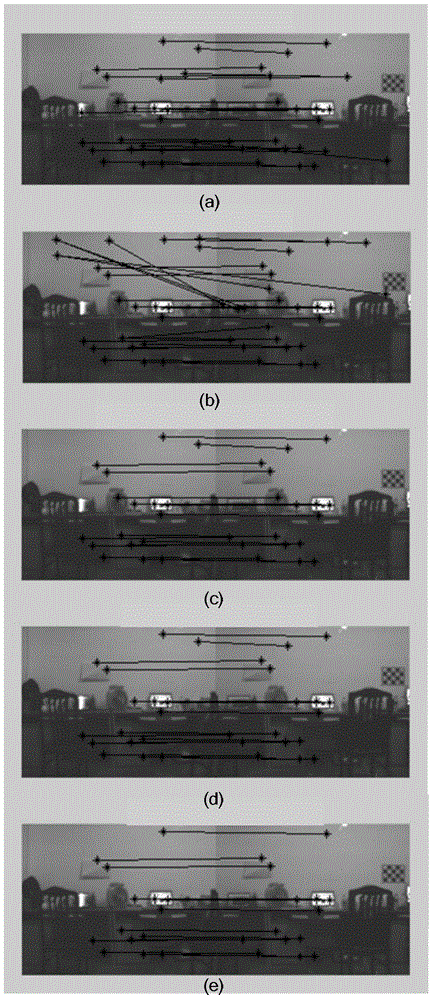

[0061] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

[0062] The 3D vision system used in the present invention may be the 2D/3D compound camera described in invention patent 201310220879.6, a Microsoft Kinect 3D camera, or a similar device. Such a system simultaneously acquires the two-dimensional color image information I of the scene and the spatial three-dimensional information D. The two are matched one-to-one according to the pixel coordinates of the two-dimensional color image: the pixel I(u,v) in the u-th row and v-th column of the color image I corresponds to the three-dimensional point D_{u,v}(x, y, z) of the point cloud D. As an application example, the present invention presents results obtained with a Kinect three-dimensional camera.
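The pixel-wise correspondence between I and D described in [0062] can be illustrated with a minimal sketch. It assumes, for illustration only, that I is stored as an H x W x 3 array of colors, that D is stored as an H x W x 3 array of (x, y, z) coordinates, and that pixels without a valid depth measurement are marked with NaN. The function names `point_at` and `valid_pairs` are hypothetical and do not come from the patent.

```python
import numpy as np

def point_at(I, D, u, v):
    """Return the color I(u, v) and the 3D point D_{u,v} for row u, column v."""
    return I[u, v], D[u, v]

def valid_pairs(I, D):
    """Collect pixel coordinates, colors and 3D points where depth is valid.

    Assumption: invalid measurements are stored as NaN in D.
    """
    mask = np.isfinite(D).all(axis=2)        # H x W mask of usable points
    rows, cols = np.nonzero(mask)            # pixel coordinates (u, v)
    pixels = np.stack([rows, cols], axis=1)  # N x 2, row-major order
    return pixels, I[mask], D[mask]          # same ordering in all three

# Tiny synthetic 2 x 2 example, not real sensor data.
I = np.arange(12, dtype=np.uint8).reshape(2, 2, 3)
D = np.array([[[0.0, 0.0, 1.0], [0.1, 0.0, 1.0]],
              [[np.nan, np.nan, np.nan], [0.1, 0.1, 1.2]]])

color, xyz = point_at(I, D, 0, 1)
pixels, colors, points = valid_pairs(I, D)
```

Because both arrays share the same row and column indices, any image feature detected at pixel (u, v) can be paired with a 3D point through a direct lookup, provided the depth at that pixel is valid.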

[0063] Such as ...
