Depth extraction method for three-viewpoint stereoscopic video constrained in the spatio-temporal domain

A technology for stereoscopic video and depth extraction, applied in stereoscopic systems, image data processing, instruments, etc.

Embodiment Construction

[0115] The present invention is described in detail below with reference to specific embodiments. The following examples will help those skilled in the art to further understand the present invention, but do not limit it in any form. It should be noted that those skilled in the art can make modifications and improvements without departing from the concept of the present invention, and all of these fall within the protection scope of the present invention.

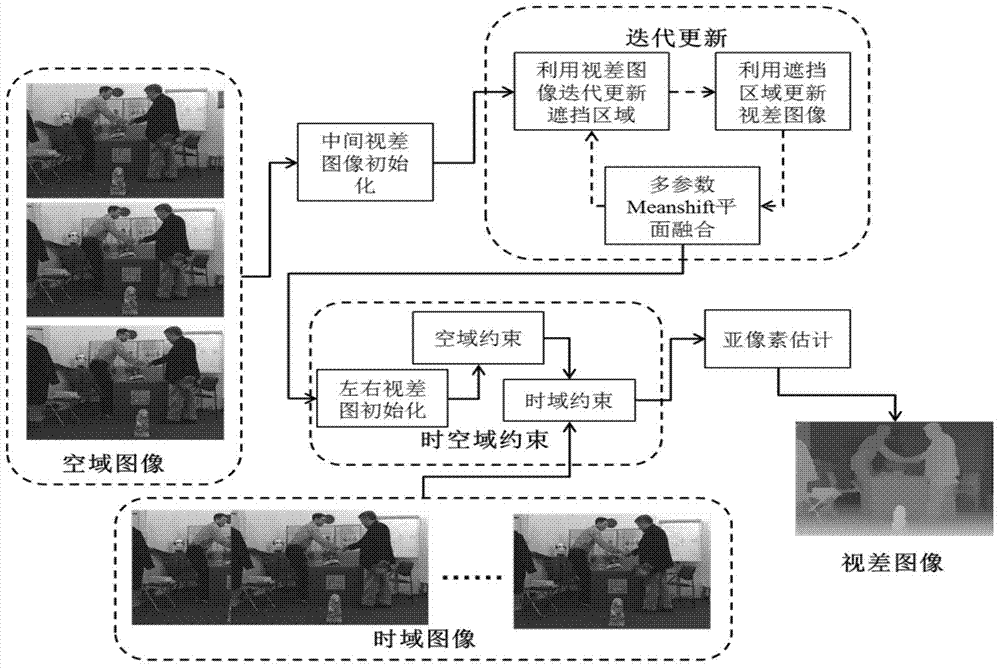

[0116] As shown in Figure 1, the three-viewpoint depth sequence estimation method of the present invention includes initialization of the disparity map of the middle viewpoint, iterative updating of the disparity map and the occlusion map, initialization of the left and right disparity maps, spatio-temporal constraints, and sub-pixel estimation.

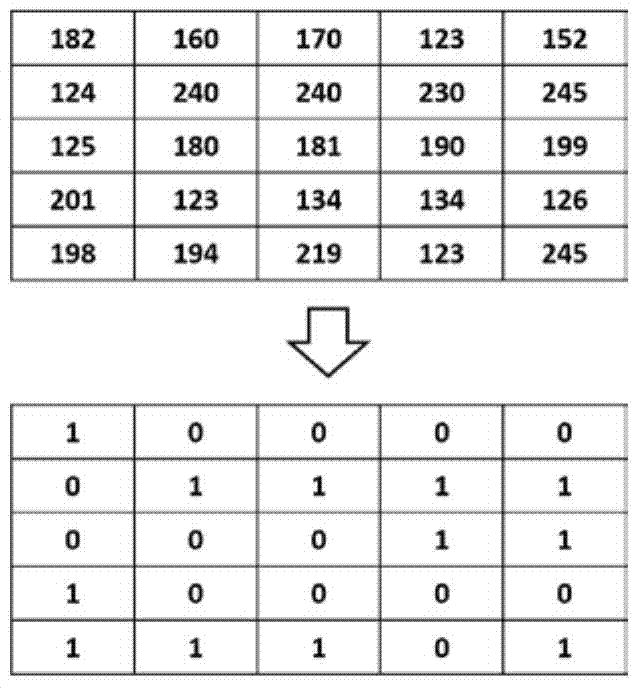

[0117] In the first step, the initial matching energy distribution is obtained for the intermediate viewpoint image I_{t,L}, using the BP al...
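As an illustration of what an initial matching energy for the middle viewpoint can look like, the following is a minimal sketch and not the formulation of the present invention, whose details are truncated above. It assumes rectified grayscale views with equal baselines, a convention in which middle-view pixel x corresponds to pixel x + d in the left view and x - d in the right view, an absolute-difference cost, and taking the smaller of the two costs as a crude way of tolerating occlusion in one side view. The function name `matching_cost_volume` and its parameters are hypothetical.

```python
import numpy as np


def matching_cost_volume(mid, left, right, max_disp):
    """Per-pixel matching cost of the middle view for each integer disparity.

    mid, left, right: 2-D float arrays (rectified grayscale, same shape).
    max_disp must be smaller than the image width.
    Returns an array of shape (max_disp + 1, H, W).
    """
    h, w = mid.shape
    big = 255.0  # assumed cost where the shifted pixel falls outside the image
    cost = np.empty((max_disp + 1, h, w), dtype=np.float64)
    for d in range(max_disp + 1):
        cost_l = np.full((h, w), big)
        cost_r = np.full((h, w), big)
        # Assumed convention: middle x matches left x + d and right x - d.
        cost_l[:, : w - d] = np.abs(mid[:, : w - d] - left[:, d:])
        cost_r[:, d:] = np.abs(mid[:, d:] - right[:, : w - d])
        # Keep the better of the two side views for each pixel.
        cost[d] = np.minimum(cost_l, cost_r)
    return cost
```

Taking `cost.argmin(axis=0)` gives a winner-take-all disparity per pixel, which is useful only as a sanity check on the cost volume; it is a stand-in and not the optimization step that the description refers to.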
