Head Pose Estimation Method Based on Multi-feature Point Set Active Shape Model

An active shape model and head pose technology, applied in computing, computer components, character and pattern recognition, and related fields. It addresses unstable feature point positioning, which degrades the accuracy of head pose estimation, and improves accuracy by overcoming inaccurate positioning.

Embodiment Construction

[0032] The present invention is further described below with reference to the accompanying drawings.

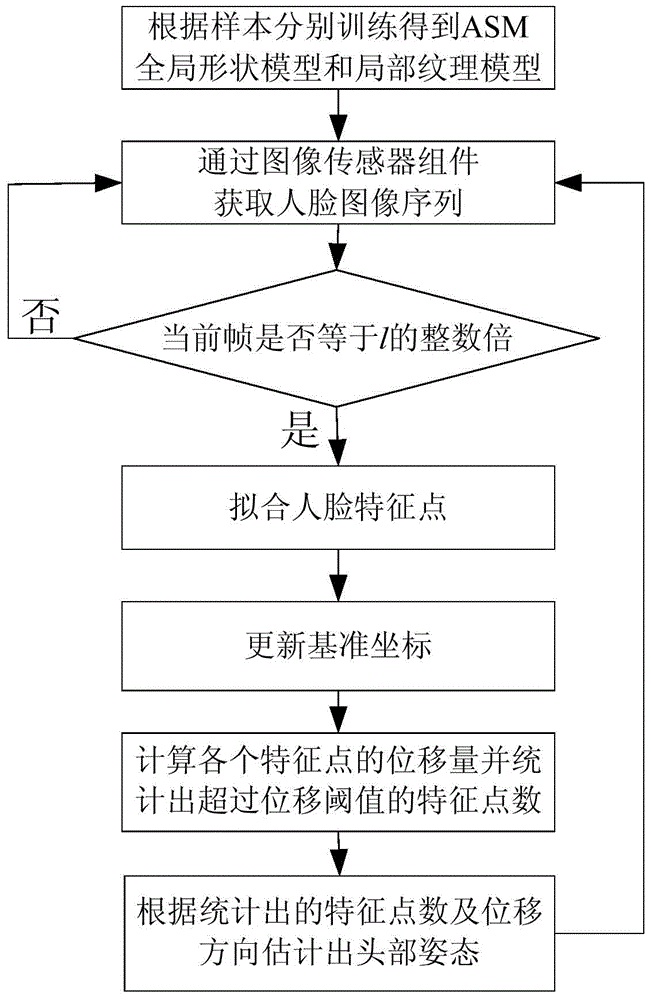

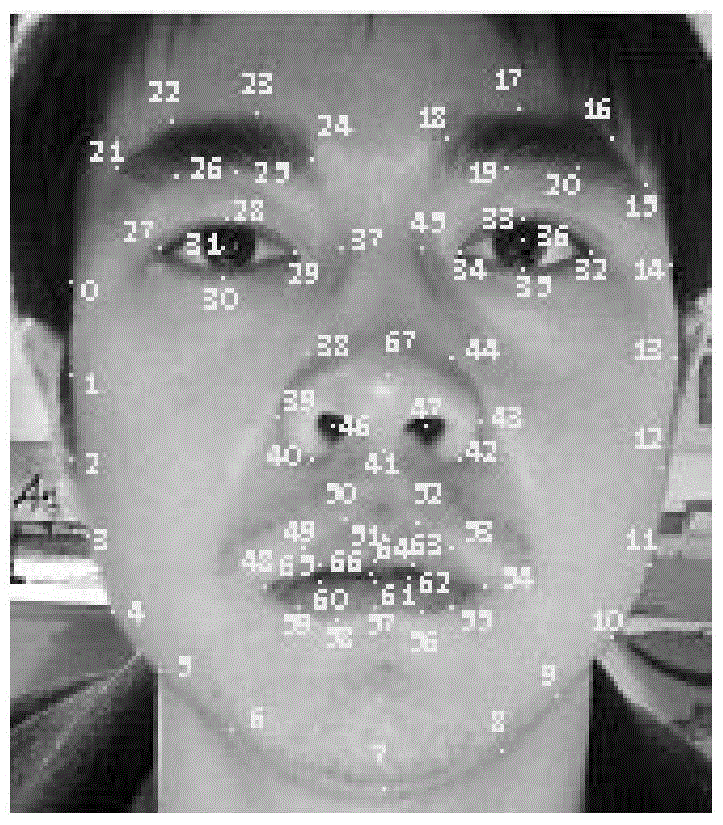

[0033] ASM is based on the Point Distribution Model (PDM). By training on image samples, it obtains statistical information about the distribution of feature points in the samples, along with the directions in which the feature points are allowed to vary, so that the corresponding feature point locations can be found on a target image. The training samples require manual annotation of the positions of all feature points; the coordinates of the feature points are recorded, and a local grayscale model is computed for each feature point to serve as the feature vector for local feature point adjustment. The trained model is then placed on the target image to find the next position of each feature point. The local grayscale model is used to find the feature point with the smallest Mahalanobis distance of the local grayscale model in the direction specified by the current feature p...
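The local search step described above can be illustrated with a minimal sketch. It assumes each landmark's local grayscale model is summarized by the mean and covariance of gray-level profiles sampled from the training images, and that candidate positions are scored by squared Mahalanobis distance to that model. The function names (`fit_profile_model`, `mahalanobis_sq`, `best_candidate`) and the profile length are hypothetical and chosen for illustration only; the source does not specify them.

```python
import numpy as np

def fit_profile_model(profiles):
    """Fit a local grayscale model for one landmark (illustrative assumption).

    profiles: array of shape (n_samples, k), one gray-level profile per
    training image, sampled around the manually marked landmark.
    Returns the mean profile and the (pseudo-)inverse covariance.
    """
    profiles = np.asarray(profiles, dtype=float)
    mean = profiles.mean(axis=0)
    cov = np.cov(profiles, rowvar=False)
    # The pseudo-inverse keeps the sketch usable if the covariance is singular.
    cov_inv = np.linalg.pinv(cov)
    return mean, cov_inv

def mahalanobis_sq(profile, mean, cov_inv):
    """Squared Mahalanobis distance between a candidate profile and the model."""
    d = np.asarray(profile, dtype=float) - mean
    return float(d @ cov_inv @ d)

def best_candidate(candidates, mean, cov_inv):
    """Return the index of the candidate profile closest to the model,
    together with the distance of every candidate."""
    dists = [mahalanobis_sq(c, mean, cov_inv) for c in candidates]
    return int(np.argmin(dists)), dists
```

In use, one model would be fitted per landmark from the training profiles, and `best_candidate` would be called with the profiles sampled at each candidate position along the search direction; the landmark is then moved to the position whose index is returned.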
