Three-dimensional mixed minimum perceivable distortion model based on depth image rendering

A distortion model technology based on depth images, applied in image communication, stereoscopic systems, electrical components, and related fields. It addresses two problems: visual redundancy is not removed, and existing models cannot accurately reflect the human eye's perception of stereo video distortion. The effect is a saving in bit rate.


Embodiment Construction

[0033] To help those of ordinary skill in the art understand and implement the present invention, it is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the embodiments described here serve only to illustrate and explain the present invention and are not intended to limit it.

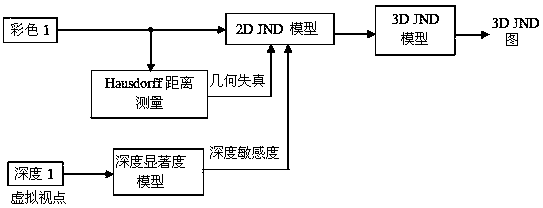

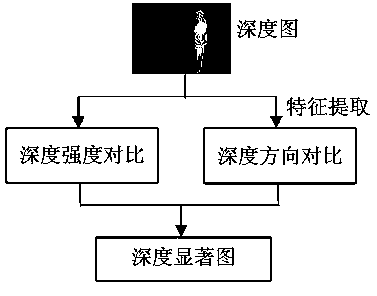

[0034] Referring to Figure 1, Figure 2, and Figure 3, the technical solution adopted by the present invention is a three-dimensional mixed minimum perceivable distortion model based on depth image rendering, comprising the following steps:

[0035] Step 1: Calculate the 2D minimum perceivable distortion value of the input image. This is implemented by nonlinearly adding the brightness adaptive factor and the texture masking factor of the input image and subtracting the overlap effect between the brightness adaptive factor and the texture masking factor ...
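As a rough illustration of the combination described in Step 1, the sketch below adds two per-pixel thresholds and subtracts an overlap term. The source text is truncated before it gives the exact formula, so everything here is an assumption: the overlap is modelled as a gain times the smaller of the two factors, a form often seen in the minimum perceivable distortion literature, and the function names, the `overlap_gain` parameter, and its default value of 0.3 are hypothetical. How the two factors themselves are computed is not covered.

```python
def combine_masking(brightness, texture, overlap_gain=0.3):
    """Combine two per-pixel thresholds: their sum minus an overlap term.

    Assumption: the overlap is overlap_gain * min(brightness, texture).
    overlap_gain is a hypothetical parameter; the source gives no value.
    """
    if not 0.0 <= overlap_gain <= 1.0:
        raise ValueError("overlap_gain must lie in [0, 1]")
    return brightness + texture - overlap_gain * min(brightness, texture)


def jnd_map_2d(brightness_map, texture_map, overlap_gain=0.3):
    """Apply combine_masking pixel by pixel to two equally sized 2D lists."""
    if len(brightness_map) != len(texture_map):
        raise ValueError("maps must have the same number of rows")
    result = []
    for b_row, t_row in zip(brightness_map, texture_map):
        if len(b_row) != len(t_row):
            raise ValueError("rows must have the same length")
        result.append(
            [combine_masking(b, t, overlap_gain) for b, t in zip(b_row, t_row)]
        )
    return result


if __name__ == "__main__":
    # Toy 1x2 maps with made-up threshold values, for illustration only.
    print(jnd_map_2d([[4.0, 1.0]], [[2.0, 3.0]], overlap_gain=0.5))
```

With an overlap gain of 0 the result is a plain sum of the two factors; with a gain of 1 it reduces to the larger of the two. The gain therefore controls how much of the shared masking effect is counted twice.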

