Voice emotion recognition method based on multi-fractal and information fusion

A speech emotion recognition technology based on multifractal analysis, applied in the information field, that addresses the problems of insufficient acoustic features, the chaotic characteristics of speech signals, and low recognition accuracy.

Embodiment Construction

[0048] The present invention will be described in further detail below in conjunction with the accompanying drawings.

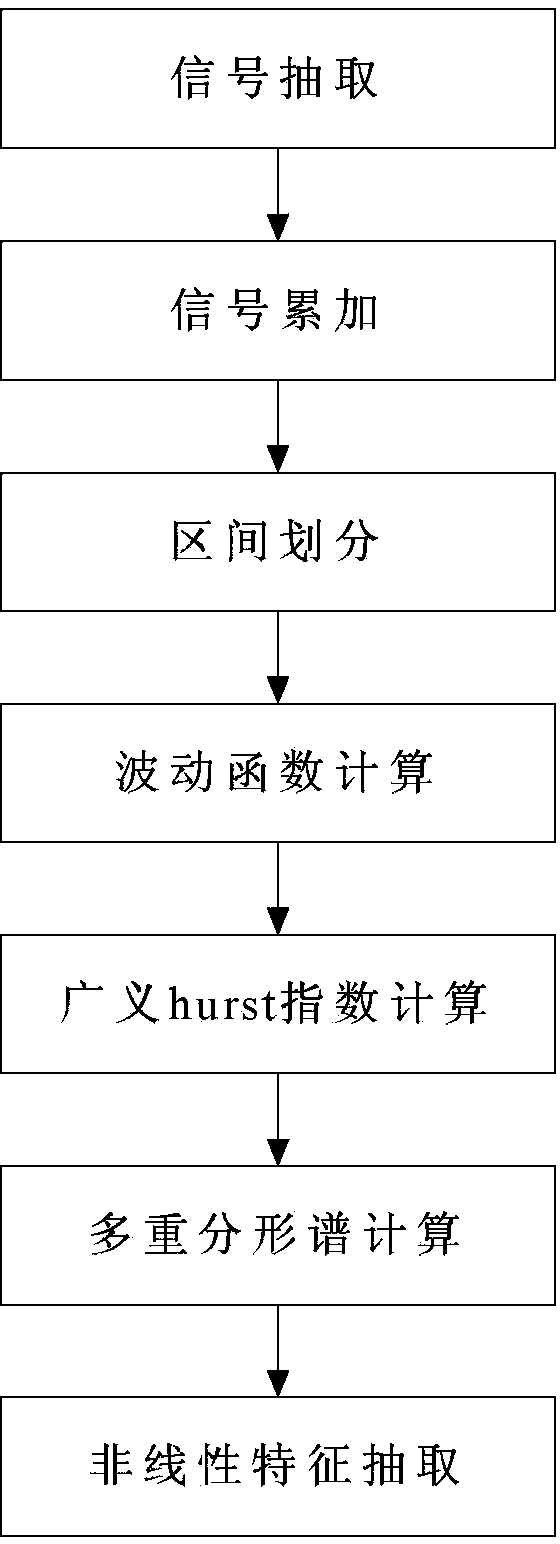

[0049] The present invention is a speech emotion recognition method based on nonlinear analysis, wherein:

[0050] Step 1: The speech emotion database is the Mandarin speech database of Beihang University. The database covers seven emotion categories: sadness, anger, surprise, fear, joy, disgust and calmness. For each of anger, joy, sadness and calmness, 180 speech samples are selected, giving a total of 720 speech samples for emotion recognition. Among them, the first 260 speech samples are used to train the recognition model, and the last 180 speech samples are used to test the performance of the recognition model.
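The sample selection in Step 1 can be sketched as follows. This is only an illustration under stated assumptions: the sample identifiers are hypothetical placeholders (the database's real file naming is not given in the text), the helper names `build_sample_list` and `split_first_last` are invented for this sketch, and the shuffle that interleaves the emotions before taking the first and last samples is an assumption, since the text does not say how the samples are ordered. The counts 180, 260 and 180 are taken as stated in the text.

```python
import random

EMOTIONS = ["anger", "joy", "sadness", "calmness"]  # the four selected categories
PER_EMOTION = 180                                   # samples selected per category


def build_sample_list(emotions=EMOTIONS, per_emotion=PER_EMOTION):
    """Return (sample_id, label) pairs; the ids are hypothetical placeholders."""
    return [(f"{emo}_{i:03d}", emo) for emo in emotions for i in range(per_emotion)]


def split_first_last(samples, n_train, n_test):
    """Take the first n_train samples for training and the last n_test for testing."""
    if n_train + n_test > len(samples):
        raise ValueError("train and test sets would overlap")
    return samples[:n_train], samples[-n_test:]


samples = build_sample_list()
# Assumption: interleave the emotions so both subsets contain all four labels.
random.Random(0).shuffle(samples)
train, test = split_first_last(samples, n_train=260, n_test=180)

print(len(samples), len(train), len(test))
```

A fixed seed keeps the split reproducible; with a database that already stores the samples in a mixed order, the shuffle would be dropped.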

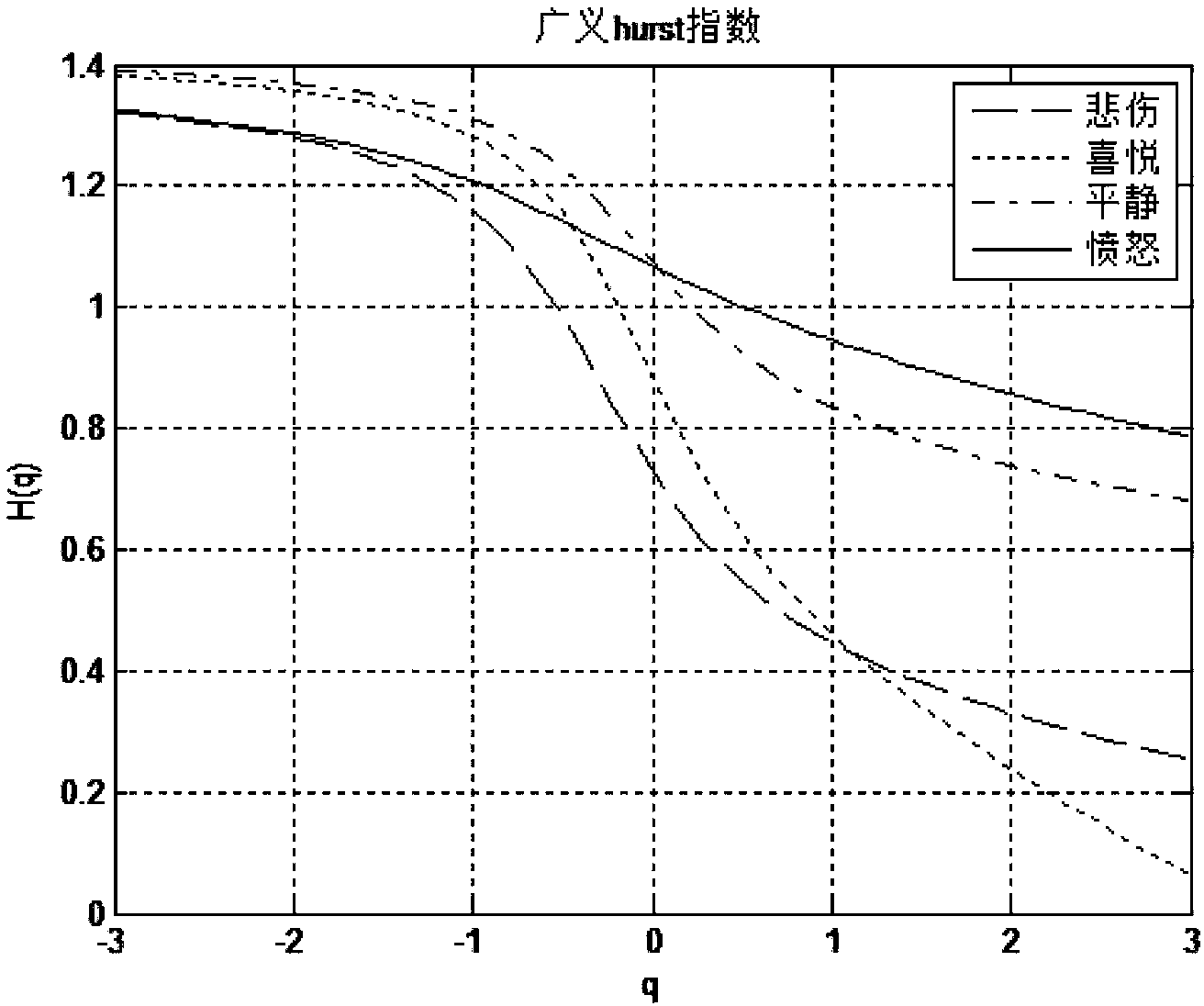

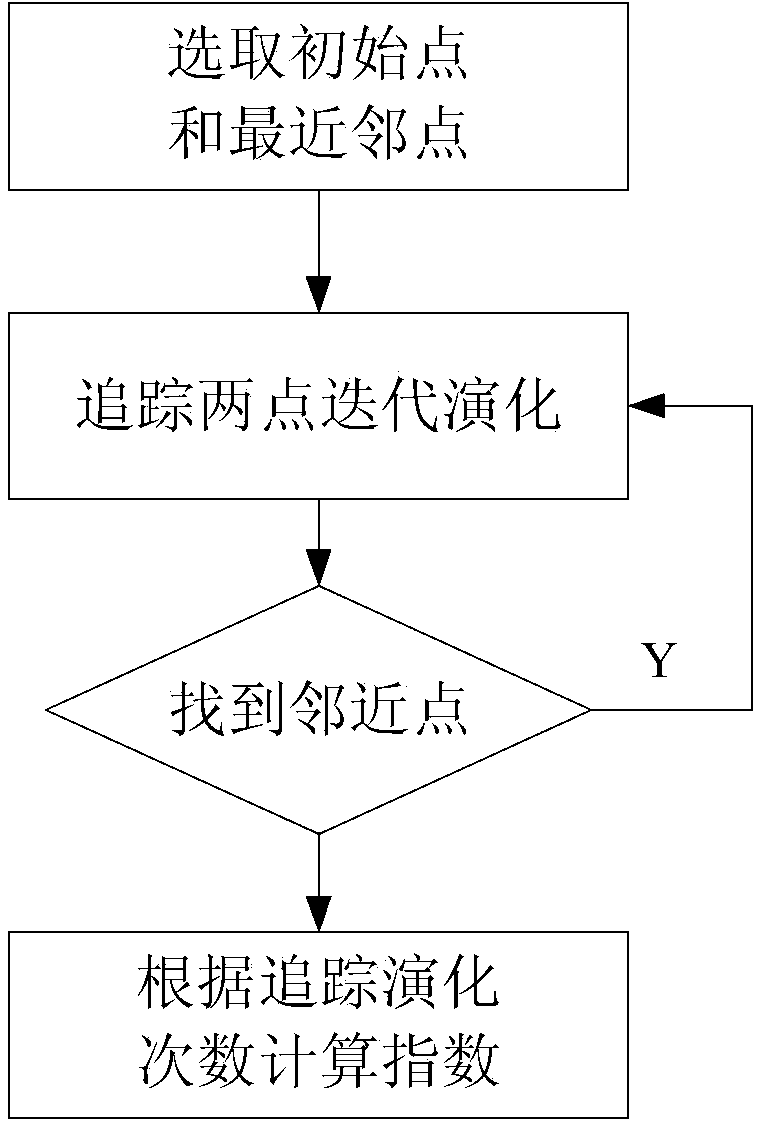

[0051] Step 2: The chaotic characteristics of the speech signal are identified using the Lyapunov exponent. As shown in Figure 2, the Lyapunov exponent refers to the divergence or convergence rate of the orbits generated by two initial value...
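The idea of a Lyapunov exponent as the average divergence or convergence rate of two nearby orbits can be illustrated with a minimal sketch. It does not reproduce the procedure of the invention for speech signals, which is not given in full here; as an assumption for illustration it uses the logistic map as a stand-in system, and the function names `logistic` and `largest_lyapunov` as well as all parameter values are hypothetical. A positive result indicates diverging orbits (chaotic behaviour), a negative result indicates converging orbits.

```python
import math


def logistic(x, r):
    """One step of the logistic map, used here only as a stand-in dynamical system."""
    return r * x * (1.0 - x)


def largest_lyapunov(r=4.0, x0=0.3, d0=1e-9, n_steps=20000, n_transient=100):
    """Estimate the Lyapunov exponent from two orbits started a distance d0 apart."""
    x = x0
    for _ in range(n_transient):      # discard the initial transient
        x = logistic(x, r)
    y = x + d0                        # second orbit, slightly perturbed
    log_sum = 0.0
    used = 0
    for _ in range(n_steps):
        x = logistic(x, r)
        y = logistic(y, r)
        d = abs(y - x)
        if d == 0.0:                  # orbits merged numerically: re-seed and skip
            y = x + d0
            continue
        log_sum += math.log(d / d0)   # growth of the separation in this step
        used += 1
        # Renormalise: keep the direction, reset the separation to d0.
        y = x + d0 * (1.0 if y > x else -1.0)
    return log_sum / used


lam_chaotic = largest_lyapunov(r=4.0)   # chaotic regime of the logistic map
lam_stable = largest_lyapunov(r=2.5)    # regime with a stable fixed point
print(lam_chaotic, lam_stable)
```

For the logistic map with r = 4 the exponent is known analytically to be ln 2 (about 0.693), so the first estimate should come out close to that value, while the second should be negative. For a measured speech signal the map is not known, so the exponent would have to be estimated from the reconstructed phase space of the signal instead.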
