A virtual-real fusion method for multiple video streams and 3D scenes

A virtual-real fusion technique for three-dimensional scenes, applied in the field of virtual reality. It addresses the problem that a virtual scene cannot reflect dynamic changes in the real environment. Its stated effects are improved scene realism and user experience, good scalability, and a stronger association between time and space.

Embodiment Construction

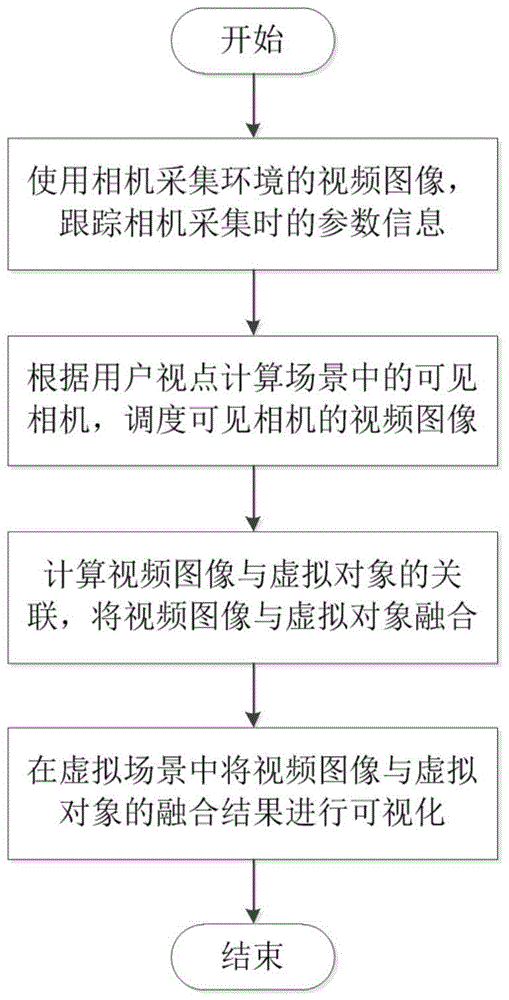

[0026] The present invention is described in further detail below with reference to the accompanying drawings and examples. The process flow of the proposed method for virtual-real fusion of multiple video streams and a three-dimensional scene is shown in Figure 1. The steps are as follows:

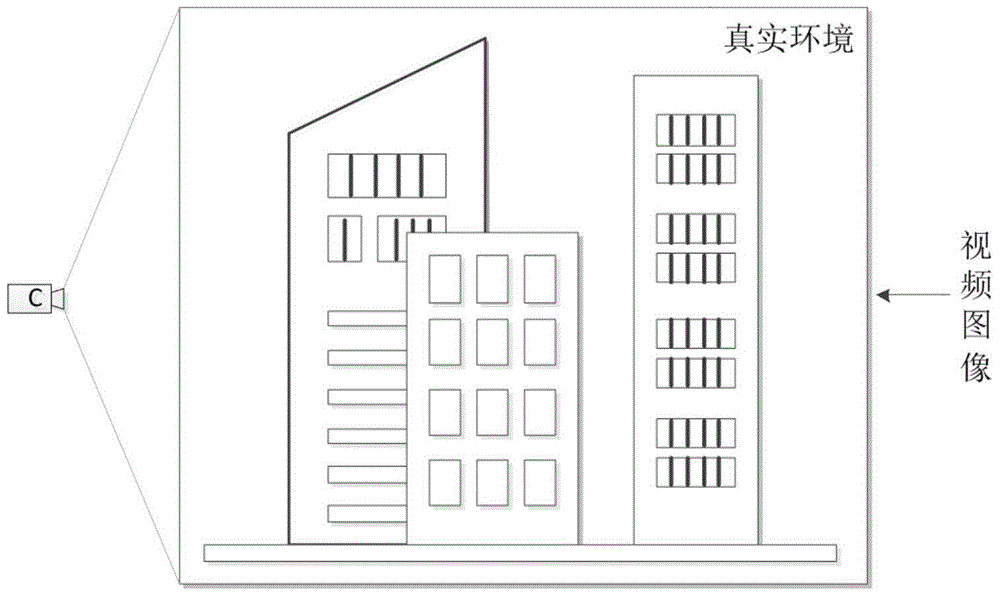

[0027] Step 1. Use one or more cameras to capture video images of the environment, as shown in Figure 2. Track the camera's parameter information; tracking can be carried out by means such as sensor recording and offline image analysis. The camera parameters obtained by tracking must be transformed into the coordinate system of the virtual environment to complete the fusion process.
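The coordinate transformation in Step 1 can be illustrated with a minimal sketch. The source does not specify how the transformation is performed, so everything here is an assumption: the tracked camera pose is taken to be a 4x4 homogeneous matrix, and the mapping from the tracking frame to the virtual scene is taken to be a known similarity transform (uniform scale, rotation about the z axis, translation). The function names and numeric values are hypothetical.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def similarity(scale, yaw_deg, translation):
    """Build a 4x4 transform: uniform scale, rotation about z, then translation.

    Assumption: the tracking frame and the scene frame differ only by such
    a similarity transform, which must be calibrated beforehand.
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [
        [scale * c, -scale * s, 0.0, tx],
        [scale * s, scale * c, 0.0, ty],
        [0.0, 0.0, scale, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def camera_pose_in_scene(tracker_to_scene, camera_to_tracker):
    """Express the tracked camera pose in virtual-scene coordinates."""
    return mat_mul(tracker_to_scene, camera_to_tracker)


# Hypothetical tracked pose: camera at (1, 2, 3), axes aligned with the tracker frame.
camera_to_tracker = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0, 2.0],
    [0.0, 0.0, 1.0, 3.0],
    [0.0, 0.0, 0.0, 1.0],
]
# Hypothetical calibration: scale 2, rotate 90 degrees about z, shift 10 along x.
tracker_to_scene = similarity(2.0, 90.0, (10.0, 0.0, 0.0))

pose = camera_pose_in_scene(tracker_to_scene, camera_to_tracker)
position = [row[3] for row in pose[:3]]  # camera position in scene coordinates
```

With these example values the camera position in the scene comes out at approximately (6, 2, 6). In practice the calibration transform would come from whatever registration procedure aligns the tracker with the 3D model.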

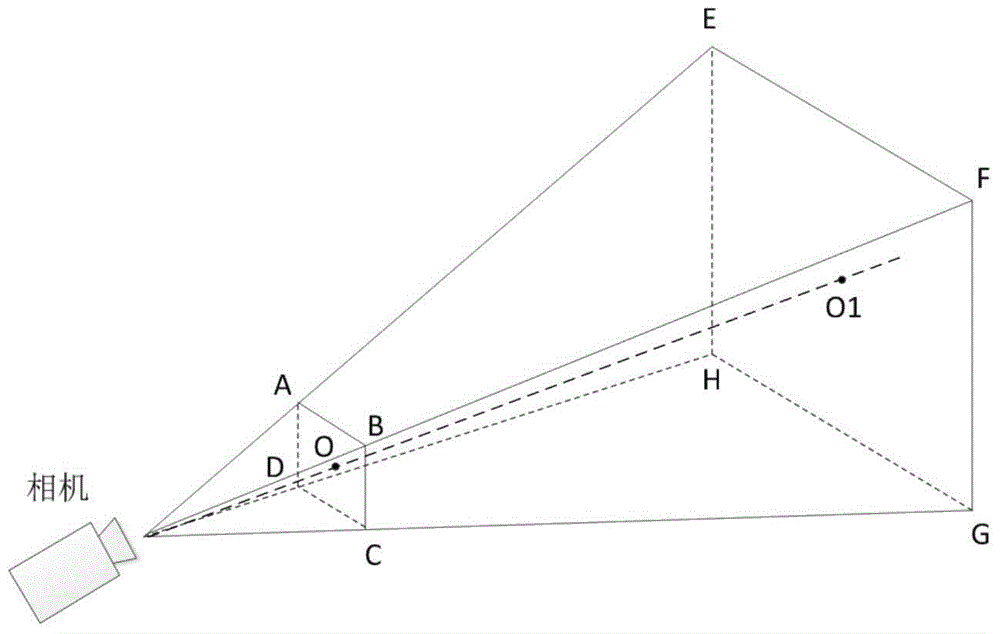

[0028] Step 2. Construct the camera's viewing frustum in three-dimensional space from the camera's parameter information, as shown in Figure 3. In Figure 3, the hexahedron ABCD-EFGH represents the viewing frustum of a c...
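As an illustration of building a frustum from camera parameters, the sketch below computes the eight corner points of a hexahedral frustum in camera coordinates. The source text is truncated and does not say which parameters are used or how the corners A to H are ordered, so the vertical field of view, aspect ratio, near and far distances, the camera looking along the negative z axis, and the corner ordering are all assumptions made for this example.

```python
import math


def frustum_corners(fov_y_deg, aspect, near, far):
    """Return 8 frustum corners in camera coordinates.

    The first four corners lie on the near plane and the last four on the
    far plane, each ordered bottom-left, bottom-right, top-right, top-left.
    Assumption: the camera looks along -z with y pointing up.
    """
    t = math.tan(math.radians(fov_y_deg) / 2.0)
    corners = []
    for depth in (near, far):
        half_h = depth * t          # half the plane height at this depth
        half_w = half_h * aspect    # half the plane width at this depth
        for sx, sy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
            corners.append((sx * half_w, sy * half_h, -depth))
    return corners


# Hypothetical camera: 90 degree vertical field of view, 2:1 aspect ratio.
corners = frustum_corners(90.0, 2.0, 1.0, 10.0)
near_plane, far_plane = corners[:4], corners[4:]
```

To place the frustum in the virtual scene, each corner would then be multiplied by the camera's pose matrix in scene coordinates.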
